Loubna Ben Allal

@LoubnaBenAllal1

SmolLMs @huggingface

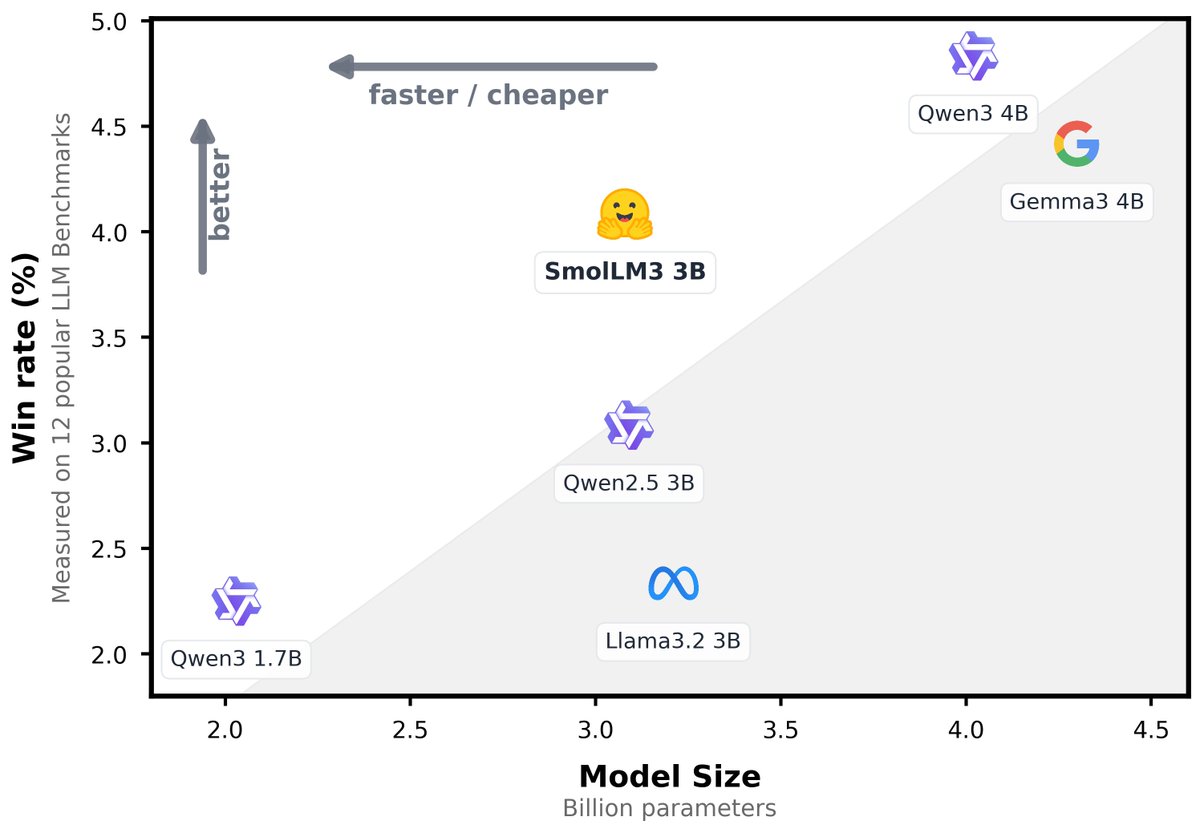

Introducing SmolLM3: a strong, smol reasoner! > SoTA 3B model > dual mode reasoning (think/no_think) > long context, up to 128k > multilingual: en, fr, es, de, it, pt > fully open source (data, code, recipes) huggingface.co/blog/smollm3

Hey fellow researchers and hackers, I’m looking at about a petabyte of raw code for The Next Big Dataset. What is on your wish list for code data features that you wanna see? 🎅

SmolLM 3 made by @huggingface is now available in the app 🤗 - SOTA 3B model - Dual mode reasoning - Multilingual (6 languages) SmolLM 3 outperforms other 3B models while staying competitive with larger 4B models. Running on-device on iPhone and iPad. Optimized for Apple MLX.

From GPT to MoE: I reviewed & compared the main LLMs of 2025 in terms of their architectural design from DeepSeek-V3 to Kimi 2. Multi-head Latent Attention, sliding window attention, new Post- & Pre-Norm placements, NoPE, shared-expert MoEs, and more... magazine.sebastianraschka.com/p/the-big-llm-…

Bye Qwen3-235B-A22B, hello Qwen3-235B-A22B-2507! After talking with the community and thinking it through, we decided to stop using hybrid thinking mode. Instead, we’ll train Instruct and Thinking models separately so we can get the best quality possible. Today, we’re releasing…

Reachy Mini and SmolLM3 are featured in GitHub's weekly news! 🚀 🚀

We've just released 100+ intermediate checkpoints and our training logs from SmolLM3-3B training. We hope this can be useful to researchers working on mechanistic interpretability, training dynamics, RL and other topics :) Training logs: -> Usual training loss (the gaps in the loss are due…

Really cool to see SmolLM3, the current state-of-the-art 3B model, land on @Azure AI. @Microsoft is a strong force in small, efficient models, as shown with the Phi family and others, and we've been enjoying partnering closely with them on this and other topics. Thanks @satyanadella…

HF's got a couple papers at COLM 2025 covering all stages of open model life! 📚Data: FineWeb2 (led by @HKydlicek and @gui_penedo) 🧱Model creation: SmolLM2 (led by @LoubnaBenAllal1) and SmolVLM (led by @andimarafioti ) 🧪Evals: YourBench (led by @sumukx) Good job team! 🎉

It is cool to be capable. It is cool to know shit. That's why the HF team is open-sourcing not just the model, but the training code and datasets too. Learn. Build. Make it your own. github.com/huggingface/sm…

We're releasing SmolTalk2: the dataset we used to post-train SmolLM3-3B! Our model wouldn't be fully open-source without the dataset we used to train it, so we're including all our processed data with the details to replicate our post-training. huggingface.co/datasets/Huggi… (1/3)

Happy to introduce Kimina-Prover-72B! Reaching 92.2% on miniF2F using test-time RL. It can solve IMO problems using more than 500 lines of Lean 4 code! Check our blog post here: huggingface.co/blog/AI-MO/kim… And play with our demo! demo.projectnumina.ai

Liquid AI open-sources a new generation of edge LLMs! 🥳 I'm so happy to contribute to the open-source community with this release on @huggingface! LFM2 is a new architecture that combines best-in-class inference speed and quality into 350M, 700M, and 1.2B models.

v1.10.4 is out with support for SmolLM3! Android: play.google.com/store/apps/det… iOS: Coming soon. In the meantime, try it on TestFlight: testflight.apple.com/join/B3KE74MS

One of many training anecdotes. Would people be interested in more of these behind-the-scenes stories?

Here’s a nice example which highlights the messiness of AI R&D and why I’m somewhat bearish on its automation in the near future. In this example @eliebakouch and I were a bit stumped on how to preserve the long context performance of the SmolLM3 base model after post-training.…

when your multiple recent releases cross each other on the front page of Reddit