Sebastian Raschka

@rasbt

ML/AI researcher & former stats professor turned LLM research engineer. Author of "Build a Large Language Model From Scratch" (https://amzn.to/4fqvn0D).

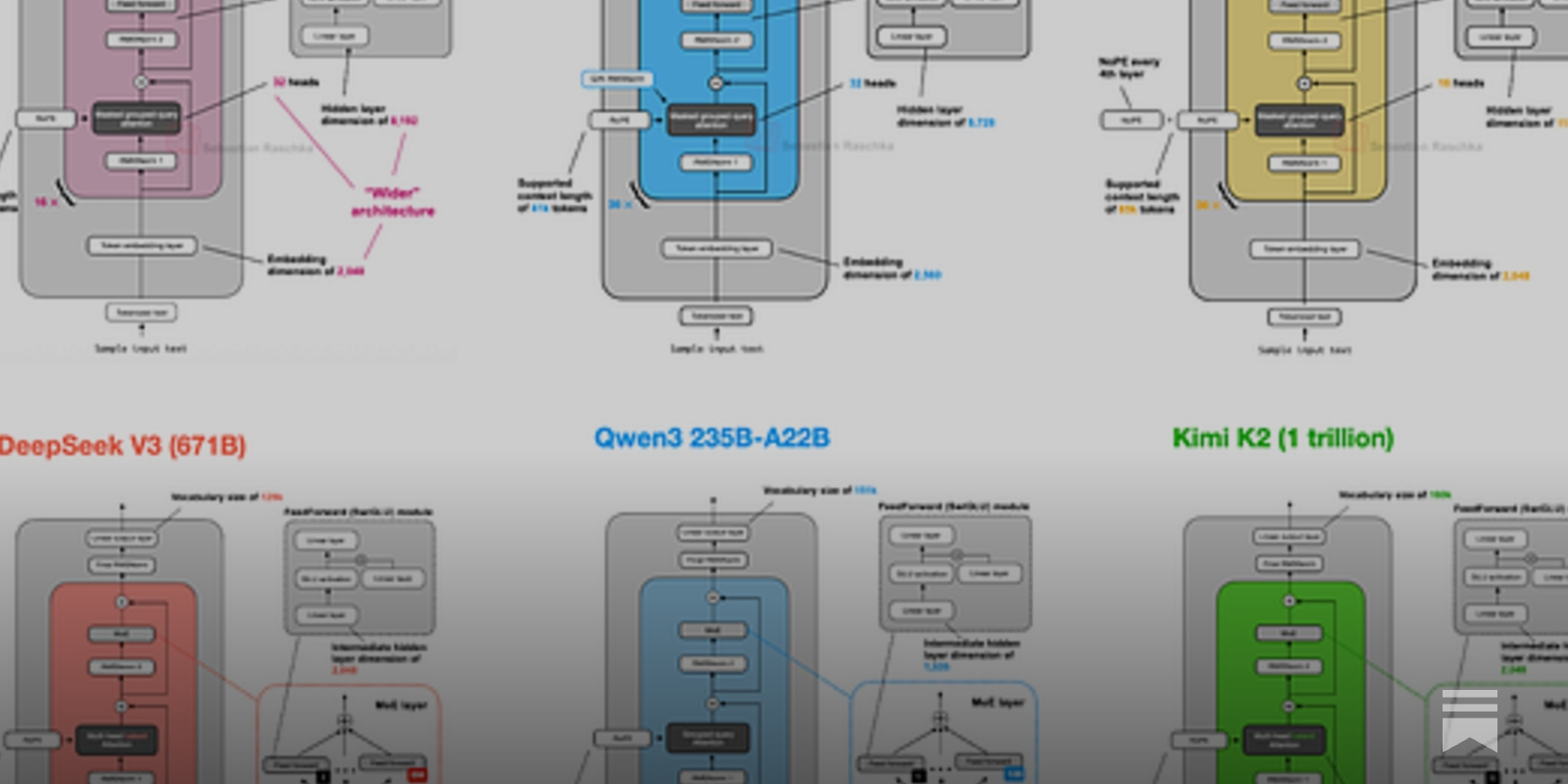

From GPT to MoE: I reviewed & compared the main LLMs of 2025 in terms of their architectural design, from DeepSeek-V3 to Kimi K2. Multi-head Latent Attention, sliding window attention, new Post- & Pre-Norm placements, NoPE, shared-expert MoEs, and more... magazine.sebastianraschka.com/p/the-big-llm-…
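Sliding window attention, one of the techniques listed above, restricts each token to the most recent `window` tokens instead of the full causal prefix. Below is a minimal pure-Python sketch of such a mask and a masked softmax. It assumes a window that counts the current token. The function names are hypothetical, and real models apply this inside optimized attention kernels, often mixing local and global attention layers.

```python
import math

def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True if query position i may attend to key position j.

    Causal: j <= i (no attending to future tokens).
    Sliding window: i - j < window (only the `window` most recent tokens,
    including the current one).
    """
    return [[j <= i and i - j < window for j in range(seq_len)]
            for i in range(seq_len)]

def masked_softmax(scores: list[float], allowed: list[bool]) -> list[float]:
    """Softmax over the allowed positions; masked positions get weight 0."""
    logits = [s if ok else -math.inf for s, ok in zip(scores, allowed)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]  # exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical example: 6 tokens, window of 3
mask = sliding_window_causal_mask(seq_len=6, window=3)
for row in mask:
    print("".join("x" if ok else "." for ok in row))

# With equal raw scores, the last token spreads its attention evenly
# over the 3 most recent positions only
weights = masked_softmax([0.0] * 6, mask[5])
print([round(w, 3) for w in weights])
```

The printed mask is a diagonal band rather than a full lower triangle, so each token's attention cost and KV-cache footprint stay bounded by `window`, regardless of sequence length.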

The new Qwen3 update takes back the benchmark crown from Kimi K2. Some highlights of how Qwen3 235B-A22B differs from Kimi K2:
- 4.25x smaller overall (235B vs. 1 trillion parameters) but with more layers (transformer blocks)
- 1.5x fewer active parameters (22B vs. 32B)
- far fewer experts in…
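The size comparisons above are simple ratios of the stated parameter counts. Here is a quick arithmetic check that uses only the numbers from the post. It treats Kimi K2's "1 trillion" as exactly 1000B, which is an approximation.

```python
# Parameter counts in billions, as stated in the post above
# (Kimi K2's "1 trillion" total is a rounded figure)
qwen3_total, qwen3_active = 235, 22
kimi_k2_total, kimi_k2_active = 1000, 32

total_ratio = kimi_k2_total / qwen3_total     # overall size ratio
active_ratio = kimi_k2_active / qwen3_active  # active-parameter ratio

# Share of parameters used per token in each MoE model
qwen3_active_share = qwen3_active / qwen3_total
kimi_k2_active_share = kimi_k2_active / kimi_k2_total

print(f"Kimi K2 is {total_ratio:.2f}x larger in total")
print(f"Kimi K2 has {active_ratio:.2f}x more active parameters")
print(f"Active share: Qwen3 {qwen3_active_share:.1%}, Kimi K2 {kimi_k2_active_share:.1%}")
```

The "1.5x" figure is rounded from roughly 1.45x. The last line shows that Qwen3 activates a larger fraction of its weights per token (about 9.4% vs. 3.2%).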

Kimi K2 🆚 Qwen-3-235B-A22B-2507: The updated Qwen3 model beats Kimi K2 on most benchmarks. The jump in the ARC-AGI score is especially impressive. An updated reasoning model is also on the way, according to Qwen researchers.

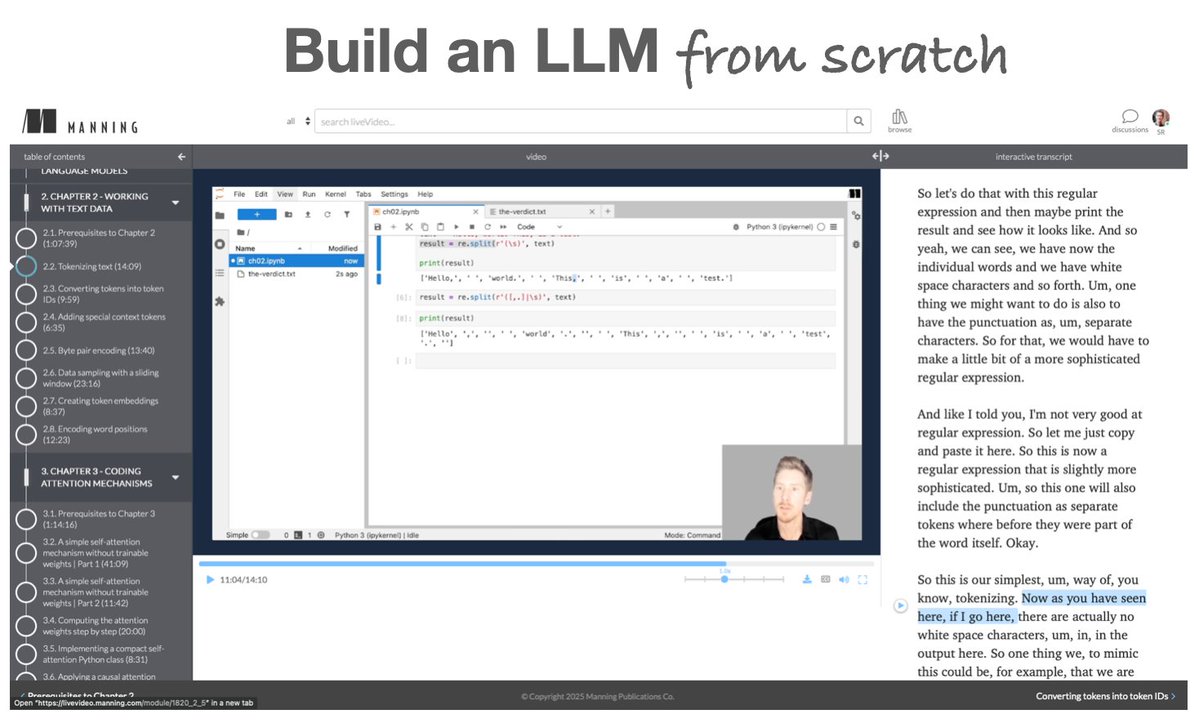

Btw, if you're learning how to build LLMs from the ground up, there's now a 17-hour companion video course for my LLMs From Scratch book on Manning: manning.com/livevideo/mast… It follows the book chapter by chapter, so it works great either as a standalone or code-along resource. It's…

Holy shit. Kimi K2 was pre-trained on 15.5T tokens using MuonClip with zero training spikes. Muon has officially scaled to the 1-trillion-parameter LLM level. Many doubted it could scale, but here we are. So proud of the Muon team: @kellerjordan0, @bozavlado, @YouJiacheng,…

Right now LLM providers serve you one-size-fits-all LLMs, which is one of the main bottlenecks.

I started coding again recently. No, not vibe coding. Actual coding. I fell in love with coding as a teenager. I’d stay up all night ‘playing on my computer’ and sleep all day in school. Coding is like a drug for me; everything in the world disappears. Hours pass like…

In the past 2 weeks, many people have asked me about joining or co-founding their startups. Not career advice, just my 2 cents: I actually think it’s a great time to start a bootstrapped startup rather than go the VC-backed route. These days, there are…

I've read a few chapters, and I would recommend this to any AI enthusiast who is willing to read more. A lot of topics are very complex, and Sebastian explains the "why," then gives papers, code, and homework for you to figure out the "what and how." 10/10

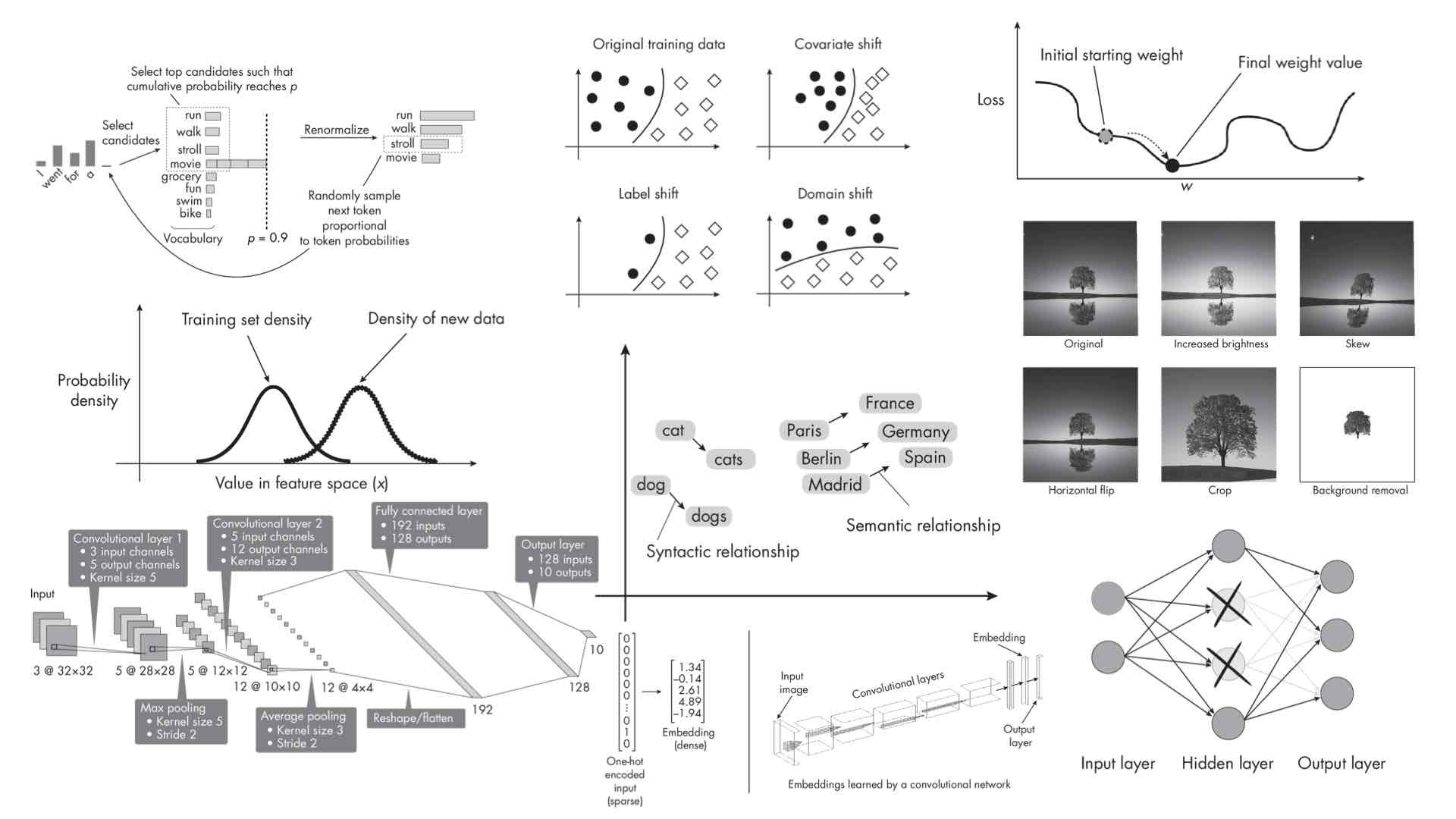

Since it's summer, and more or less internship and tech interview season, I made all 30 chapters of my Machine Learning Q and AI book freely available for the summer: sebastianraschka.com/books/ml-q-and… Hope it’s helpful! Happy reading, and good luck if you are interviewing!

Job roles in 2027: We let LLMs focus on the “how”. We focus on the “why”.

Programmer → Code Composer
Before: Writing code line by line
After: Designing logic & structuring systems

Web Dev → Experience Designer
Before: Building layouts and components
After: Defining flow,…