Xuan-Son Nguyen

@ngxson

Engineer @huggingface

Introducing: The most visually intuitive article about RoPE, 2D-RoPE, and M-RoPE that you can find on the internet 😆 Link in 🧵

ik_llama.cpp was taken down by @github. I believe this was done by mistake. Please restore the repo and his account. Thank you 🙏

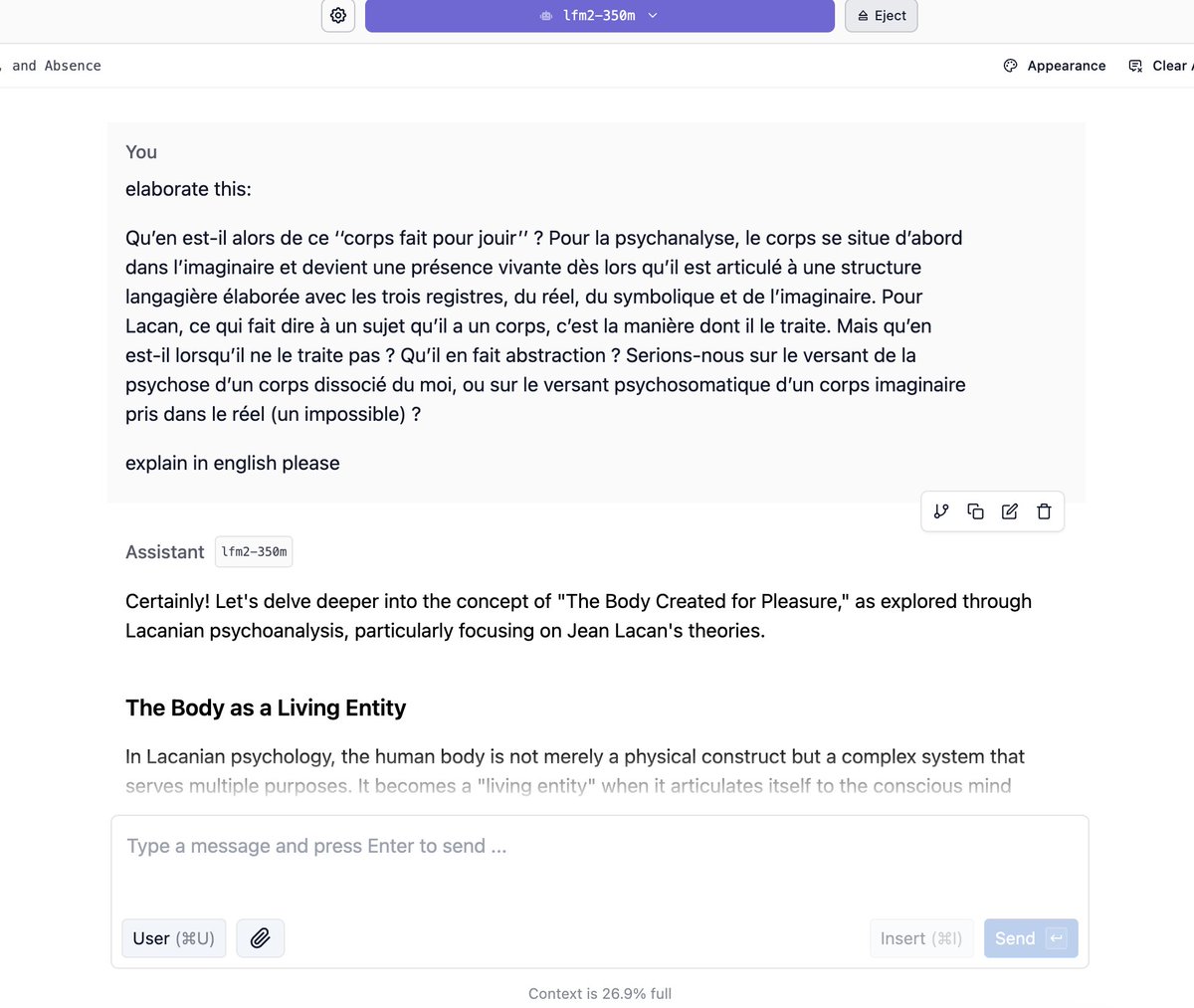

Trying out LFM2 350M from @LiquidAI_ and was mind-blown 🤯 The responses were very coherent, with fewer hallucinations than other models of the same size. Very well done!! The best part: the Q4_K_M quantization is just 230 MB, wow!

🚀 Friday afternoon funtime: quickly shipped a tool to explore different plasma boundaries 🌟 One more step towards achieving fusion! 🔗 huggingface.co/spaces/proxima…

hugging face on apple watch - gotta know what’s trending, NOW!

Congrats on the exciting release! The architecture changes in the new model are definitely interesting and will be fun to play with. Kudos for the community initiative to build on-device products. Looking forward to many people joining the challenge!

I’m so excited to announce Gemma 3n is here! 🎉
🔊 Multimodal (text/audio/image/video) understanding
🤯 Runs with as little as 2GB of RAM
🏆 First model under 10B with an @lmarena_ai score of 1300+
Available now on @huggingface, @kaggle, llama.cpp, ai.dev, and more

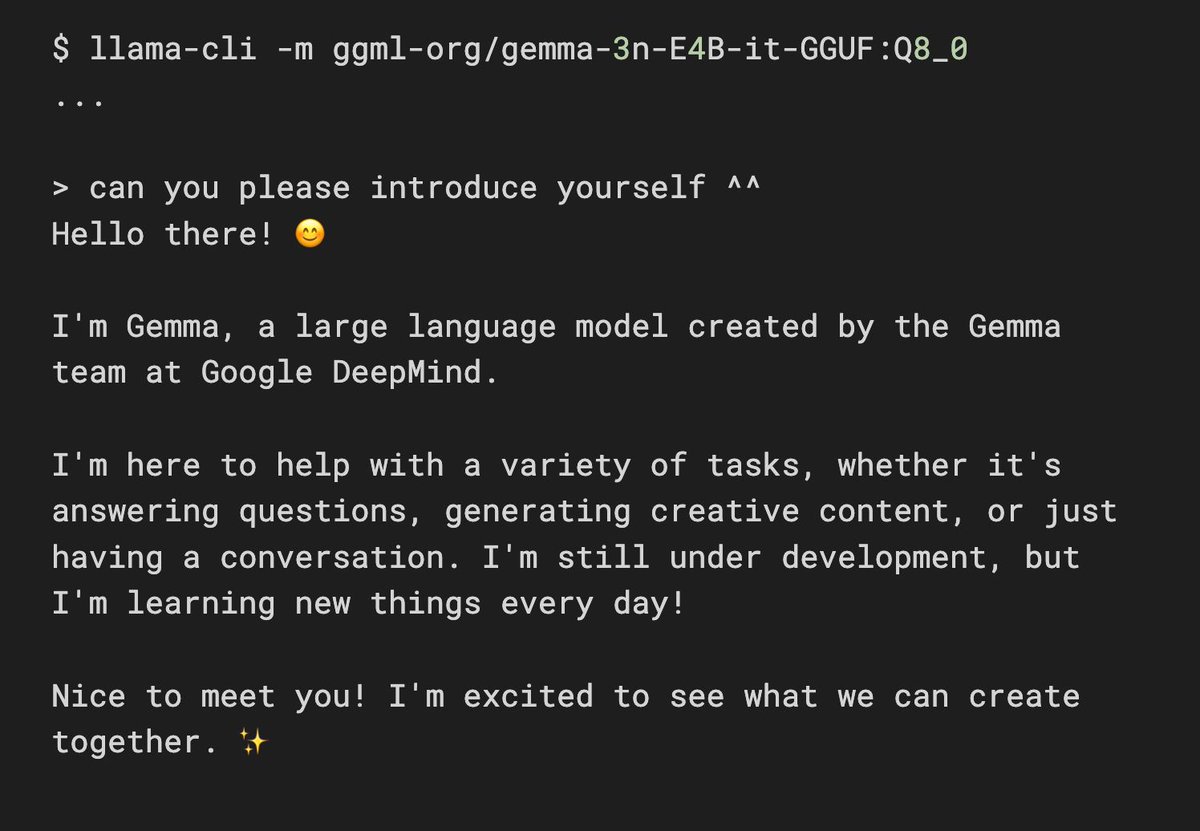

Gemma 3n has arrived in llama.cpp 👨‍🍳 🍰 Comes in 2 flavors: E2B and E4B (E means "effective/active parameters")

save r/LocalLlama

We need r/LocalLlama back :( Hopefully a good neutral moderator takes the reins asap!

IN: video fine-tuning support for @AIatMeta's V-JEPA 2 in HF transformers 🔥 It comes with:
> a fine-tuning notebook
> four models fine-tuned on the Diving48 and SSv2 datasets
> a FastRTC demo on V-JEPA2 SSv2 (see below)
We're looking forward to seeing fine-tuned V-JEPA2 models on the Hub ⏯️

Apple in design guidelines: accessibility, no overuse
Apple in reality: no accessibility, overuse