elie

@eliebakouch

Training LLMs at @huggingface | http://hf.co/science

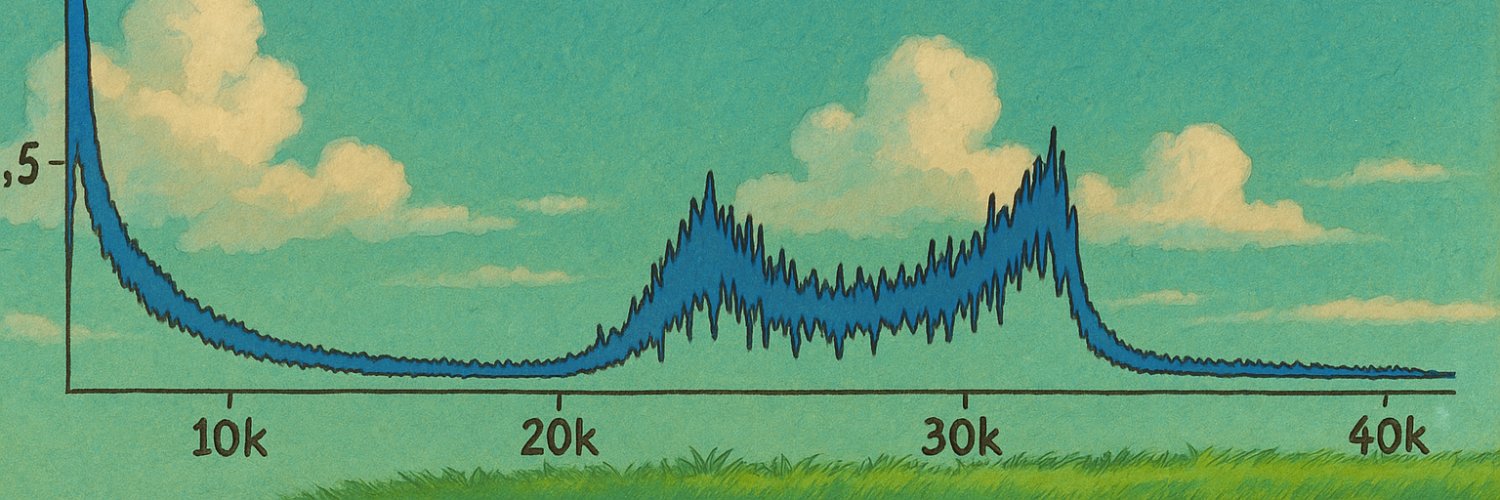

Super excited to share SmolLM3, a new strong 3B model. SmolLM3 is fully open: we share the recipe, the dataset, the training codebase and much more! > Trained on 11T tokens on 384 H100s for 220k GPU hours > Supports long context up to 128k thanks to NoPE and intra-document masking >…

There is a small group of anons that keep doing fantastic work, like @tensorqt, who everyone should follow. There are others who push emotional buttons by warping perspective to be negative-sum or zero-sum. If you are an anon and post really good useful/interesting…

I think anons are essential to the ecosystem but also something has gone awry lately and there is a need for a reset.

This is the Deep Blue moment for soccer

Watch my best shot of the day!

I’ll be presenting TorchTitan: a PyTorch native platform for training foundation models tomorrow at the ICML @ESFoMo workshop! Come and say Hi!

Looking forward to seeing everyone for ES-FoMo part three tomorrow! We'll be in East Exhibition Hall A (the big one), and we've got an exciting schedule of invited talks, orals, and posters planned for you tomorrow. Let's meet some of our great speakers! 1/

🤗🤗🤗 🤗❤️🤗 @huggingface & Cline = your LLM playground 🤗🤗🤗 You can access Kimi K2 & 6,140 (!) other open source models in Cline.

Really cool to see SmolLM3, the current state-of-the-art 3B model, land on @Azure AI. @Microsoft is a strong force in small, efficient models, as shown with the Phi family and others, and we've been enjoying partnering with them closely on this and other topics. Thanks @satyanadella…

In this report, we describe the 2025 Apple Foundation Models ("AFM"). We also introduce the new Foundation Models framework, which gives app developers direct access to the on-device AFM model machinelearning.apple.com/research/apple…

.@StanfordCRFM's Marin project has released the first fully open model in JAX. It’s an 'open lab' sharing the entire research process, including code, data, and logs, to enable reproducibility and further innovation. developers.googleblog.com/en/stanfords-m…

But actually this is the OG way of doing it, and you should stop by E-2103 to see @jxbz and Laker Newhouse whiteboard the whole paper.

Laker and I are presenting this work in an hour at ICML poster E-2103. It’s on a theoretical framework and language (modula) for optimizers that are fast (like Shampoo) and scalable (like muP). You can think of modula as Muon extended to general layer types and network topologies

In case the post was too vague, yes - this is the Hermes 3 dataset - 1 Million Samples - Created SOTA without the censorship at its time on Llama-3 series (8, 70, and 405B) - Has a ton of data for teaching system prompt adherence, roleplay, and a great mix of subjective and…

huggingface.co/datasets/NousR…

considering Muon is so popular and validated at scale, we've just decided to welcome a PR for it in PyTorch core by default. If anyone wants to take a crack at it... github.com/pytorch/pytorc…