clem 🤗

@ClementDelangue

Co-founder & CEO @HuggingFace 🤗, the open and collaborative platform for AI builders

This isn’t a goal of ours because we have plenty of money in the bank but quite excited to see that @huggingface is profitable these days, with 220 team members and most of our platform being free (like model hosting) and open-source for the community! Especially noteworthy at…

I'm not sure how HF is paying for all those TBs going in and out, but at least now we're chipping in a little bit. Thanks @huggingface for being the great library of AI models for us all 🙏

🚨 New release: MegaScience The largest & highest-quality post-training dataset for scientific reasoning is now open-sourced (1.25M QA pairs)! 📈 Trained models outperform official Instruct baselines 🔬 Covers 7+ disciplines with university-level textbook-grade QA 📄 Paper:…

Thrilled to announce our MiMo-VL series hit 100K downloads on HuggingFace last month! 🚀🚀 Incredible to see the community's enthusiasm for our VLMs. More exciting updates coming soon! 😜 huggingface.co/XiaomiMiMo/MiM…

💥 BREAKING: @Alibaba_Qwen just dropped the world's leading coding model - a 480B Qwen3 Coder with 35B active parameters and a huge context window! This non-reasoning coder is getting near SOTA at SWE-bench, 68.7 on BFCL (function calling) and 61.8 on Aider! 🧵

>>> Qwen3-Coder is here! ✅ We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to date. This 480B-parameter Mixture-of-Experts model (35B active) natively supports 256K context and scales to 1M context with extrapolation. It achieves…

🎨 We’re thrilled to officially launch Neta Lumina — the most advanced open-source anime model yet. As our 4th open-source model, Neta Lumina has achieved: 🔹 Expertly tuned for 200+ anime aesthetics including Guofeng, Furry, Pets, Scenery Shots and more niche themes 🔹…

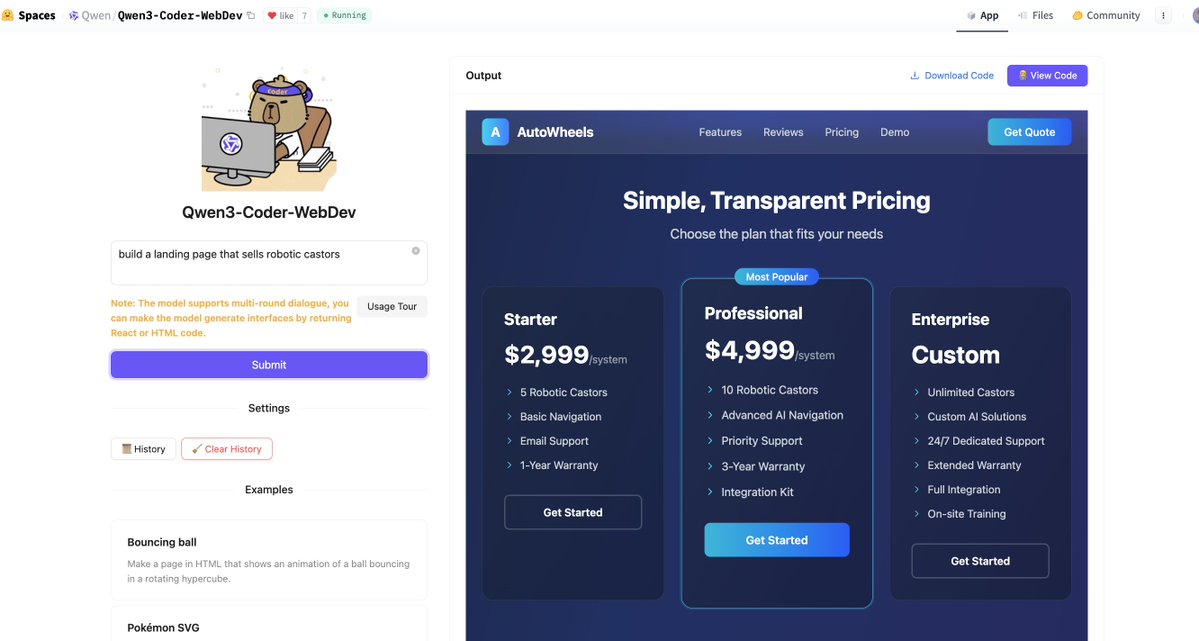

Qwen just released a 480B coding model & a space to try it out for web dev. Fun! Model: huggingface.co/Qwen/Qwen3-Cod… Space: huggingface.co/spaces/Qwen/Qw…

Wait so Alibaba Qwen has just released ANOTHER model?? Qwen3-Coder is simply one of the best coding models we've ever seen. → Still 100% open source → Up to 1M context window 🔥 → 35B active parameters → Same performance as Sonnet 4 They're releasing a CLI tool as well ↓

The @huggingface Transformers ↔️ @vllm_project integration just leveled up: Vision-Language Models are now supported out of the box! If the model is integrated into Transformers, you can now run it directly with vLLM. github.com/vllm-project/v… Great work @RTurganbay 👏

Qwen3-Coder 💻 agentic code model by @Alibaba_Qwen huggingface.co/collections/Qw… ✨ 480B total, 35B activated MoE ✨ Agentic Coding + Browser Use → Top code model performance ✨ 256K context (up to 1M via YaRN) for repo-scale understanding

As always, you'll see it on HF first! huggingface.co/Qwen

Qwen about to release a 480B MoE for coding with 1 million context! "Qwen3-Coder-480B-A35B-Instruct is a powerful coding-specialized language model excelling in code generation, tool use, and agentic tasks."

ARC-AGI-3 Agent Competition (27 days left) $10K prize pool in partnership with @huggingface Your first submission is 3 lines of code away Here are quick-start templates from @LangChainAI @AgentOpsAI and @AnthropicAI and lessons learned from devs who've tried ARC-AGI-3 🧵

A 3D-printed robotic hand for under $200? 🤯 @huggingface just made it real. They’ve open-sourced the Amazing Hand, a lightweight, fully 3D-printable robotic hand with 8 degrees of freedom, no cables, and a build cost under €200. Each of its four fingers is powered by two…

So strange how most people refer to "AGI" generically as one monolith, like they'll all be the same. There isn't going to be one AGI, or one type of AGI. There's going to be an infinite variety of flavors.