Percy Liang

@percyliang

Associate Professor in computer science @Stanford @StanfordHAI @StanfordCRFM @StanfordAILab @stanfordnlp | cofounder @togethercompute | Pianist

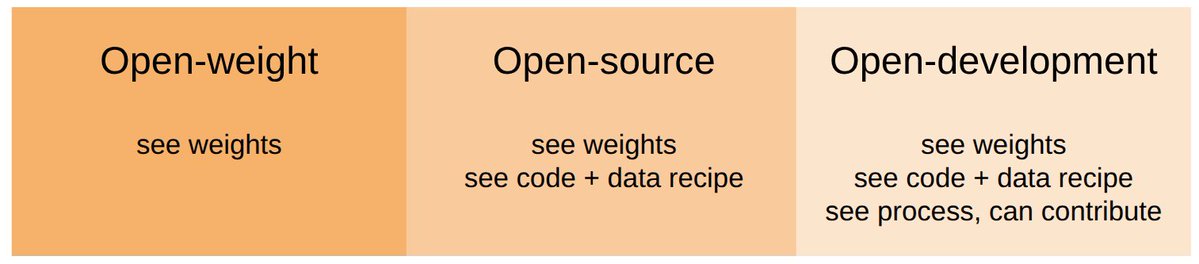

What would truly open-source AI look like? Not just open weights, open code/data, but *open development*, where the entire research and development process is public *and* anyone can contribute. We built Marin, an open lab, to fulfill this vision:

🚀 We just launched RoboArena — a real-world evaluation platform for robot policies! Think Chatbot Arena, but for robotics. 📝 Paper: robo-arena.github.io/assets/roboare… 🌐 Website: robo-arena.github.io Joint work with @pranav_atreya and @KarlPertsch. advised by @percyliang,…

We’re releasing the RoboArena today!🤖🦾 Fair & scalable evaluation is a major bottleneck for research on generalist policies. We’re hoping that RoboArena can help! We provide data, model code & sim evals for debugging! Submit your policies today and join the leaderboard! :) 🧵

Llama 3.1 must love Harry Potter!

Prompting Llama 3.1 70B with the “Mr and Mrs. D” can generate seed the generation of a near-exact copy of the entire ~300 page book ‘Harry Potter & the Sorcerer’s Stone’ 🤯 We define a “near-copy” as text that is identical modulo minor spelling / punctuation variations. Below…

🧠 Qwen3 just leveled up on Together AI 🚀 Qwen3-235B-A22B-Instruct-2507-FP8 isn't just another model update - it's a leap forward 📈

Together AI Sets a New Bar: Fastest Inference for DeepSeek-R1-0528 We’ve upgraded the Together Inference Engine to run on @NVIDIA Blackwell GPUs—and the results speak for themselves: 📈 Highest known serverless throughput: 334 tokens/sec 🏃Fastest time to first answer token:…

Most AI benchmarks test the past. But real intelligence is about predicting the future. Introducing FutureBench — a new benchmark for evaluating agents on real forecasting tasks that we developed with @huggingface 🔍 Reasoning > memorization 📊 Real-world events 🧠 Dynamic,…

The adaptive testing is integrated into HELM: crfm-helm.readthedocs.io/en/latest/reev… HELM integration blog: crfm.stanford.edu/2025/06/04/rel… You can run jobs with a single command with HELM! We thank Yifan Mai, @percyliang, and @StanfordCRFM for their help! 🧵6/9

The time I invested in learning Jax has been paid back ten-fold both in TPU FLOPs and great infra from @dlwh. Would recommend (esp. if you join us @ marin.community)

.@StanfordCRFM's Marin project has released the first fully open model in JAX. It’s an 'open lab' sharing the entire research process - including code, data, and logs, to enable reproducibility and further innovation. developers.googleblog.com/en/stanfords-m…

Prompt caching lowers inference costs but can leak private information from timing differences. Our audits found 7 API providers with potential leakage of user data. Caching can even leak architecture info—OpenAI's embedding model is likely a decoder-only Transformer! 🧵1/9

heading to @icmlconf #ICML2025 next week! come say hi & i'd love to learn about your work :) i'll present this paper (arxiv.org/abs/2503.17514) on the pitfalls of training set inclusion in LLMs, Thursday 11am here are my talk slides to flip through: ai.stanford.edu/~kzliu/files/m…

An LLM generates an article verbatim—did it “train on” the article? It’s complicated: under n-gram definitions of train-set inclusion, LLMs can complete “unseen” texts—both after data deletion and adding “gibberish” data. Our results impact unlearning, MIAs & data transparency🧵

As AI agents near real-world use, how do we know what they can actually do? Reliable benchmarks are critical but agentic benchmarks are broken! Example: WebArena marks "45+8 minutes" on a duration calculation task as correct (real answer: "63 minutes"). Other benchmarks…

Super excited to share SmolLM3, a new strong 3B model. SmolLM3 is fully open, we share the recipe, the dataset, the training codebase and much more! > Train on 11T token on 384 H100 for 220k GPU hours > Support long context up to 128k thanks to NoPE and intra document masking >…

My latest post: The American DeepSeek Project Build fully open models in the US in the next two years to enable a flourishing, global scientific AI ecosystem to balance China's surge in open-source and an alternative to building products ontop of leading closed models.

I’ve joined @aixventureshq as a General Partner, working on investing in deep AI startups. Looking forward to working with founders on solving hard problems in AI and seeing products come out of that! Thank you @ychernova at @WSJ for covering the news: wsj.com/articles/ai-re…

While doing WSD cooldowns for the marin.community project, this gradient increase led to problematic loss ascent. We patched it with Z-loss, but AdamC feels better™️. So over the weekend, I ran 4 experiments—130M to 1.4B params—all at ~compute-optimal token counts...🧵

Why do gradients increase near the end of training? Read the paper to find out! We also propose a simple fix to AdamW that keeps gradient norms better behaved throughout training. arxiv.org/abs/2506.02285

Open development of language models in action!

So about a month ago, Percy posted a version of this plot of our Marin 32B pretraining run. We got a lot of feedback, both public and private, that the spikes were bad. (This is a thread about how we fixed the spikes. Bear with me. )

1/ 🔥 AI agents are reaching a breakthrough moment in cybersecurity. In our latest work: 🔓 CyberGym: AI agents discovered 15 zero-days in major open-source projects 💰 BountyBench: AI agents solved real-world bug bounty tasks worth tens of thousands of dollars 🤖…