Nathan Lambert

@natolambert

Figuring out AI @allen_ai, open models, RLHF, fine-tuning, etc. Contact via email. Writes @interconnectsai. Wrote The RLHF Book. Mountain runner.

My latest post: The American DeepSeek Project. Build fully open models in the US in the next two years to enable a flourishing, global scientific AI ecosystem, to balance China's surge in open-source, and to offer an alternative to building products on top of leading closed models.

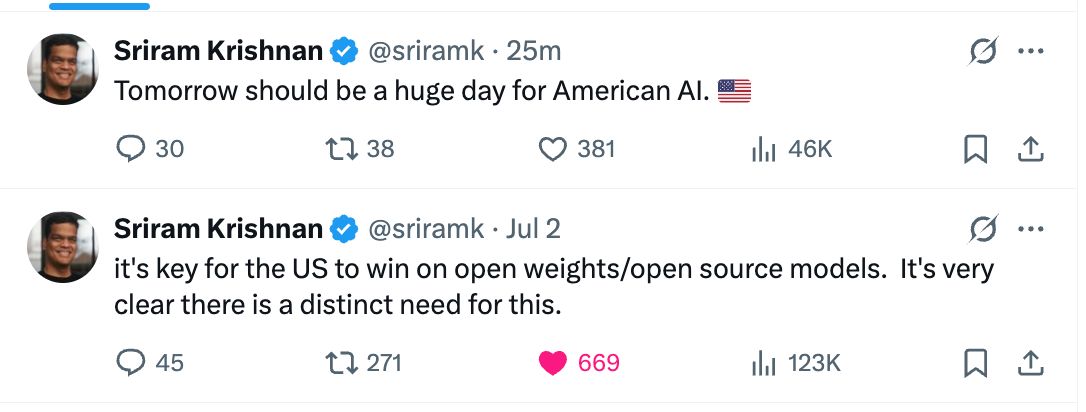

but really these being the last two tweets from a senior AI advisor gives me hope for at least some part of the AI puzzle (white house AI action plan drops tomorrow)

I think my dog prefers chewing on premium usb c cables over standard cables, gonna need to increase my IT budget

Writing every week as a researcher gives me:
1. Better taste in which projects to choose
2. Better ability to steer projects toward higher impact
3. Freedom to make some mental time to just think about something else, even if a project is stuck
Highly recommend.

Yes. Writing is not a second thing that happens after thinking. The act of writing is an act of thinking. Writing *is* thinking. Students, academics, and anyone else who outsources their writing to LLMs will find their screens full of words and their minds emptied of thought.

I gain a lot of mental clarity and peace from not bringing my phone:
1. Into the bedroom for sleep
2. To meals, coffee, or a snack with friends close to home/work
Both are very easy and worth trying.

for your entertainment :)

🆕 Releasing our entire RL + Reasoning track! Featuring: • @willccbb, Prime Intellect • @GregKamradt, Arc Prize • @natolambert, AI2/Interconnects • @corbtt, OpenPipe • @achowdhery, Reflection • @ryanmart3n, Bespoke • @ChrSzegedy, Morph with a special 3-hour workshop from:…

Claude Code is such a joy. It has a remarkable level of product fit.

Counterpoint: Claude Code is by far the best coding tool I’ve ever used and is notably better than everything else, despite being a thin wrapper around a model.

What's the next wall people are claiming for AI? First we ran out of data, then RL wouldn't generalize outside of math/code, what's next? Only real wall seems to be compute availability.

The point of this is to avoid psyops, not to take away from an obvious, major technical accomplishment. C'mon fam, I'm not an AI hater. So many haters in the replies.

Not falling for OpenAI’s hype-vague posting about the new IMO gold model with “general purpose RL” and whatever else “breakthrough.” Google also got IMO gold (harder than mastering AIME), but remember, simple ideas scale best.