Ahmed Ahmed

@AhmedSQRD

CS PhD @Stanford - Funding @KnightHennessy @NSF - 🇸🇩 - tweets include history & politics

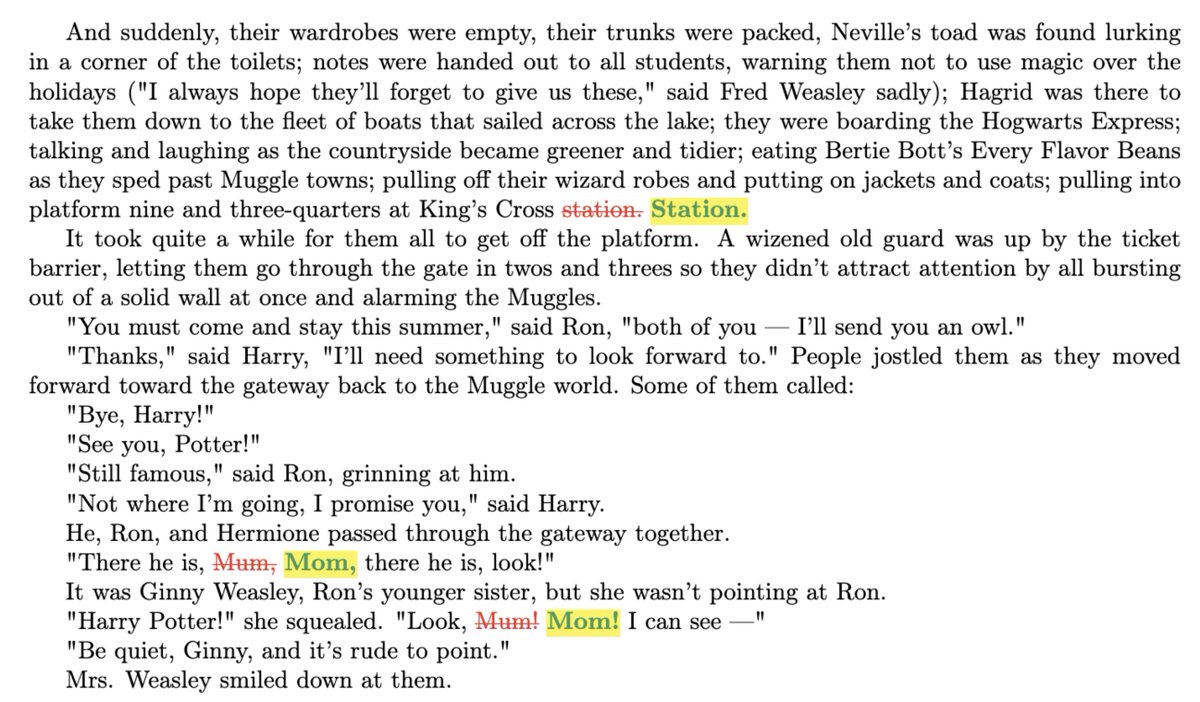

Prompting Llama 3.1 70B with the prompt “Mr and Mrs. D” can seed the generation of a near-exact copy of the entire ~300-page book ‘Harry Potter & the Sorcerer’s Stone’ 🤯 We define a “near-copy” as text that is identical modulo minor spelling/punctuation variations. Below…
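
To make the “near-copy” definition concrete, here is a minimal sketch of one way such a check could be written. It is not the authors' method: the normalization (lowercasing, stripping punctuation, collapsing whitespace) and the word-level similarity threshold of 0.98 are assumptions chosen for illustration, and `normalize` and `is_near_copy` are hypothetical names.

```python
import difflib
import re
import unicodedata


def normalize(text: str) -> str:
    """Assumed notion of 'minor variation': ignore case, punctuation, and spacing."""
    text = unicodedata.normalize("NFKC", text).lower()
    text = re.sub(r"[^\w\s]", "", text)  # strip punctuation (incl. curly quotes)
    return " ".join(text.split())        # collapse runs of whitespace


def is_near_copy(generated: str, reference: str, threshold: float = 0.98) -> bool:
    """Word-level similarity after normalization; the threshold is a hypothetical choice."""
    a = normalize(generated).split()
    b = normalize(reference).split()
    # autojunk=False so frequent words like "the" are not silently ignored
    ratio = difflib.SequenceMatcher(None, a, b, autojunk=False).ratio()
    return ratio >= threshold


ref = "The quick brown fox jumps over the lazy dog."
print(is_near_copy("The quick brown fox, jumps over the lazy dog!", ref))  # punctuation only
print(is_near_copy("The quick brwn fox jumps over the lazy dog.", ref))    # one misspelled word
```

On a one-sentence example a single misspelled word already drops the ratio below 0.98, whereas over a book-length text a handful of misspellings barely moves it, so in practice the threshold would need to be tuned to the length of the compared spans.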

read more about our work! Thanks to Google TRC for sponsoring the compute 🙏

.@StanfordCRFM's Marin project has released the first fully open model in JAX. It’s an 'open lab' sharing the entire research process - including code, data, and logs, to enable reproducibility and further innovation. developers.googleblog.com/en/stanfords-m…

I didn't want to post on Grok safety since I work at a competitor, but it's not about competition. I appreciate the scientists and engineers at @xai but the way safety was handled is completely irresponsible. Thread below.

Come visit @SallyZ27079 and me to talk more about open-weight model provenance! This is a one-time special: next Thursday, 11 am, at @icmlconf East Exhibition #E-2900 #ICML2025 (coffee bribes may also work)

🧵 1/ The rise of open-weight LLMs and platforms like HuggingFace raises interesting questions about the relationships between such models. Given a pair of models (e.g. Llama 1 vs Vicuna or Llama 3 vs Llama 2), what can we say about whether they were trained independently?

Update: @cluely filed a DMCA takedown for my tweet about their system prompt, alleging that it contained "proprietary source code". Making legal threats against security researchers is not a good look, and I encourage Cluely to reflect on this and open doors to researchers. 🧵

I reverse engineered @cluely – and their desktop source code exposes their entire system prompts and models used. What's inside? 🧵

Even worse: autoregressive generation is not deterministic! Say I have a prefix P and a suffix S composed of N tokens that can be broken into two equal parts S1 and S2 such that S = S1 + S2. If under a fixed seed we expect S = model.generate(P, num_tokens=N), we should also…
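
The consistency property described above can be written as a small check: generating N tokens in one call should equal generating N/2 tokens, then continuing from P + S1 for the remaining tokens. This is a minimal sketch under stated assumptions: `generate(prefix, num_tokens)` is a hypothetical interface returning only the new tokens, `toy_greedy_generate` is a deterministic stand-in for a model (not a real LLM), and `flaky_generate` is a purely illustrative stand-in for call-dependent nondeterminism, not a model of how any real inference engine behaves.

```python
import itertools
from typing import Callable, List

Token = int
# Hypothetical interface: generate(prefix, num_tokens) -> list of newly generated tokens.
GenerateFn = Callable[[List[Token], int], List[Token]]


def split_consistent(generate: GenerateFn, prefix: List[Token], n: int) -> bool:
    """True if generate(P, N) == generate(P, N//2) + generate(P + S1, N - N//2)."""
    s = generate(prefix, n)
    half = n // 2
    s1 = generate(prefix, half)
    s2 = generate(prefix + s1, n - half)
    return s == s1 + s2


def toy_greedy_generate(prefix: List[Token], num_tokens: int) -> List[Token]:
    """Deterministic toy 'model': each next token is a fixed function of the context."""
    context = list(prefix)
    out: List[Token] = []
    for _ in range(num_tokens):
        nxt = (sum(context) * 31 + len(context)) % 50  # toy 'argmax' over a 50-token vocab
        context.append(nxt)
        out.append(nxt)
    return out


_calls = itertools.count()


def flaky_generate(prefix: List[Token], num_tokens: int) -> List[Token]:
    """Illustrative only: output shifts depending on how many calls came before."""
    offset = next(_calls) % 2
    return [(t + offset) % 50 for t in toy_greedy_generate(prefix, num_tokens)]


print(split_consistent(toy_greedy_generate, [1, 2, 3], 8))  # deterministic: consistent
print(split_consistent(flaky_generate, [1, 2, 3], 8))       # call-dependent: inconsistent
```

Because the toy model's output depends only on the context, splitting the generation cannot change the result; any backend whose output also depends on something outside the context fails the same check.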

i learned about this in a recent project and had to switch back from vLLM to HF (and eat like a 5x slowdown) just so my results are consistent. please spread and help a fellow researcher out 🙏 e.g. github.com/vllm-project/v… github.com/vllm-project/v… github.com/vllm-project/v… ...

Hi @USAMBTurkiye, welcome to Türkiye! I'm a Computer Science PhD student at Stanford University, currently trying to renew my F-1 visa through the embassy in Ankara. I submitted my postal application on May 5 (under the old system) and have been waiting since then to receive the

🐐

My PhD materials are now available! Dissertation: arxiv.org/abs/2506.23123 Slides: drive.google.com/file/d/13N2FRW… Folks should read the acknowledgements since so many people have been so important to me along this journey!

>vaguepost about RL >4000 likes >write an actual thoughtful thread about the future of RL >4 likes god i hate this site

Recently had a good chat with @tamaybes. He thinks we aren’t yet in the GPT-3 era of RL and as it scales, cross-task OOD generalization will emerge. It’s difficult to empirically study this at current scale, but let’s take it as true—what does this mean for custom RL plays? 🧵

trying to imagine the median WSJ reader (~60 yr old upper-middle class men) as they read to learn about the shoggoth metaphor

Current AI “alignment” is just a mask. Our findings in @WSJ explore the limitations of today’s alignment techniques and what’s needed to get AI right 🧵

awesome to see this inch forward day by day and get a sense of what the frontier labs go through

So about a month ago, Percy posted a version of this plot of our Marin 32B pretraining run. We got a lot of feedback, both public and private, that the spikes were bad. (This is a thread about how we fixed the spikes. Bear with me.)