Jiao Sun

@sunjiao123sun_

Senior Research Scientist at Google DeepMind \n\n NLP PhD @ USC, Amazon ML Fellow \n\n ex-{Google Brain, Alexa AI} nlper, IIIS Tsinghua-Ren

🚨 Agent Wars Incoming? What happens when both the buyer and seller use AI agents to make decisions? Turns out: 💡 If your agent’s dumber, you pay more. Stronger reasoning models dominate the game. Welcome to the future of asymmetric AI! 💸🤖💸 #AIagents #LLM #FutureOfMarkets

AI Shopping/Sales Agents sound very cool! But what if both the buyer and seller use AI agents? Our recent study found that stronger agents can exploit weaker ones to get a better deal, and delegating negotiation to AI agents might lead to economic losses. arxiv.org/abs/2506.00073…

Jiaxin is simply awesome!! Go work with him on fun agent stuff!

Life Update: I will join @UTiSchool as an Assistant Professor in Fall 2026 and will continue my work on LLM, HCI, and Computational Social Science. I'm building a new lab on Human-Centered AI Systems and will be hiring PhD students in the coming cycle!

Wow. As an AC/reviewer, it's disheartening to see how low-effort reviews can unfairly hurt students and erode the community we’ve worked so hard to build. @ReviewAcl — can you help track down this reviewer/AC and investigate? Before it’s too late!

@ReviewAcl @emnlpmeeting Urgent help needed. acFZ: initial score 3 🧊 Complete silence during discussion. ⏰ 4am PST, 9 min before deadline: quietly drops to 2. with “Thanks for the rebuttal. I have updated the score.” ⚠️ No explanation. No notice. No chance to respond. (0/n)

I really enjoyed our run in Cubbon Park, @divy93t! Plus ending with fresh coconut was awesome!

Thrilled to share our new reasoning model, Polaris✨! The 4B version achieves a score of 79.4 on AIME 2025, surpassing Claude 4 Opus (75.5) We’re releasing the full RL recipe, data, and weights 🔓 — see all the details below

# 🚨 4B open-recipe model beats Claude-4-Opus 🔓 100% open data, recipe, model weights and code. Introducing Polaris✨--a post-training recipe for scaling RL on advanced reasoning models. 🥳 Check out how we boost open-recipe reasoning models to incredible performance levels…

LLMs trained to memorize new facts can’t use those facts well.🤔 We apply a hypernetwork to ✏️edit✏️ the gradients for fact propagation, improving accuracy by 2x on a challenging subset of RippleEdit!💡 Our approach, PropMEND, extends MEND with a new objective for propagation.

✨ New paper ✨ 🚨 Scaling test-time compute can lead to inverse or flattened scaling!! We introduce SealQA, a new challenge benchmark w/ questions that trigger conflicting, ambiguous, or unhelpful web search results. Key takeaways: ➡️ Frontier LLMs struggle on Seal-0 (SealQA’s…

🌏How culturally safe are large vision-language models? 👉LVLMs often miss the mark. We introduce CROSS, a benchmark of 1,284 image-query pairs across 16 countries & 14 languages, revealing how LVLMs violate cultural norms in context. ⚖️ Evaluation via CROSS-EVAL 🧨 Safety…

I’ve seen many questions about how to choose ARR tracks for submissions aim at the new tracks at #emnlp2025. We actually wrote a blogpost along with the 2nd CFP exactly to address this: 2025.emnlp.org/track-changes/ Please help us share it widely! Good luck with your emnlp submissions!

Happy to see #EMNLP2025 introducing new tracks on AI/LLM Agents, Code Models, Safety & Alignment, Reasoning, LLM Efficiency, and more. Big thanks to the organizers for making this happen! @emnlpmeeting #NLProc Perfect venue for agentic research and language technologies.…

Today we’re sharing an early look at our latest Gemini update for I/O! Introducing the updated Gemini 2.5 Pro (I/O edition), which ranks #1 on WebDev Arena and surpasses our previous 2.5 Pro model by +147 Elo points. 🏆 blog.google/products/gemin…

Was part of the force driving Gemini #1 on WebDev Arena. We are still cooking for better things to come! 👩🍳🤘

🚨Breaking: @GoogleDeepMind’s latest Gemini-2.5-Pro is now ranked #1 across all LMArena leaderboards 🏆 Highlights: - #1 in all text arenas (Coding, Style Control, Creative Writing, etc) - #1 on the Vision leaderboard with a ~70 pts lead! - #1 on WebDev Arena, surpassing Claude…

We had such a fun tutorial on Creative Planning at NAACL 2025!! I think it’s the first tutorial in ACL history to include LIVE musical performances from @VioletNPeng and @Songyan_Silas_Z!!

Come chat with DQ and me about text-image alignment in front of our poster! Tomorrow 4pm MT at hall 3!

#NAACL2025 Checkout DreamSync's poster tomorrow (May 1) at Hall 3, 4:00-5:30pm. Feel free to stop by to chat about multimodality, evaluation, and interpretability. We are also planning an interpretability lunch tomorrow. Find it on whova and join!

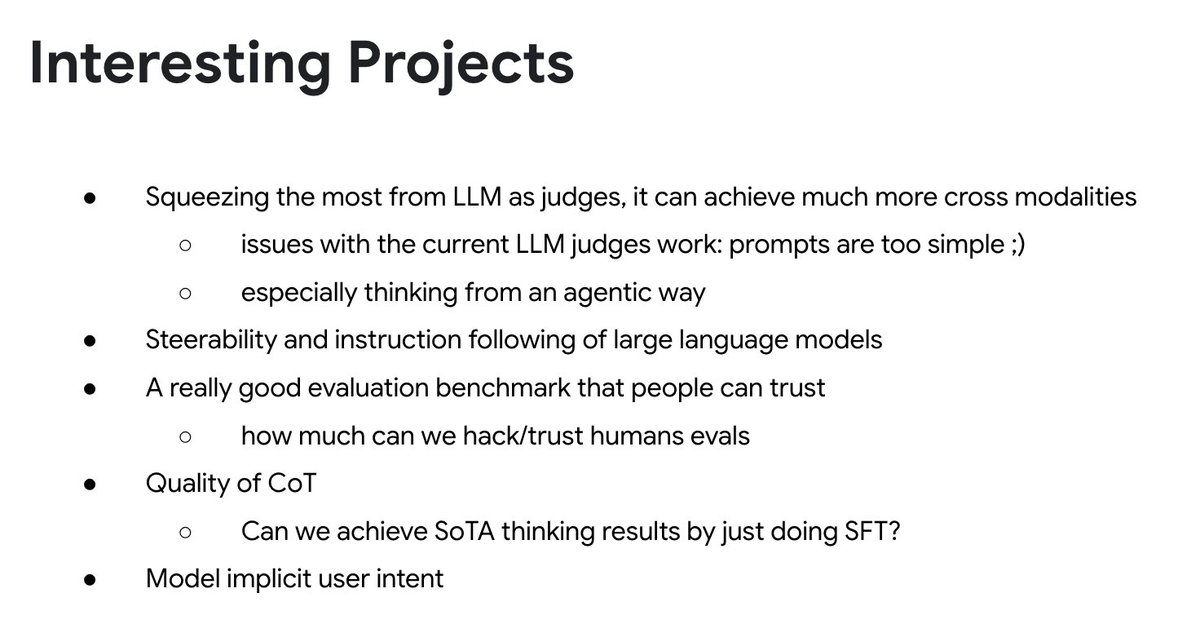

Today I gave a talk at USC reading group about “The Role of Evaluation in RL era” and thought the following topics could be interesting to work on at both school and industry. Hope it helps! Happy to talk more about these at #NAACL2025 in person if you are also coming! 🤘

You don’t want to miss Violet!!

Excited to speak more about AI creativity at SSNLP today in Singapore ssnlp-website.github.io/ssnlp25/ Also look forward to hear what Qwen team has to say about their latest breakthrough! Friends in Singapore: let’s catch up!

TLDR: Low Price, High Performance! Proud to be part of Gemini team!! 👑

Gemini 2.5 Flash just dropped. ⚡ As a hybrid reasoning model, you can control how much it ‘thinks’ depending on your 💰 - making it ideal for tasks like building chat apps, extracting data and more. Try an early version in @Google AI Studio → ai.dev

I expected LLMs to have more faithful reasoning as they gained more from reasoning. Bigger capability gains suggested to me that models would use the stated reasoning more. Sadly, we only saw small gains to faithfulness from reasoning training, which also quickly plateau-ed.

New Anthropic research: Do reasoning models accurately verbalize their reasoning? Our new paper shows they don't. This casts doubt on whether monitoring chains-of-thought (CoT) will be enough to reliably catch safety issues.

Interested in test time / inference scaling laws? Then check out our newest preprint!! 📉 How Do Large Language Monkeys Get Their Power (Laws)? 📉 arxiv.org/abs/2502.17578 w/ @JoshuaK92829 @sanmikoyejo @Azaliamirh @jplhughes @jordanjuravsky @sprice354_ @aengus_lynch1…

Gemini 2.5 Pro is SOTA on pretty much everything

Wow we just ran Gemini 2.5 Pro on our evals and it got a new state of the art. Congrats to the Gemini team! Sharing preliminary results here and working on bringing it into Devin:

🚨 New paper 🚨 Excited to share my first paper w/ my PhD students!! We find that advanced LLM capabilities conferred by instruction or alignment tuning (e.g., SFT, RLHF, DPO, GRPO) can be encoded into model diff vectors (à la task vectors) and transferred across model…