Rylan Schaeffer

@RylanSchaeffer

CS PhD Student at Stanford Trustworthy AI Research with @sanmikoyejo. Prev interned/worked @ Meta, Google, MIT, Harvard, Uber, UCL, UC Davis

I'll be at @icmlconf #ICML2025 next week to present three papers - reach out if you want to chat about generative AI, scaling laws, synthetic data or any other AI topic! #1 How Do Large Language Monkeys Get Their Power (Laws)? x.com/RylanSchaeffer…

Interested in test time / inference scaling laws? Then check out our newest preprint!! 📉 How Do Large Language Monkeys Get Their Power (Laws)? 📉 arxiv.org/abs/2502.17578 w/ @JoshuaK92829 @sanmikoyejo @Azaliamirh @jplhughes @jordanjuravsky @sprice354_ @aengus_lynch1…

Advanced version of Gemini Deep Think (announced at #GoogleIO) using parallel inference time computation achieved gold-medal performance at IMO, solving 5/6 problems with rigorous proofs as verified by official IMO judges! Congrats to all involved! deepmind.google/discover/blog/…

Come to Convention Center West, Room 208-209 (2nd floor) to learn about optimal data selection using compression like gzip! tl;dr: you can learn much faster if you use gzip compression distances to select data for a given task! DM me if you are interested or want to use the code!

🚨 What’s the best way to select data for fine-tuning LLMs effectively? 📢Introducing ZIP-FIT, a compression-based data selection framework that outperforms leading baselines, achieving up to 85% faster convergence in cross-entropy loss and selecting data up to 65% faster. 🧵1/8

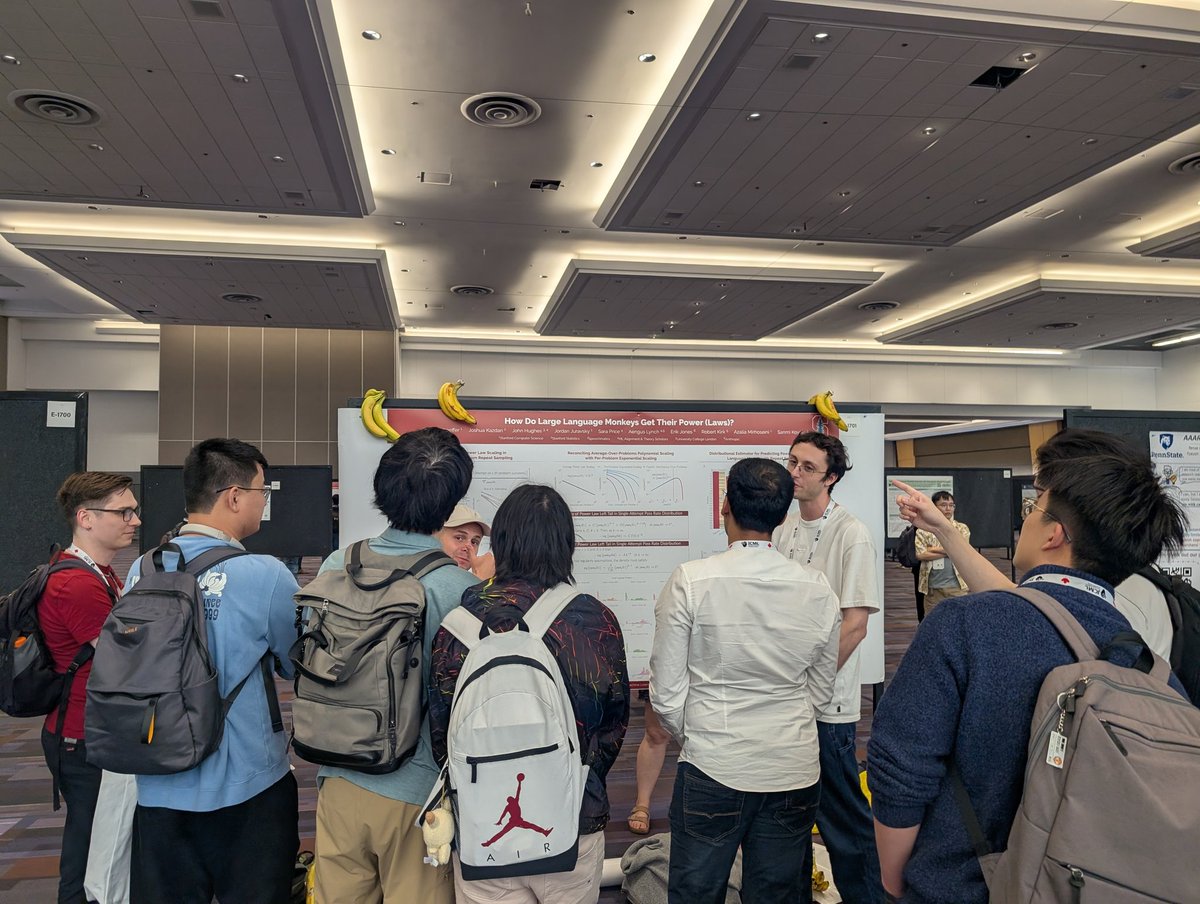
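The posts above don't spell out ZIP-FIT's exact scoring rule, so the following is only a rough illustration of the general idea of "using gzip compression distances to select data given a task." It ranks candidate fine-tuning examples by their mean normalized compression distance (NCD) to a set of target-task examples, using Python's standard `gzip` module. The NCD formula choice, the mean-over-targets score, and the helper names `ncd` and `select_by_compression` are assumptions for illustration, not ZIP-FIT's actual implementation.

```python
import gzip


def compressed_len(text: str) -> int:
    """Length in bytes of the gzip-compressed UTF-8 encoding of `text`."""
    return len(gzip.compress(text.encode("utf-8")))


def ncd(x: str, y: str) -> float:
    """Normalized compression distance (assumed scoring, not necessarily ZIP-FIT's).

    If x and y share structure, compressing them together costs little more
    than compressing the larger one alone, so the distance is small.
    """
    cx, cy = compressed_len(x), compressed_len(y)
    cxy = compressed_len(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


def select_by_compression(candidates: list[str], target: list[str], k: int) -> list[str]:
    """Hypothetical helper: return the k candidates with the lowest mean NCD to the target examples."""

    def score(candidate: str) -> float:
        # Average distance from this candidate to every target-task example.
        return sum(ncd(candidate, t) for t in target) / len(target)

    return sorted(candidates, key=score)[:k]


if __name__ == "__main__":
    target = ["SELECT id, name FROM users WHERE age > 30 ORDER BY name;"]
    candidates = [
        "Bananas are a good source of potassium and taste great.",
        "SELECT id, name FROM orders WHERE total > 30 ORDER BY id;",
    ]
    print(select_by_compression(candidates, target, k=1))
```

Compression-based distances need no embedding model or GPU, which is one reason this style of selection can be cheap; the trade-off is that gzip only sees surface-level byte patterns, and for very short strings the fixed gzip header overhead makes the distances noisy.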

Large Language Monkeys are scaling and they are hungry! 🍌 #ICML2025


If you want to learn about the power (laws) of large language monkeys (and get a free banana 🍌), come to our poster at #ICML2025 !!

One of two claims is true: 1) We fully automated AI/ML research, published one paper and then did nothing else with the technology, or 2) People lie on Twitter. Come to our poster E2809 at #ICML2025 now to find out which!!!

We refused to cite the paper due to severe misconduct of the authors of that paper: plagiarism of our own prior work, predominantly AI-generated content (ya, the authors plugged our paper into an LLM and generated another paper), IRB violations, etc. Revealed during a long…

I'm excited to announce that @pfau is wrong - people do still care about non language modeling ML research! #ICML2025

Post-AGI employment opportunity: AI Chain of Thought Inspector?

If you don't train your CoTs to look nice, you could get some safety from monitoring them. This seems good to do! But I'm skeptical this will work reliably enough to be load-bearing in a safety case. Plus as RL is scaled up, I expect CoTs to become less and less legible.

#ICML2025 hot take: A famous researcher (redacted) said they feel like AI safety / existential risk from AI is the most important challenge of our time, and despite many researchers being well intentioned, this person feels like the field has produced no deliverables, has no idea…

An #ICML2025 story from yesterday: @BrandoHablando and I made a new friend who told us that she saw me give a talk at NeurIPS 2023 and then messaged Brando to chat, without realizing that Brando and I are different people 😂


#Vancouver is beautiful! Excited for #ICML2025! Share food recommendations!

On my way to #ICML2025! What food recommendations do people have for #Vancouver? A friend jokingly said Oshinori (oshinori.com) changed his life.

We are raising $20k ($800 per person) to cover their travel from either Nigeria or Ghana to Kigali, Rwanda, in August, plus lodging there. Donate what you can here! donorbox.org/mlc-nigeria-de…

That PNAS paper and its sister arxiv.org/abs/1312.6120 are the reasons why I first fell in love with ML & NeuroAI research. Beautiful, elegant research.

btw, 1) we proved conditions for a simple version of the Platonic Representation Hypothesis for two layer linear networks back in 2019 in our @PNASNews paper: A mathematical theory of semantic development in deep nets (Fig. 11): pnas.org/doi/10.1073/pn… 2) we also showed evidence…

✨ Test-Time Scaling for Robotics ✨ Excited to release 🤖 RoboMonkey, which characterizes test-time scaling laws for Vision-Language-Action (VLA) models and introduces a framework that significantly improves the generalization and robustness of VLAs! 🧵(1 / N) 🌐 Website:…