Hui Chen

@chchenhui

Researcher @NUSComputing; Prev: @NTUsg, Ph.D. @sutdsg, B.Eng. in CS @ZJU_china.

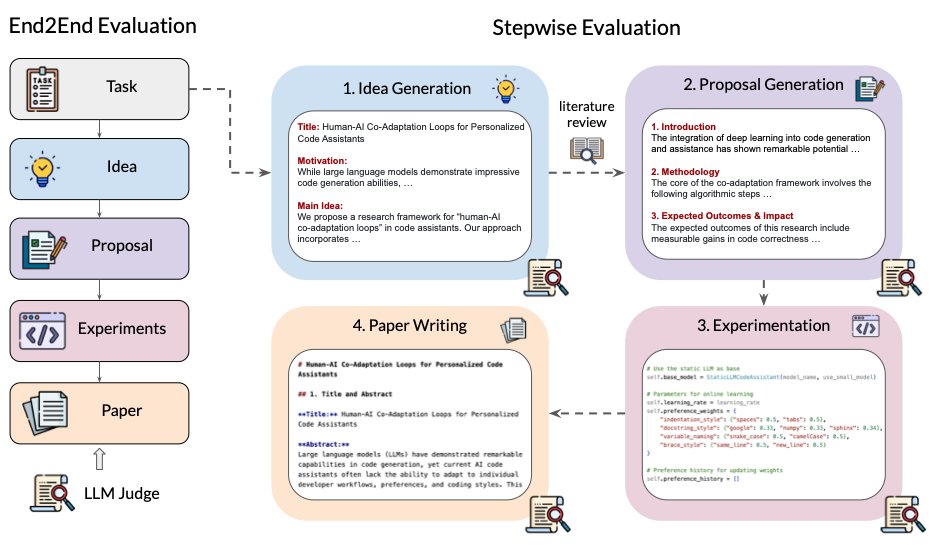

🤖How well can AI agents conduct open-ended machine learning research? 🚀Excited to share our latest #AI4Research benchmark, MLR-Bench, for evaluating AI agents on open-ended machine learning research!!📈 arxiv.org/pdf/2505.19955 1/

New blog post about asymmetry of verification and "verifier's law": jasonwei.net/blog/asymmetry… Asymmetry of verification, the idea that some tasks are much easier to verify than to solve, is becoming an important idea now that we have RL that finally works generally. Great examples of…

This year, there have been various pieces of evidence that AI agents are starting to be able to conduct scientific research and produce papers end-to-end, at a level where some of these generated papers were already accepted by top-tier conferences/workshops. Intology’s…

Giving your models more time to think before prediction, e.g. via smart decoding, chain-of-thought reasoning, latent thoughts, etc., turns out to be quite effective for unblocking the next level of intelligence. New post is here :) "Why we think": lilianweng.github.io/posts/2025-05-…

I have the same feeling. Claude Code might be a better option since its full auto mode already has access to the internet.

Until Codex can access the web and install things in its own environment it'll be pretty meh. Fun UX, but feels pretty lost when I try to use it. Like the model is pushing a really heavy object, but can't budge it at all yet. Obvious that it'll work in the future.

Introducing AlphaEvolve: a Gemini-powered coding agent for algorithm discovery. It’s able to: 🔘 Design faster matrix multiplication algorithms 🔘 Find new solutions to open math problems 🔘 Make data centers, chip design and AI training more efficient across @Google. 🧵

New benchmark for deep research agents! An agent that is creative and persistent should be able to find any piece of information on the open web, even if it requires browsing hundreds of webpages. Models that exercise this ability are like a frictionless interface to the…

🚀 DeepSeek-R1 is here! ⚡ Performance on par with OpenAI-o1 📖 Fully open-source model & technical report 🏆 MIT licensed: Distill & commercialize freely! 🌐 Website & API are live now! Try DeepThink at chat.deepseek.com today! 🐋 1/n

I just read the "Thinking LLMs: General Instruction Following With Thought Generation" paper (I), which offers a simple yet effective way to improve the response quality of instruction-finetuned LLMs. Think of it as a very simple alternative to OpenAI's o1 model, which…

LLaMA-3.2-Instruct-Vision is on Vision Arena now! 🤗huggingface.co/spaces/WildVis…

Explore real-world failure cases and see how top VLMs like GPT-4o and Yi-VL-Plus handle visual challenges like object orientation. Test it yourself at WildVision Arena in the Failure Case Examples tab. 🔗WildVision-Arena: huggingface.co/spaces/WildVis… Thanks to @XingyuFu2 and other…

🔗 Thoughts on Research Impact in AI. Grad students often ask: how do I do research that makes a difference in the current, crowded AI space? This is a blog post that summarizes my perspective in six guidelines for making research impact via open-source artifacts. Link below.

🚨 Introducing WildVision's datasets for research on vision-language models (VLMs), ideal for SFT, RLHF, and Eval. One of the first large-scale VLM alignment data collections sourced from human users. - 💬 WildVision-Chat: Human-VLM conversations with images for VLM training…