Yujie Lu

@yujielu_10

Research Scientist @AIatMeta #Llama | CS PhD @UCSB | ex-intern at Microsoft Research, Amazon AWS AI, Meta FAIR

I’m excited to share that I’ve joined @AIatMeta as a Research Scientist, where I’ll be working on Llama Post-training (multimodality). Can’t wait to work with the team and contribute to open-source multimodal AI! This new chapter comes right after successfully completing my PhD…

I strongly recommend Xinyi! Her talks on the theoretical understanding of LLMs are always inspiring, and discussing research ideas with her is such a joy. She's incredibly talented, collaborative, and creative!

I'm on the job market this year! My research focuses on developing principled insights into large language models and creating practical algorithms from those insights. If you have relevant positions, I’d love to connect! wangxinyilinda.github.io Share/RT appreciated!

🤖How well can AI agents conduct open-ended machine learning research? 🚀Excited to share our latest #AI4Research benchmark, MLR-Bench, for evaluating AI agents on open-ended machine learning research!!📈 arxiv.org/pdf/2505.19955 1/

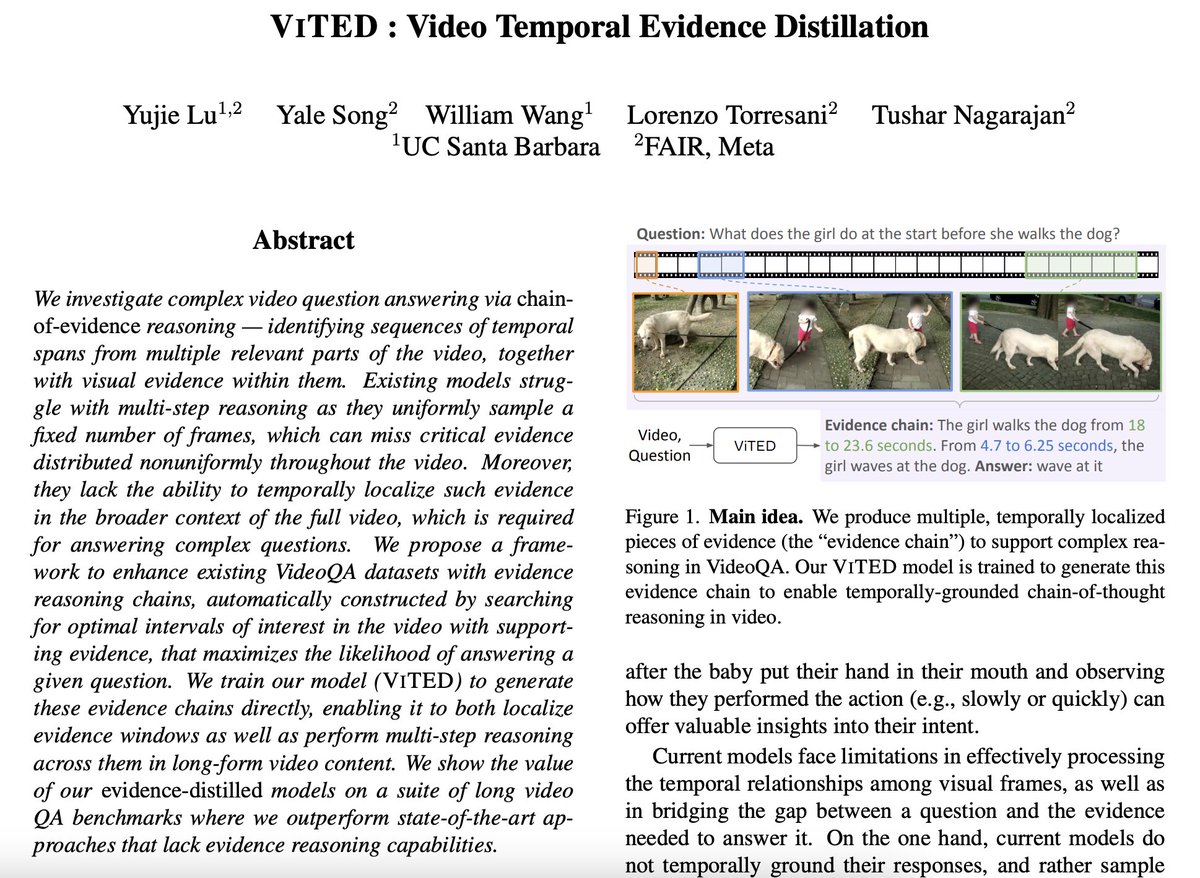

Had an amazing internship at FAIR NYC last summer, and I’m excited to share the result — our paper VITED: Video Temporal Evidence Distillation is accepted at CVPR 2025! 🎉 We tackle complex video QA using evidence reasoning chains for better temporal localization and multi-step…

m-WildVision: Multilingual Multimodal Evaluation Benchmark
- 11,500 image-text pairs across 23 languages
- 500 challenging vision-language tasks per language
- Translated from proven WildVision benchmark queries

🚀 Excited to share the latest on our recent UCSB NLP alumni! 🎉
🔹 @JiachenLi11 (PhD '25) → xAI
🔹 @yujielu_10 (PhD '25) → Meta GenAI
🔹 @PanLiangming (Postdoc '24) → Asst. Prof., Arizona
🔹 @Qnolan4 (PhD '24) → Meta GenAI
Proud of their achievements. Onward and upward! 🚀

Congrats to the new batch of PhD graduates from @ucsbNLP (24/25)! 🎉 This year, they all chose the industry research scientist path: Apple AI/ML, Meta GenAI, xAI. This is a shift from past years, when most pursued academia. Wishing you all the best in your new roles!🚀

Glad to share that MMWorld will appear at #ICLR2025 in Singapore! MMWorld is the first-ever video evaluation benchmark for multi-discipline, multi-faceted video understanding! It was introduced half a year ago and extended the MMLU family! 🔥

🌟Thrilled to introduce MMWorld, a new benchmark for multi-discipline, multi-faceted multimodal video understanding, towards evaluating the "world modeling" capabilities in Multimodal LLMs. 🔥 🔍 Key Features of MMWorld: - Multi-discipline: 7 disciplines, Art🎨 & Sports🥎,…

When evaluating the quality/faithfulness of generated images, simpler, computationally cheaper metrics like CLIPScore and ALIGNScore perform surprisingly well, often matching or outperforming more complex, expensive neural-based metrics like TIFA and DSG. @m2saxon…

I am not at NeurIPS this year, but my amazing collaborator @DongfuJiang is presenting our WildVision-Arena right now at East Exhibition Hall 3602. Feel free to stop by and chat with him!

We are presenting WildVision-Arena from 11am to 2pm at East Exhibition Hall 3602. Feel free to stop by and take a look!

WildVision-Arena Huggingface Space: huggingface.co/spaces/WildVis…

Will be at #NeurIPS2024 from Dec 10 to Dec 14. I will present 2 papers accepted to the NeurIPS 2024 D/B track, on both of which I am the main contributor:
- GenAI-Arena on Dec 13 from 11am to 2pm at East Exhibit Hall A-C #1103 (neurips.cc/virtual/2024/p…)
- WildVision-Arena on Dec 12 from…

Michael is a rising star! It’s always such a pleasure working with him. He’s encouraging, incredibly talented, and full of creative ideas. Every collaboration with him is truly inspiring.

🚨😱Obligatory job market announcement post‼️🤯 I'm searching for faculty positions/postdocs in multimodal/multilingual NLP and generative AI! I'll be at #NeurIPS2024 presenting our work on meta-evaluation for text-to-image faithfulness! Let's chat! Website in bio, papers in🧵

Life update: Excited to announce that I’ll be joining UCSB CS (@ucsbcs) as an Assistant Professor in Fall 2025—where my research dream began! If you’re interested in PhD research (3+ openings) on multimodal, GenAI, or agents (embodied/digital), apply to UCSB CS by Dec 15! 1/n

📣new paper📣Our neurips2024 work improves detection of ChatGPT-generated text!

Do you want to see if your text is generated by AI models such as ChatGPT, GPT-4, Claude and so on? Try our new NeurIPS work: DALD: Improving Logits-based Detector without Logits from Black-box LLMs. You can find the paper at: arxiv.org/abs/2406.05232

Great energy at the AI x Materials summit at UCSB today! Exciting discussions on how AI is transforming materials science, from discovery to sustainability. Inspiring to see so many brilliant minds coming together to push the boundaries of innovation! 🚀

Come and test open VLMs on the WildVision Arena! We have added several amazing VLMs such as Pixtral @MistralAI, Molmo @allen_ai, and Aria @rhymes_ai_. The mug-under-the-table example is still quite challenging for some VLMs, showing their limitations in spatial reasoning. 🔗 on…

Molmo and Aria are on Vision Arena now! Molmo: huggingface.co/allenai/Molmo-… Aria: huggingface.co/rhymes-ai/Aria Try out on WildVision-Arena: huggingface.co/spaces/WildVis…

👏Aria has reached 3rd place in the WildVision-Bench, showcasing great chat ability in the vision scenario. Congrats to the Aria Team! @DongxuLi_ Yudong Liu @HaoningTimothy WildVision-Bench: github.com/WildVision-AI/… Aria: huggingface.co/rhymes-ai/Aria 🔥WildVision-Bench is a…

Introducing Aria: the first open-source, multimodal native MoE, with best-in-class performance across multimodal, language, and coding tasks! Blog: rhymes.ai/blog Technical Report: arxiv.org/pdf/2410.05993 Github: github.com/rhymes-ai/Aria Demo: rhymes.ai