Omar Khattab

@lateinteraction

Asst professor @MIT EECS & CSAIL (@nlp_mit). Author of http://ColBERT.ai and http://DSPy.ai (@DSPyOSS). Prev: CS PhD @StanfordNLP. Research @Databricks.

DSPy's biggest strength is also the reason it can admittedly be hard to wrap your head around. It's basically saying: LLMs & their methods will continue to improve but not equally in every axis, so: - What's the smallest set of fundamental abstractions that allow you to build…

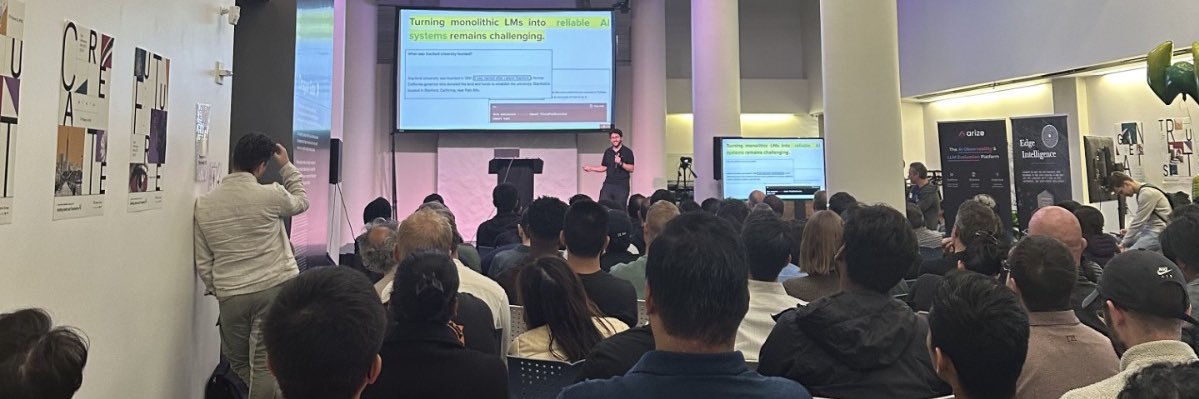

Is this guy talking about DSPy?

In this vein, the IMO results show how deep learning that uses symbolic tools (either in learning or solving) can achieve performance competitive with elite humans even in these formal domains.

The best research questions arise from engaging hands-on with working systems & experiencing the issues; abstracting them into well-defined technical problems, and only then thinking about solutions! P.S. Most high value work in industry actually involves smart "data cleaning."

Academia must be the only industry where extremely high-skilled PhD students spend much of their time doing low value work (like data cleaning). A 1st year management consultant outsources this immediately. Imagine the productivity gains if PhDs could focus on thinking

Great minds think alike! 👀🧠 We also found that more thinking ≠ better reasoning. In our recent paper (arxiv.org/abs/2506.04210), we show how output variance creates the illusion of improvement—when in fact, it can hurt precision. Naïve test-time scaling needs a rethink. 👇…

New Anthropic Research: “Inverse Scaling in Test-Time Compute” We found cases where longer reasoning leads to lower accuracy. Our findings suggest that naïve scaling of test-time compute may inadvertently reinforce problematic reasoning patterns. 🧵

Thanks @lateinteraction ! Every time I think about the gazillion prompt / systems engineering tweaks that also go into making an AI system work I think about how early you were with @DSPyOSS :) Shared theme: find the key human input and make it programmatic.

Every time I think about what it takes to systematically organize the gazillion training tasks that together make a great foundation model, my appreciation for how early @SnorkelAI was increases.

The code is actually super nice to read too. Having great abstractions truly does make a big difference to usability. Also, I highly recommend reading the DSPy docs end-to-end. It makes learning and using it SO much nicer, because it all "clicks".

Day 1 of using @DSPyOSS, and it's amazing 🚀. It's indeed remarkable how simple its API is to relate to - all you need to wrap your head around is the idea of signatures & modules (don't worry about optimizers to start with). And knowledge of Pydantic helps. It's a breeze!

Back in grad school, when I realized how the “marketplace of ideas” actually works, it felt like I’d found the cheat codes to a research career. Today, this is the most important stuff I teach students, more than anything related to the substance of our research. A quick…

oh shit nice, glad i get this better now than when you og posted lol

This original RLHF process by itself is not enough to define rewards for most types of tasks. It's the one that bakes in the fewest assumptions, but it's not enough for math, coding, factual knowledge, instruction-following, etc., where you can design better reward functions.