Krithik Ramesh

@KrithikTweets

AI + Math @MIT, compbio stuff @broadinstitute, prev: research @togethercompute

🧬 Meet Lyra, a new paradigm for accessible, powerful modeling of biological sequences. Lyra is a lightweight SSM achieving SOTA performance across DNA, RNA, and protein tasks—yet up to 120,000x smaller than foundation models (ESM, Evo). Bonus: you can train it on your Mac. read…

Big Token can’t handle H-net supremacy

BPE transformer watching an H-Net output an entire wikipedia article as one chunk

Banger tweet

Big Token is quaking in their boots; don’t worry, we’re here to free you all

🎉 Excited to share that our paper "Pretrained Hybrids with MAD Skills" was accepted to @COLM_conf 2025! We introduce Manticore - a framework for automatically creating hybrid LMs from pretrained models without training from scratch. 🧵[1/n]

Tokenization has been the final barrier to truly end-to-end language models. We developed the H-Net: a hierarchical network that replaces tokenization with a dynamic chunking process directly inside the model, automatically discovering and operating over meaningful units of data

Tokenization is just a special case of "chunking" - building low-level data into high-level abstractions - which is in turn fundamental to intelligence. Our new architecture, which enables hierarchical *dynamic chunking*, is not only tokenizer-free, but simply scales better.

MFW people are surprised that scaling up transformer-based protein language models didn't help with hella high-resolution variant effect fitness prediction tasks

I converted one of my favorite talks I've given over the past year into a blog post. "On the Tradeoffs of SSMs and Transformers" (or: tokens are bullshit) In a few days, we'll release what I believe is the next major advance for architectures.

1/10 ML can solve PDEs – but precision 🔬 is still a challenge. Towards high-precision methods for scientific problems, we introduce BWLer 🎳, a new architecture for physics-informed learning achieving (near-)machine-precision (up to 10⁻¹² RMSE) on benchmark PDEs. 🧵How it works:

Despite theoretically handling long contexts, existing recurrent models still fall short: they may fail to generalize past the training length. We show a simple and general fix that enables length generalization on sequences of up to 256k, with no need to change the architectures!

Nico Hulkenberg getting his podium is enough to make a grown man cry 🥹

1/ Excited to share our recent work in #ICML2025, “A multi-region brain model to elucidate the role of hippocampus in spatially embedded decision-making”. 🎉 🔗 minzsiure.github.io/multiregion-br… Joint w/ @FieteGroup @jaedong_hwang, @brody_lab, @PrincetonNeuro ⬇️ 🧵 for key takeaways…

Excited to share our new work on Self-Adapting Language Models! This is my first first-author paper and I’m grateful to be able to work with such an amazing team of collaborators: @jyo_pari @HanGuo97 @akyurekekin @yoonrkim @pulkitology

What if an LLM could update its own weights? Meet SEAL🦭: a framework where LLMs generate their own training data (self-edits) to update their weights in response to new inputs. Self-editing is learned via RL, using the updated model’s downstream performance as reward.

exciting to see that hybrid models maintain reasoning performance with few attention layers. benefits of linear architectures are prominent for long reasoning traces, when efficiency is bottlenecked by decoding - seems like a free win if reasoning ability is preserved as well!

👀 Nemotron-H tackles large-scale reasoning while maintaining speed -- with 4x the throughput of comparable transformer models.⚡ See how #NVIDIAResearch accomplished this using a hybrid Mamba-Transformer architecture, and model fine-tuning ➡️ nvda.ws/43PMrJm

In the test-time scaling era, we would all love a higher-throughput serving engine! Introducing Tokasaurus, an LLM inference engine for high-throughput workloads with large and small models! Led by @jordanjuravsky, in collaboration with @HazyResearch and an amazing team!

Happy Throughput Thursday! We’re excited to release Tokasaurus: an LLM inference engine designed from the ground up for high-throughput workloads with large and small models. (Joint work with @achakravarthy01, @ryansehrlich, @EyubogluSabri, @brad19brown, @jshetaye,…

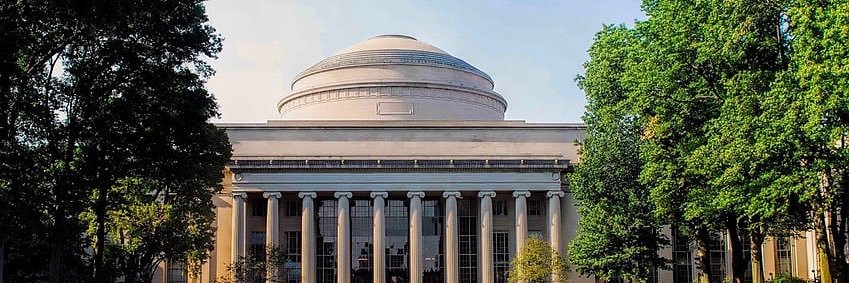

You did it, MIT students! We’re so proud of you. Image: Jenny Baek '25

"Pre-training was hard, inference easy; now everything is hard." – Jensen Huang. Inference drives AI progress b/c of test-time compute. Introducing inference-aware attn: parallel-friendly, high arithmetic intensity – Grouped-Tied Attn & Grouped Latent Attn

Anthropic intelligence*

We're rolling out voice mode in beta on mobile. Try starting a voice conversation and asking Claude to summarize your calendar or search your docs.