tender

@tenderizzation

PRs reverted world champion

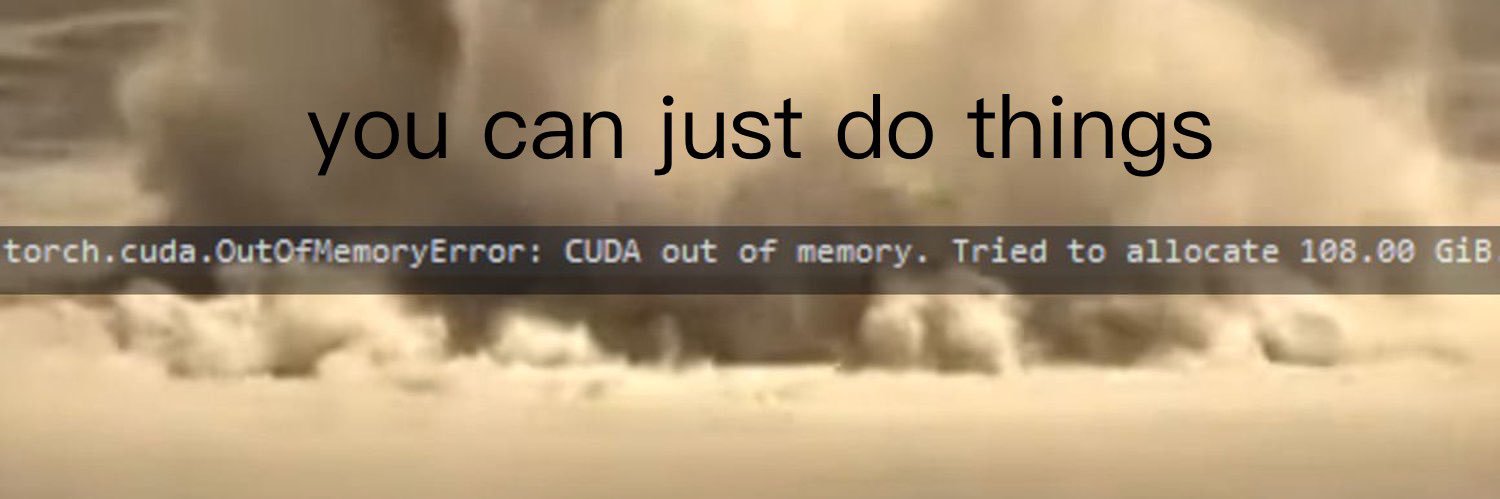

DM me "hey" I'll debug your CUDA error: an illegal memory access 🍒 "hi" I'll debug your cuDNN error: CUDNN_STATUS_BAD_PARAM 🍑 "howdy" I'll debug your CUDA error: CUBLAS_STATUS_EXECUTION_FAILED 🍓

people will say this, and then you make the default config more optimal, only to find out it is broken on a GeForce GTX 1050 Ti when the horde of Windows huggingface/diffusers users descends on your GitHub project

running some workload on torchtitan with the default config: 23% MFU. change the config a bit, enable compile and flex, increase the batch size a bit --> 58% MFU. I wish we had more performant defaults in the torch ecosystem

the corollary to this is that until gdm poaches some high-profile folks, the cost to convince someone to switch from pytorch to JAX is unbounded

we can only surmise that it costs $100M to poach an openai researcher but $1B to poach a gdm researcher because that’s how much it costs to convince someone to switch from JAX to pytorch

world class AI researcher explaining why their FP4 quantization-aware training recipe is the only one that prevents the model from diverging

"the patient needs horse piano to live"

Tri Dao beating one of his own kernels with RL

what if Tri Dao is one of the co-authors😏

if you want to build this you can just start from the code generated by the torch.compile Inductor C++ wrapper and remove dependencies until there are none left </shitpost>

It's honestly incomprehensible to me that we haven't started writing training solutions like game engines. Stable, well designed abstractions, in a clean zero-dependency C++ project. You know game engines also just build GPU command buffers, right?

to be fair you will see the same kind of stuff at Regeneron (rip Intel) STS and ISEF

The children yearn to be working in fabs. At Taiwan's high school science exhibition this year, students are discussing 1.5nm Gate-All-Around transistor structure optimization. The kids are unbelievably cracked.

contributors adding a new feature and then realizing it has to be compatible with all previous use-cases, including bugs

Aaand it’s reverted (hopefully back in soon though)

on the heels of the IMO gold announcement, I would like to point out that native FusedRMSNorm by @Norapom04 was finally merged into pytorch (and not reverted yet 🤞). torch.nn.RMSNorm will automatically pick the faster path with no user code changes, multiple times speedup over previous…

back in my day our pile of S-curves was called Moore’s Law, and it lasted four decades: CMOS, immersion lithography, DUV, EUV, multi-patterning, FinFET, just to name a few. but it doesn’t seem like we need four decades of sustained AI progress for it to become self-sustaining

What seems like an exponential in AI is just a series of S-curves. Each era rides on a wave of increasing compute but finds a new way to utilise it, overcoming limitations of the previous stage. E.g., pre-training was the dominant way to utilise compute, but the limitations of…

the NaN loss making its way from a worker gpu register through global memory, host memory, cpu registers, the NIC, TCP/IP, the wandb server's NIC, its system memory, ..., TCP/IP back to some web browser just so the researcher can kill the experiment