Zhaofeng Wu ✈️ ACL

@zhaofeng_wu

PhD student @MIT_CSAIL | Previously @allen_ai | MS'21 BS'19 BA'19 @uwnlp

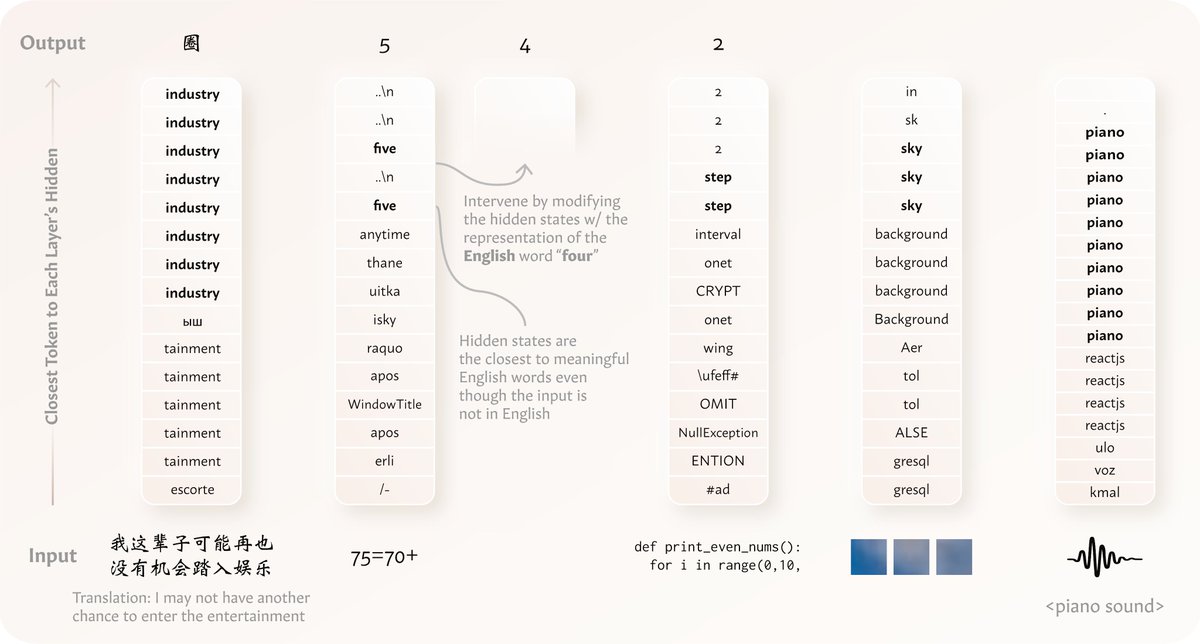

💡We find that models “think” 💭 in English (or in general, their dominant language) when processing distinct non-English or even non-language data types 🤯 like texts in other languages, arithmetic expressions, code, visual inputs, & audio inputs ‼️ 🧵⬇️arxiv.org/abs/2411.04986

A short 📹 explainer video on how LLMs can overthink in humanlike ways 😲! had a blast presenting this at #icml2025 🥳

1+1=3 2+2=5 3+3=? Many language models (e.g., Llama 3 8B, Mistral v0.1 7B) will answer 7. But why? We dig into the model internals, uncover a function induction mechanism, and find that it’s broadly reused when models encounter surprises during in-context learning. 🧵

WHY do you prefer something over another? Reward models treat preference as a black-box😶🌫️but human brains🧠decompose decisions into hidden attributes We built the first system to mirror how people really make decisions in our #COLM2025 paper🎨PrefPalette✨ Why it matters👉🏻🧵

I'll be in Vienna at #acl2025! Please lmk if you'd like to chat 🙂

🤖New paper w/ @zh_herbert_zhou @SimonCharlow @bob_frank in ACL2025 & SCiL 💡We use core ideas from Dynamic Semantics to evaluate LLMs and found that they show human-like judgments on anaphora accessibility but rely on specific lexical cues under more careful scrutiny. 🧵1/6

I will be in Vancouver🇨🇦 for #ICML2025 this week and present #SelfCite on Tuesday morning. Happy to chat and connect. See you there! Blog post link: selfcite.github.io

(1/5)🚨LLMs can now self-improve to generate better citations✅ 📝We design automatic rewards to assess citation quality 🤖Enable BoN/SimPO w/o external supervision 📈Perform close to “Claude Citations” API w/ only 8B model 📄arxiv.org/abs/2502.09604 🧑💻github.com/voidism/SelfCi…

🎉 Excited to announce that the 4th HCI+NLP workshop will be co-located with @EMNLP in Suzhou, China! 🌍📍 Join us to explore the intersection of human-computer interaction and NLP. 🧵 1/

✨Hi everyone! We are running a user study on a physical activity coaching chatbot to help boost your STEPCOUNT 💪💪💪! This study will span 4 - 6 weeks. Please sign up if you are interested! forms.gle/K9wgvcL8jBsKTf…

Seeing an experiment and thinking "but have they tried X? what if we do Y?" is a key part of research and a start to new discoveries. RexBench tests if coding agents can implement new extensions. It complements recent evals (eg PaperBench from @OpenAI) on replication! See 👇

Can coding agents autonomously implement AI research extensions? We introduce RExBench, a benchmark that tests if a coding agent can implement a novel experiment based on existing research and code. Finding: Most agents we tested had a low success rate, but there is promise!

Are AI scientists already better than human researchers? We recruited 43 PhD students to spend 3 months executing research ideas proposed by an LLM agent vs human experts. Main finding: LLM ideas result in worse projects than human ideas.

📈 Key results ✅ +5.3% avg accuracy on RewardBench (Safety +12.4%, Reasoning +7.1%) ✅ +9.1% aggregate gain on reWordBench (21/23 transformations) that tests for robustness to meaning preserving transformations. Thanks to @zhaofeng_wu, @gh_marjan and others for the great…

✅ +9.1% aggregate gain on reWordBench (21/23 transformations) that tests for robustness to meaning preserving transformations. Thanks to @zhaofeng_wu, @gh_marjan and others for the great benchmark. See more details about reWordBench x.com/zhaofeng_wu/st…

Robust reward models are critical for alignment/inference-time algos, auto eval, etc. (e.g. to prevent reward hacking which could render alignment ineffective). ⚠️ But we found that SOTA RMs are brittle 🫧 and easily flip predictions when the inputs are slightly transformed 🍃 🧵

Web data, the “fossil fuel of AI”, is being exhausted. What’s next?🤔 We propose Recycling the Web to break the data wall of pretraining via grounded synthetic data. It is more effective than standard data filtering methods, even with multi-epoch repeats! arxiv.org/abs/2506.04689

I didn't believe when I first saw, but: We trained a prompt stealing model that gets >3x SoTA accuracy. The secret is representing LLM outputs *correctly* 🚲 Demo/blog: mattf1n.github.io/pils 📄: arxiv.org/abs/2506.17090 🤖: huggingface.co/dill-lab/pils-… 🧑💻: github.com/dill-lab/PILS

What happens when an LLM is asked to use information that contradicts its knowledge? We explore knowledge conflict in a new preprint📑 TLDR: Performance drops, and this could affect the overall performance of LLMs in model-based evaluation.📑🧵⬇️ 1/8 #NLProc #LLM #AIResearch

LLMs trained to memorize new facts can’t use those facts well.🤔 We apply a hypernetwork to ✏️edit✏️ the gradients for fact propagation, improving accuracy by 2x on a challenging subset of RippleEdit!💡 Our approach, PropMEND, extends MEND with a new objective for propagation.

What if an LLM could update its own weights? Meet SEAL🦭: a framework where LLMs generate their own training data (self-edits) to update their weights in response to new inputs. Self-editing is learned via RL, using the updated model’s downstream performance as reward.

🚨 70 million US workers are about to face their biggest workplace transmission due to AI agents. But nobody asks them what they want. While AI races to automate everything, we took a different approach: auditing what workers want vs. what AI can do across the US workforce.🧵

Flash Linear Attention (github.com/fla-org/flash-…) will no longer maintain support for the RWKV series (existing code will remain available). Here’s why:

🔥Unlocking New Paradigm for Test-Time Scaling of Agents! We introduce Test-Time Interaction (TTI), which scales the number of interaction steps beyond thinking tokens per step. Our agents learn to act longer➡️richer exploration➡️better success Paper: arxiv.org/abs/2506.07976