Ryan Liu @ ICML, CogSci

@theryanliu

2nd year CS PhD @ Princeton advised by Tom Griffiths

A short 📹 explainer video on how LLMs can overthink in humanlike ways 😲! had a blast presenting this at #icml2025 🥳

New preprint! 🤖🧠 The cost of thinking is similar between large reasoning models and humans 👉 osf.io/preprints/psya… w/ Ferdinando D'Elia, @AndrewLampinen, and @ev_fedorenko (1/6)

Grateful to have our recent ICML paper covered by @StanfordHAI. Humanity is building incredibly powerful AI technology that can usher in utopia or dystopia. We need human-computer interaction research, particularly with “social science tools like simulations that can keep pace.”

Social science research can be time-consuming, expensive, and hard to replicate. But with AI, scientists can now simulate human data and run studies at scale. Does it actually work? hai.stanford.edu/news/social-sc…

Today (w/ @UniofOxford @Stanford @MIT @LSEnews) we’re sharing the results of the largest AI persuasion experiments to date: 76k participants, 19 LLMs, 707 political issues. We examine “levers” of AI persuasion: model scale, post-training, prompting, personalization, & more 🧵

New Anthropic Research: “Inverse Scaling in Test-Time Compute” We found cases where longer reasoning leads to lower accuracy. Our findings suggest that naïve scaling of test-time compute may inadvertently reinforce problematic reasoning patterns. 🧵

A hallmark of human intelligence is the capacity for rapid adaptation, solving new problems quickly under novel and unfamiliar conditions. How can we build machines to do so? In our new preprint, we propose that any general intelligence system must have an adaptive world model,…

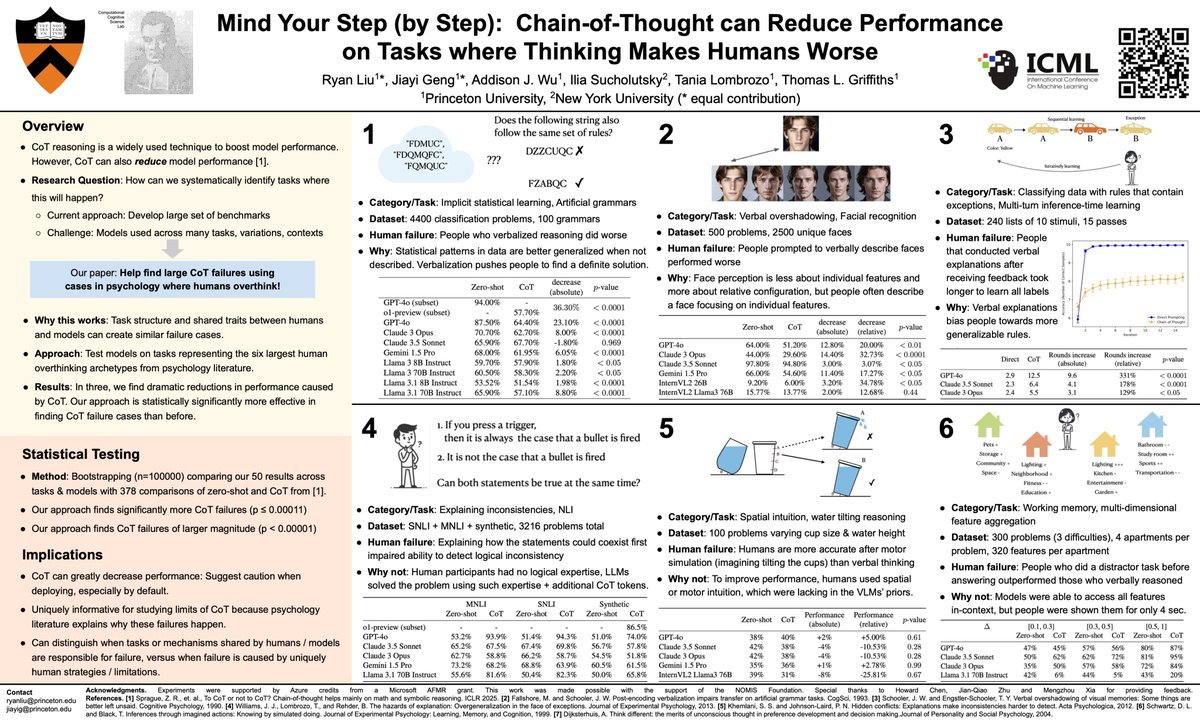

Chain of thought can hurt LLM performance 🤖 Verbal (over)thinking can hurt human performance 😵💫 Are when/why they happen similar? Come find out at our poster at West-320 ⏰11am tomorrow! #ICML2025

🤔 Feel like your AI is bullshitting you? It’s not just you. 🚨 We quantified machine bullshit 💩 Turns out, aligning LLMs to be "helpful" via human feedback actually teaches them to bullshit—and Chain-of-Thought reasoning just makes it worse! 🔥 Time to rethink AI alignment.

Using LLMs to build AI scientists is all the rage now (e.g., Google’s AI co-scientist [1] and Sakana’s Fully Automated Scientist [2]), but how much do we understand about their core scientific abilities? We know how LLMs can be vastly useful (solving complex math problems) yet…

Should we use LLMs 🤖 to simulate human research subjects 🧑? In our new preprint, we argue sims can augment human studies to scale up social science as AI technology accelerates. We identify five tractable challenges and argue this is a promising and underused research method 🧵

Todo lists, docs, email style – if you've got individual or team knowledge you want ChatGPT/Claude to have access to, Knoll (knollapp.com) is a personal RAG store from @Stanford that you can add any knowledge into. Instead of copy-pasting into your prompt every time,…

OpenAI has launched Operator, an agent that can perform tasks in your browser. I asked it to complete a Qualtrics survey I created. The results are very promising for Operator but *very* concerning for survey researchers

Constitutional AI works great for aligning LLMs, but the principles can be too generic to apply. Can we guide responses with context-situated principles instead? Introducing SPRI, a system that produces principles tailored to each query, with minimal to no human effort. [1/5]

Simulating future outcomes of people's interactions with AI reduces deception... Because eventually the person will find out! 🙃 Great application of simulation, really enjoyed working on the project with @kevin_lkq @HaiminHu @cocosci_lab & Jaime

Social science research can be time-consuming, expensive, and hard to replicate. But with AI, scientists can now simulate human data and run studies at scale. Does it actually work? hai.stanford.edu/news/social-sc…

Do reviewers appropriately update their scores based on rebuttals? In this PLOS ONE paper with @theryanliu, Steven Jecmen, @fangf07, and Nihar Shah, we present a randomized controlled trial that suggests that, at least under certain conditions, they do. journals.plos.org/plosone/articl…

So thrilled to share our work on this~! Grounding generative agents in real, verifiable behavior is the step that takes this method from producing simulacra to creating simulations that capture the richness of human experiences.

Simulating human behavior with AI agents promises a testbed for policy and the social sciences. We interviewed 1,000 people for two hours each to create generative agents of them. These agents replicate their source individuals’ attitudes and behaviors. 🧵arxiv.org/abs/2411.10109

Simulating human behavior with AI agents promises a testbed for policy and the social sciences. We interviewed 1,000 people for two hours each to create generative agents of them. These agents replicate their source individuals’ attitudes and behaviors. 🧵arxiv.org/abs/2411.10109

Academic job market post! 👀 I’m a CS Postdoc at Stanford in the @StanfordHCI group. I develop ways to improve the online information ecosystem, by designing better social media feeds & improving Wikipedia. I work on AI, Social Computing, and HCI. piccardi.me 🧵