Daniel van Strien

@vanstriendaniel

Machine Learning Librarian @huggingface 🤗 I like datasets.

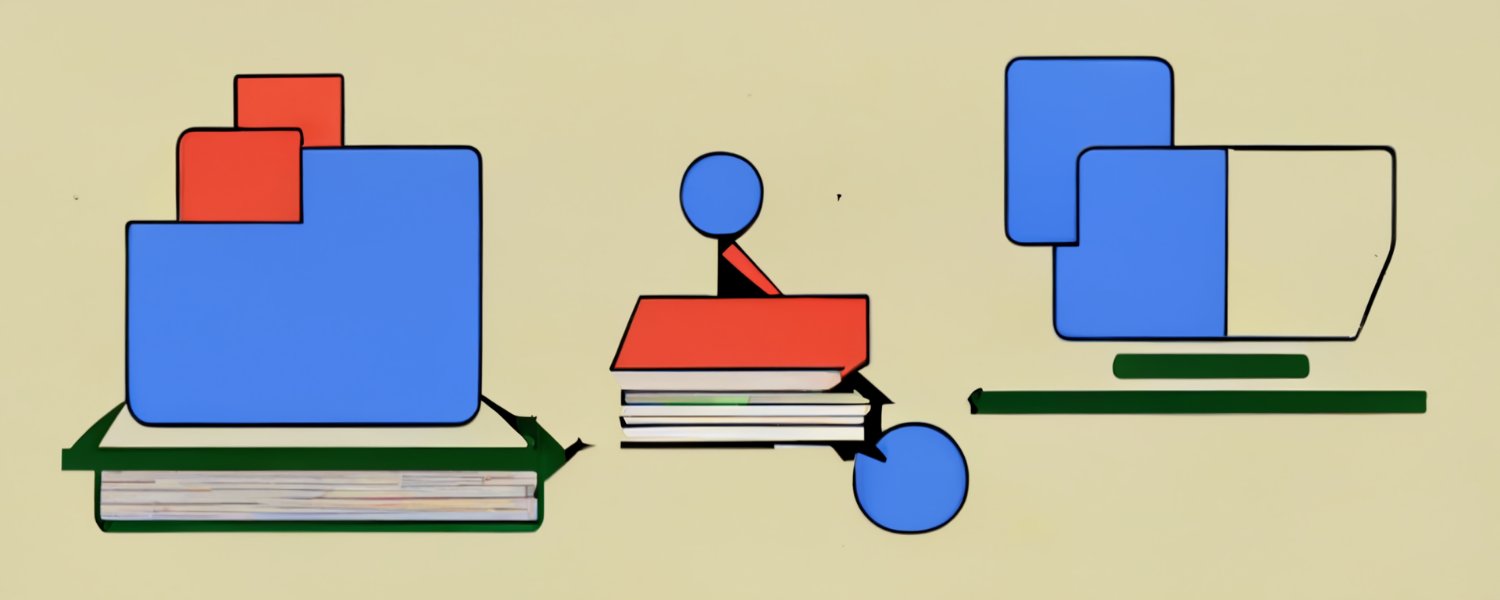

A new Pandas feature landed 3 days ago and no one noticed. Upload ONLY THE NEW DATA to dedupe-based storage like @huggingface (Xet). Data that already exist in other files don't need to be uploaded. Possible thanks to the recent addition of Content Defined Chunking for Parquet.

🤔 Have you ever wondered how good ModernBERT is compared to decoders like Llama? We made an open-data version of ModernBERT and used the same recipe for encoders and decoders. Turns out, our encoder model beats ModernBERT and our decoder model beats Llama 3.2 / SmolLM2 🤯 🧵

Today we're releasing Community Alignment - the largest open-source dataset of human preferences for LLMs, containing ~200k comparisons from >3000 annotators in 5 countries / languages! There was a lot of research that went into this... 🧵

Microsoft releases a new dataset that improves Qwen2.5-7B from 17.4% to 57.3% on LiveCodeBench. It's called rStar-Coder, 418K tasks designed to push competitive code reasoning. A 7B model trained on it outperforms QWQ-32B on the USA Computing Olympiad. huggingface.co/datasets/micro…

In case the post was too vague, yes - this is the Hermes 3 dataset - 1 Million Samples - Created SOTA without the censorship at its time on Llama-3 series (8, 70, and 405B) - Has a ton of data for teaching system prompt adherence, roleplay, and a great mix of subjective and…

huggingface.co/datasets/NousR…

Working on something fun. Imagine being able to use a VLM to annotate any IIIF image by selecting a region and annotating/transcribing it. This can also be used to map to line-level models (like Kraken) for HTR. Imagine no more! =) would anyone find this useful?

🚀Our rStar-Coder dataset is now released! A verified dataset of 418K competition-level code problems, each with test cases of varying difficulty. On LiveCodeBench, it boosts Qwen2.5-14B from 23.3% → 62.5%, beating o3-mini (low) by +3.1%. Try it here: huggingface.co/datasets/micro…

Google Drive is great for many things — sharing research datasets isn’t one of them. If your dataset isn’t on the @huggingface Hub yet, LLMs can now help. Inspired by @jeremyphoward’s llms.txt, we’ve made a guide to help LLMs convert your data to Hub format.

A truly remarkable and inspiring initiative!🎉 Proud to have been a part of it

465 people. 122 languages. 58,185 annotations! FineWeb-C v1 is complete! Communities worldwide have built their own educational quality datasets, proving that we don't need to wait for big tech to support languages. Huge thanks to all who contributed! huggingface.co/blog/davanstri…

Happy to announce 🤗Datasets 4 ! we've added the most requested feature 👀 Introducing streaming data pipelines for Hugging Face Datasets ✨ With support for large, multimodal datasets in any standard file format, and with num_proc= for speed⚡

Nice! Norwegian Bokmål is also here 🇳🇴 huggingface.co/datasets/NbAiL…

Glad to have contributed to Tamil! One thing I noticed while annotating was the poor quality (i.e., no educational value) of the content. What counts as NO educational value? – Nothing to learn from – Pure entertainment, ads, or personal content ....

I have an alternative proposal.

For the last few months I’ve brought up ‘transparency’ as a policy framework for governing powerful AI systems and the companies that develop them - to help move this conversation forward @anthropicai has published details about what a transparency framework could look like