Zihan Wang - on RAGEN

@wzihanw

PhD Student @NorthwesternU. Intern @yutori_ai. I study PhysiCS of LLM. Ex @deepseek_ai @uiuc_nlp @RUC. RAGEN | Chain-of-Experts | ESFT.

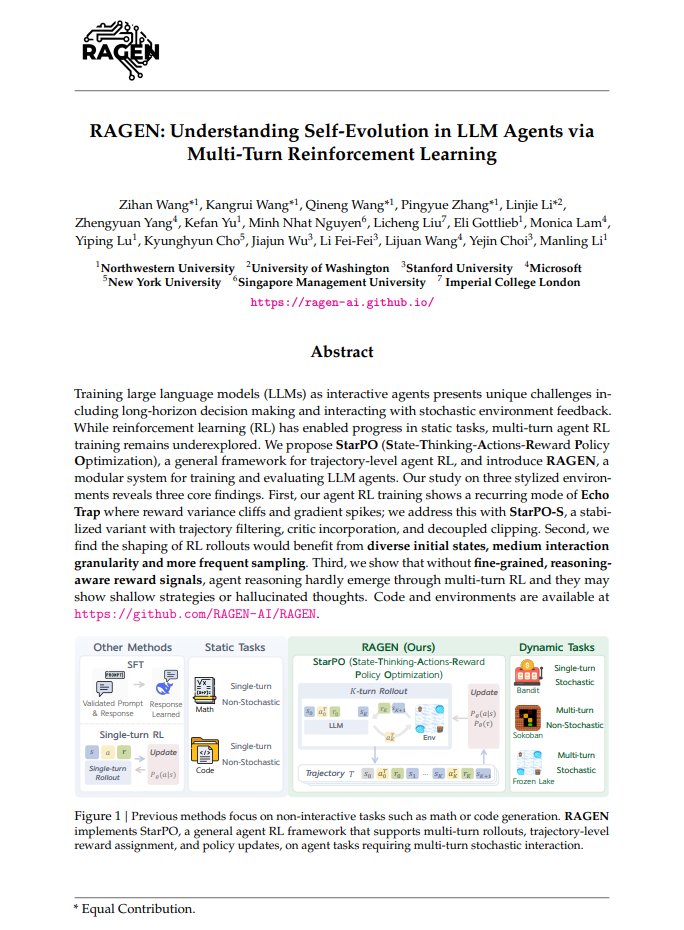

Why does your RL training always collapse? In our new RAGEN paper, we explore what breaks when you train LLM *Agents* with multi-turn reinforcement learning, and possibly how to fix it. 📄 github.com/RAGEN-AI/RAGEN… 🌐 ragen-ai.github.io 1/🧵👇

Single-turn data -> Multi-turn RL for general reasoning. A simple yet effective method. Worth trying out! Kudos to our amazing intern @liulicheng10. He is applying for PhD positions for Fall 26!

Will conversation history help reasoning? We found that when models mess up once, they often get stuck. Surprisingly, a simple “try again” fixes this — and boosts reasoning.🧵 Project Page: unary-feedback.github.io

Excited that @RuohanZhang76 is joining NU @northwesterncs! If you are thinking about pursuing a PhD, definitely reach out to him! During my wonderful year at @StanfordAILab @StanfordSVL, when I was completely new to robotics, he was the nicest person who was incredibly patient…

📢 Beginning this fall, four new tenure-track, clinical, and visiting faculty members will join our department! 📢 We are thrilled to welcome Shaddin Dughmi, Sidhanth Mohanty, Lydia Tse, and Ruohan Zhang! Meet the newest members of our team: spr.ly/6019fGdXv

Excited to be speaking about agents at #GenAIWeek Silicon Valley this Sunday, July 13! Meet us at the panel Agent-to-Human Interfaces & Interactions, 10:50-11:30 AM :)

📣 Excited to announce SpaVLE: #NeurIPS2025 Workshop on Space in Vision, Language, and Embodied AI! 👉 …vision-language-embodied-ai.github.io 🦾Co-organized with an incredible team → @fredahshi · @maojiayuan · @DJiafei · @ManlingLi_ · David Hsu · @Kordjamshidi 🌌 Why Space & SpaVLE? We…

MindCube is the result of a joint effort of @NorthwesternEng @StanfordAILab @StanfordHAI @StanfordSVL @NYU_Courant @uwcse. Huge thanks to project leader @qineng_wang @Baiqiao_Yin and our incredible team @drfeifei @jiajunwu_cs @sainingxie @RanjayKrishna @HanLiu @WilliamZhangNU…

Grateful to be a part of this amazing project on spatial intelligence led by my awesome labmate @qineng_wang!

Can VLMs build Spatial Mental Models like humans? Reasoning from limited views? Reasoning from partial observations? Reasoning about unseen objects behind furniture / beyond current view? Check out MindCube! 🌐mll-lab-nu.github.io/mind-cube/ 📰arxiv.org/pdf/2506.21458…

Are AI scientists already better than human researchers? We recruited 43 PhD students to spend 3 months executing research ideas proposed by an LLM agent vs human experts. Main finding: LLM ideas result in worse projects than human ideas.

Finally available on arxiv! arxiv.org/abs/2506.18945

🚀 Introducing Chain-of-Experts (CoE), a free-lunch optimization method for DeepSeek-like MoE models! Within $200, we explore training MoEs that enable a 17.6-42% efficiency boost in memory! Code: github.com/ZihanWang314/c… Blog: notion.so/Chain-of-Exper……

Chain-of-Experts (CoE), proposed by @wzihanw and @ruipeterpan, is a fresh take on Mixture-of-Experts that trades parallelism for communication. Instead of picking experts once, CoE iteratively routes tokens through a chain of experts within each layer, re-evaluating at every…
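A toy illustration of the iterative routing idea described in the post above, not the authors' implementation. Everything concrete here is an assumption made for the sketch: the function name `coe_layer`, linear experts, one router matrix per chain step, top-k selection with renormalized weights, and a residual update between steps. It only shows how a token's hidden state can be routed again after each expert step inside a single layer.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def make_matrix(rng, rows, cols, scale=0.1):
    """Small random matrix; stands in for learned weights (hypothetical)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def coe_layer(hidden, experts, routers, top_k=2):
    """Pass one token through a chain of expert steps within a single layer.

    Each router in `routers` scores the experts from the *current* hidden
    state, so the choice of experts is recomputed at every chain step.
    Returns the final hidden state and the experts chosen at each step.
    """
    trace = []
    for router in routers:
        scores = softmax(matvec(router, hidden))
        # Pick the top_k highest-scoring experts for this step.
        chosen = sorted(range(len(experts)), key=lambda e: scores[e], reverse=True)[:top_k]
        norm = sum(scores[e] for e in chosen)
        update = [0.0] * len(hidden)
        for e in chosen:
            out = matvec(experts[e], hidden)
            weight = scores[e] / norm  # renormalize over the chosen experts
            update = [u + weight * o for u, o in zip(update, out)]
        # Residual update: the next step routes on the updated state.
        hidden = [h + u for h, u in zip(hidden, update)]
        trace.append(chosen)
    return hidden, trace


rng = random.Random(0)
dim, num_experts, chain_len = 4, 4, 2
experts = [make_matrix(rng, dim, dim) for _ in range(num_experts)]
routers = [make_matrix(rng, num_experts, dim) for _ in range(chain_len)]
token = [1.0, -0.5, 0.25, 2.0]
output, trace = coe_layer(token, experts, routers)
print(trace)  # experts selected at each chain step
```

With `chain_len = 1` this reduces to an ordinary single-pass top-k MoE layer, which is the baseline the post contrasts against.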

Getting reward from human preference for robots👇 ROSETTA won best paper at CRLH @ RSS and will be at HitLRL today! Led by the amazing @sanjana__z, come and chat more with her at RSS today!

🤖 Household robots are becoming physically viable. But interacting with people in the home requires handling unseen, unconstrained, dynamic preferences, not just a complex physical domain. We introduce ROSETTA: a method to generate reward for such preferences cheaply. 🧵⬇️

Catch our Chief Scientist, @DhruvBatraDB, at @ Scale tomorrow (June 25) at 1:20pm PT for a panel on building generative AI startups.

It's actually a pity that we did not have enough time to maintain OpenManus during the past 3 months. But the better news is that we will build a formal open-source community for OpenManus at the end of this month.

My Scout's report this morning on @AIatMeta's hiring progress for superintelligence: - Johan Schalkwyk: success - @polynoamial and @koraykv: failed - Low retention rates compared to @AnthropicAI Things happen in the world and I get a very succinct timely report. Reporting…

I have a Scout set up for leadership changes to major AI labs. It told me 30 min ago that @jack_w_rae was moving to @AIatMeta following @shiringhaffary @KurtWagner8 @rileyraygriffin's article in Bloomberg. I couldn't have set up a Google alert for this specific change. I…

Just started my first day at Yutori @yutori_ai (based in SF)! I’ll continue building autonomous and personalized agents. Super excited to join the team — friends in SF, let’s catch up soon! 🚀

What if an LLM could update its own weights? Meet SEAL🦭: a framework where LLMs generate their own training data (self-edits) to update their weights in response to new inputs. Self-editing is learned via RL, using the updated model’s downstream performance as reward.

We will present T* and LV-Haystack this afternoon @CVPR, Poster #306. Come check out our poster!

🚀 Introducing T* and LV-Haystack — our latest leap forward in VLMs for long video understanding! 🧩 Lightweight plugin: T* boosts LLaVA-OV-72B (56→62%) and GPT-4o (50→53%)! ⚡ Fast inference: 34.9s → 10.4s latency, 691 → 170 TFLOPs vs. SOTA. 📚 Large-scale dataset: 400…

Honored to sponsor the #CVPR workshop and present alongside brilliant researchers. Let’s push the boundaries of embodied AI together! 🚀 #AI #MachineLearning

Appreciate the support from @abaka_ai for our workshop!