Martin Ziqiao Ma

@ziqiao_ma

〽️ PhD @UMichCSE | 💼 @IBM @Adobe @Amazon | Weinberg Cogsci Fellow | Language, Interaction, Embodiment | Make community better @ACLMentorship @GrowAILikeChild

Can we scale 4D pretraining to learn general space-time representations that reconstruct an object from a few views at any time to any view at any other time? Introducing 4D-LRM: a Large Space-Time Reconstruction Model that ... 🔹 Predicts 4D Gaussian primitives directly from…

Big congrats! If you’re thinking about a PhD in multimodal reasoning, generation, and evaluation, go work with @jmin__cho!

🥳 Gap year update: I'll be joining @allen_ai/@UW for 1 year (Sep2025-Jul2026 -> @JHUCompSci) & looking forward to working with amazing folks there, incl. @RanjayKrishna, @HannaHajishirzi, Ali Farhadi. 🚨 I’ll also be recruiting PhD students for my group at @JHUCompSci for Fall…

Test-time scaling nailed code & math—next stop: the real 3D world. 🌍 MindJourney pairs any VLM with a video-diffusion World Model, letting it explore an imagined scene before answering. One frame becomes a tour—and the tour leads to new SOTA in spatial reasoning. 🚀 🧵1/

Does vision training change how language is represented and used in meaningful ways?🤔 The answer is a nuanced yes! Comparing VLM-LM minimal pairs, we find that while the taxonomic organization of the lexicon is similar, VLMs are better at _deploying_ this knowledge. [1/9]

Wow lots of discussions about my paper...sorry I am a bit late to the party.😄 You raise an important point about prompt sensitivity. But it’s crucial to recognize the asymmetry in the logical implications of positive vs. negative evidence: -> To demonstrate that a system P…

This is actually a great example of what I'm criticizing. They design a bunch of prompts but do not evaluate the variance across these options, nor which aspects of the prompts matter or not.

+1 on this! Mixed-effects models are such an underrated protocol for behavioral analysis that AI researchers often overlook. Behavioral data are almost never independent: clustering, repeated measures, and hierarchical structures abound. Mixed-effects models account for these…

I'd highlight the point on generalization: to make a "poor generalization" argument, we need systematic evaluations. A promising protocol is prompting multiple LMs and treating each as an individual in mixed-effect models. arxiv.org/pdf/2502.09589 w/ @tom_yixuan_wang (2/n)

🤨Ever dream of a tool that can magically restore and upscale any (low-res) photo to crystal-clear 4K? 🔥Introducing "4KAgent: Agentic Any Image to 4K Super-Resolution", the most capable upscaling generalist designed to handle broad image types. 🔗4kagent.github.io 1/🧵

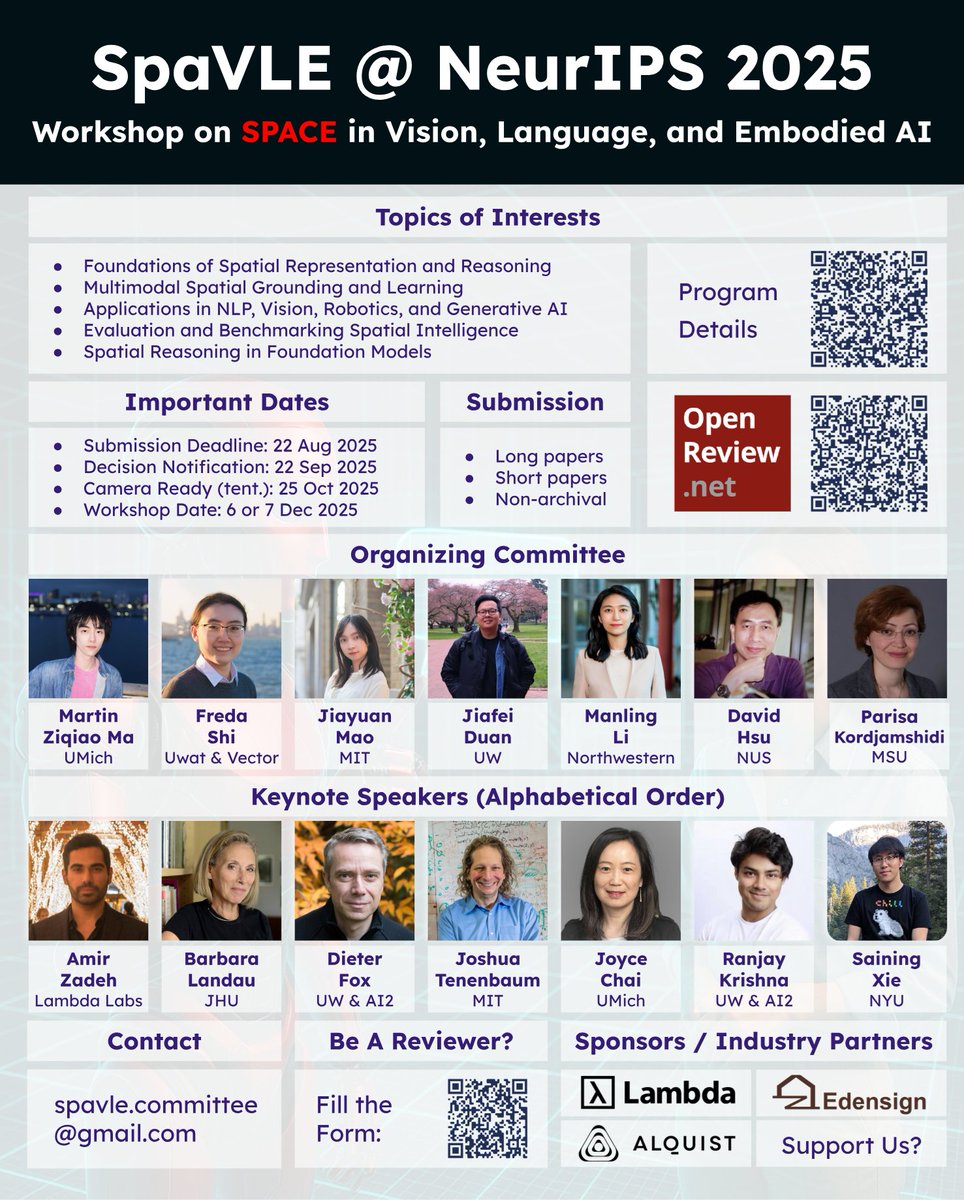

📣 Excited to announce SpaVLE: #NeurIPS2025 Workshop on Space in Vision, Language, and Embodied AI! 👉…vision-language-embodied-ai.github.io

📣 Excited to announce SpaVLE: #NeurIPS2025 Workshop on Space in Vision, Language, and Embodied AI! 👉 …vision-language-embodied-ai.github.io 🦾Co-organized with an incredible team → @fredahshi · @maojiayuan · @DJiafei · @ManlingLi_ · David Hsu · @Kordjamshidi 🌌 Why Space & SpaVLE? We…

Can data owners & LM developers collaborate to build a strong shared model while each retaining data control? Introducing FlexOlmo💪, a mixture-of-experts LM enabling: • Flexible training on your local data without sharing it • Flexible inference to opt in/out your data…

Introducing FlexOlmo, a new paradigm for language model training that enables the co-development of AI through data collaboration. 🧵

Attention is most effective on pre-compressed data at the “right level of abstraction.”

I converted one of my favorite talks I've given over the past year into a blog post. "On the Tradeoffs of SSMs and Transformers" (or: tokens are bullshit) In a few days, we'll release what I believe is the next major advance for architectures.

I have been long arguing that a world model is NOT about generating videos, but IS about simulating all possibilities of the world to serve as a sandbox for general-purpose reasoning via thought-experiments. This paper proposes an architecture toward that arxiv.org/abs/2507.05169

Our study on pragmatic generation is accepted to #COLM2025! Missed the first COLM last year (no suitable ongoing project at the time😅). Heard it’s a great place to connect with LM folks, excited to join for round two finally.

Vision-Language Models (VLMs) can describe the environment, but can they refer within it? Our findings reveal a critical gap: VLMs fall short of pragmatic optimality. We identify 3 key failures of pragmatic competence in referring expression generation with VLMs: (1) cannot…

I know ACL and ICML are around the corner, but the only conference I’m planning to attend this month is #AX2025. But yeah, I did launch a job at the expo. 🤪

Just 5 days ago, I asked Xiang whether I should try using a distilled math reasoning model as the base for a VLM I’m training. He said no. I asked why. He said, “Stay tuned.” And now… here I am, reading this paper with the rest of you.

People are racing to push math reasoning performance in #LLMs—but have we really asked why? The common assumption is that improving math reasoning should transfer to broader capabilities in other domains. But is that actually true? In our study (arxiv.org/pdf/2507.00432), we…

💥💥BANG! Experience the future of gaming with our real-time world model for video games!🕹️🕹️ Not just PLAY—but CREATE! Introducing Mirage, the world’s first AI-native UGC game engine. Now featuring real-time playable demos of two games: 🏙️ GTA-style urban chaos 🏎️ Forza…

🚨Do frontier VLMs (o3, Gemini 2.5, Claude 3.5, Qwen…) actually learn an internal world model🌍? Surprisingly, the answer appears to be a hard NO—as revealed by our WM Atomic Benchmark⚛️. Even o3 struggles with the most basic, atomic-level questions: ❌Confuse triangles📐 with…

🤔 Have @OpenAI o3, Gemini 2.5, Claude 3.7 formed an internal world model to understand the physical world, or just align pixels with words? We introduce WM-ABench, the first systematic evaluation of VLMs as world models. Using a cognitively-inspired framework, we test 15 SOTA…

Excited to share WM-ABench, the first atomic and controlled benchmark of internal world models in VLMs, to appear in #ACL2025 Findings. I'm particularly proud of the cognitively-inspired conceptual framework that grounds our design. If you're curious about how we formalize…

🏗️ WM-ABench Specs: - 23 fine-grained evaluation dimensions across perception & prediction - 100,000+ instances from 6 diverse simulators (ThreeDWorld, ManiSkill 2 & 3, Physion, Carla, Habitat Lab) - Hard negatives via counterfactual states to prevent shortcuts - Human baselines…

Do Vision-Language Models Have Internal World Models? Towards an Atomic Evaluation "we introduce WM-ABench, a large-scale benchmark comprising 23 fine-grained evaluation dimensions across 6 diverse simulated environments with controlled counterfactual simulations. Through 660…

Are AI scientists already better than human researchers? We recruited 43 PhD students to spend 3 months executing research ideas proposed by an LLM agent vs human experts. Main finding: LLM ideas result in worse projects than human ideas.