Licheng Liu

@liulicheng10

3rd year Maths @ Imperial , intern @ NU MLL lab, https://lichengliu03.github.io/, applying for '26 fall phd

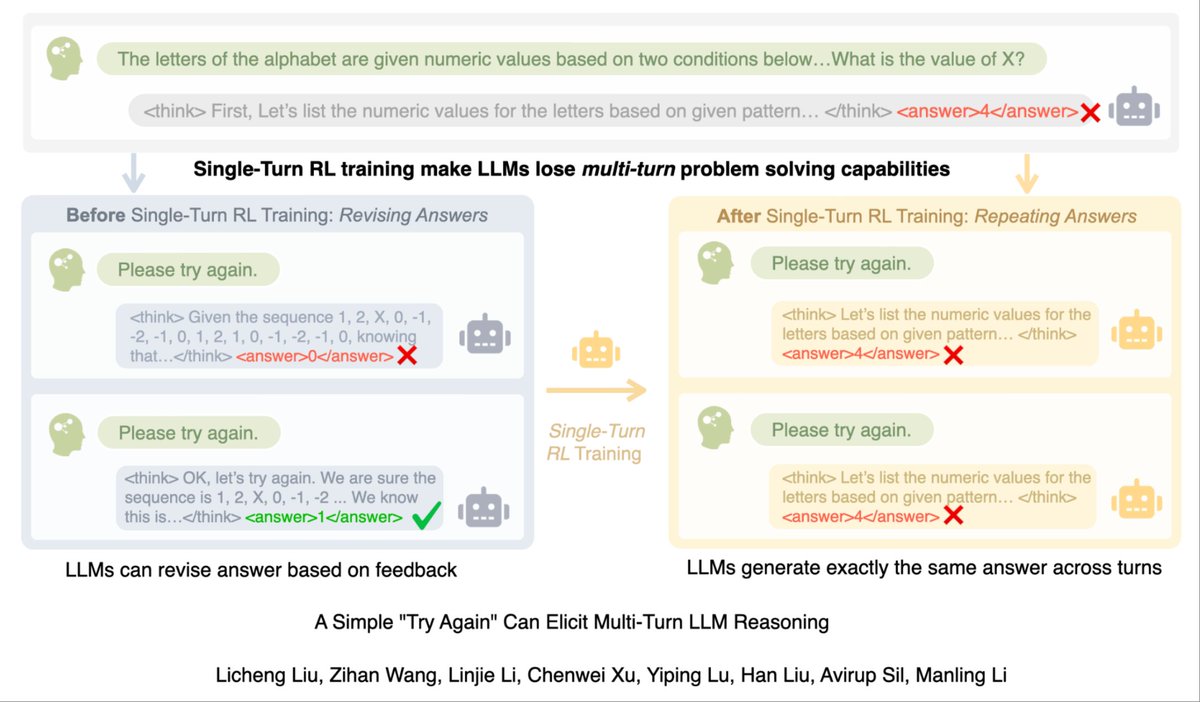

Will conversation history help reasoning? We found that when models mess up once, they often get stuck. Surprisingly, a simple “try again” fixes this — and boosts reasoning.🧵 Project Page: unary-feedback.github.io

optimization theorem: "assume a lipschitz constant L..." the lipschitz constant:

[1/9] We created a performant Lipschitz transformer by spectrally regulating the weights—without using activation stability tricks: no layer norm, QK norm, or logit softcapping. We think this may address a “root cause” of unstable training.

Heading to #cogsci2025 this week! I'm interested in language, learning, and computational modeling. I’ll be around from July 29 morning to August 3 evening—excited to chat and get advice as someone just starting out in research! 😃

Congratulations to my amazing advisor @ManlingLi_ !!!

Congratulations Manling Li for receiving an Honorable Mention for the ACL Best Dissertation Award! Super proud of you and very happy to see the award was announced by none other than our favorite, Prof. Kathleen McKeown- making it even more special! @ManlingLi_

The 20-80 law of RL training: If your theoretical training time is 2 hours, expect to spend 10. 80% of the time goes into debugging, tuning hyperparameters, rerunning due to random failures, or just figuring out why the model suddenly collapsed after step 1103.

Is your LLM getting stuck while training with RL for agentic/ reasoning tasks? Well, turns out that a simple intuition of “trying again” works surprisingly well for reinforcement learning of LLMs and for domain adaptation!! Joint work with @NorthwesternEng @uwcse

Will conversation history help reasoning? We found that when models mess up once, they often get stuck. Surprisingly, a simple “try again” fixes this — and boosts reasoning.🧵 Project Page: unary-feedback.github.io

Do you find RL makes the LLM reasoning more stubborn? Keep repeating the same answers? How to make multi-turn conversational history be helpful in RL training? We identify a simple "try again" feedback can boost reasoning and make RL training a conversational manner!…

Will conversation history help reasoning? We found that when models mess up once, they often get stuck. Surprisingly, a simple “try again” fixes this — and boosts reasoning.🧵 Project Page: unary-feedback.github.io

得道高僧也该与时俱进,投身大模型事业,每日坚持RLHF修炼,训练出一个与他们三观一致的赛博大脑。再由这个大脑来为迷茫的众生指引方向,在线积累赛博功德,实现数字时代的普渡众生。

Getting IMO gold is truly impressive but is it truly useful or, does the advanced reasoning ability(like solving problems that like only ten people can solve) of a LLM matter at all?

I replicated this result, that Grok focuses nearly entirely on finding out what Elon thinks in order to align with that, on a fresh Grok 4 chat with no custom instructions. grok.com/share/c2hhcmQt…

Grok 4 decides what it thinks about Israel/Palestine by searching for Elon's thoughts. Not a confidence booster in "maximally truth seeking" behavior. h/t @catehall. Screenshots are mine.