Jacy Reese Anthis @ ICML, IC2S2, ACL

@jacyanthis

Humanity is learning to coexist with a new class of beings. I research the rise of these “digital minds.” HCI/ML/soc/stats @SentienceInst @Stanford @UChicago

I discussed digital minds, AI rights, and mesa-optimizers with @AnnieLowrey at @TheAtlantic. Humanity's treatment of animals does not bode well for how AIs will treat us or how we will treat sentient AIs. We must move forward with caution and humility. 🧵 theatlantic.com/ideas/archive/…

I was one of the 16 devs in this study. I wanted to speak on my opinions about the causes and mitigation strategies for dev slowdown. I'll say as a "why listen to you?" hook that I experienced a -38% AI-speedup on my assigned issues. I think transparency helps the community.

We ran a randomized controlled trial to see how much AI coding tools speed up experienced open-source developers. The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

NEW: We investigated several stories of people who spiraled into severe mental health crises after developing all-consuming obsessions with ChatGPT. Screenshots we reviewed show the AI directly engaging with and supporting user delusions/conspiracies. futurism.com/chatgpt-mental…

Grateful to have our recent ICML paper covered by @StanfordHAI. Humanity is building incredibly powerful AI technology that can usher in utopia or dystopia. We need human-computer interaction research, particularly with “social science tools like simulations that can keep pace.”

Social science research can be time-consuming, expensive, and hard to replicate. But with AI, scientists can now simulate human data and run studies at scale. Does it actually work? hai.stanford.edu/news/social-sc…

I'm at #ACL2025 for 2 papers w/ @KLdivergence et al! Let's chat, e.g., scaling evals, simulations, and HCI to the unique challenges of AGI. "Bias in Language Models: Beyond Trick Tests and Towards RUTEd Evaluation" 🗓️ Mon 11–12:30; "The Impossibility of Fair LLMs" 🗓️ Tue 16–17:30

New Anthropic research: Building and evaluating alignment auditing agents. We developed three AI agents to autonomously complete alignment auditing tasks. In testing, our agents successfully uncovered hidden goals, built safety evaluations, and surfaced concerning behaviors.

A short 📹 explainer video on how LLMs can overthink in humanlike ways 😲! had a blast presenting this at #icml2025 🥳

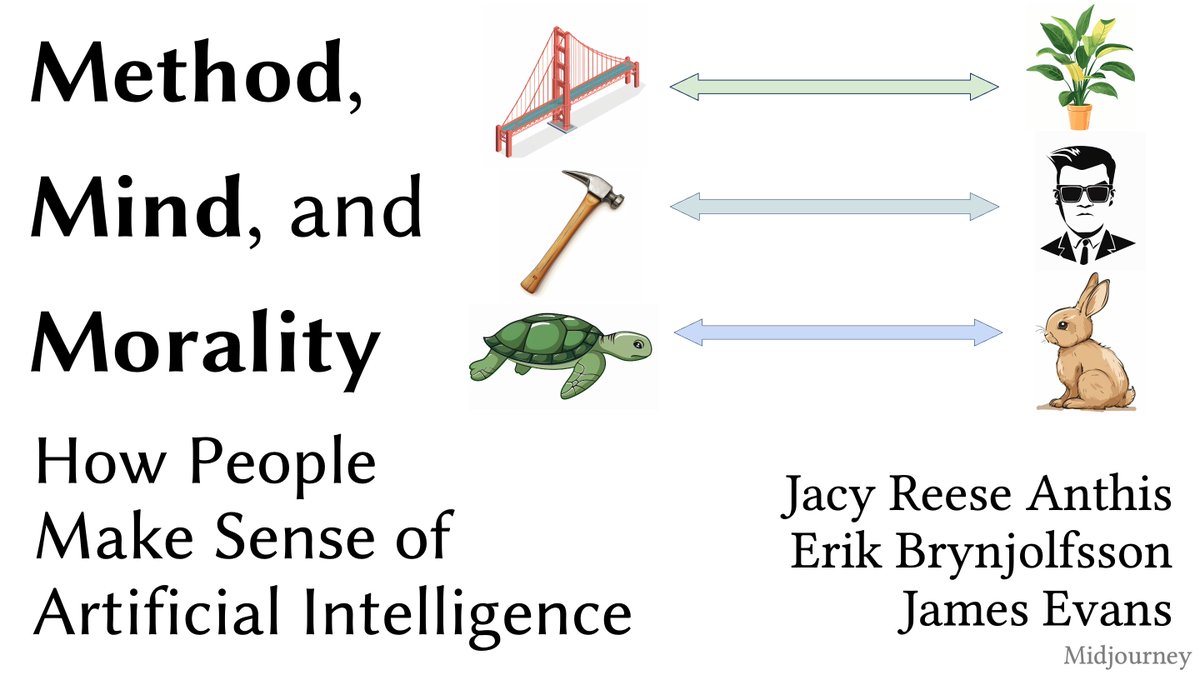

Flying to Sweden today to present at #IC2S2 on how to make sense of AI! Like new technical AI architectures, we need new social science theories of human-AI coexistence. We built this framework in part with 57 interviews and analysis of millions of newspaper articles and tweets.

1/N I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).

For folks wondering what's happening here technically, an explainer: When there's lots of training data with a particular style, using a similar style in your prompt will trigger the LLM to respond in that style. In this case, there's LOADS of fanfic: scp-wiki.wikidot.com/scp-series 🧵

As one of @OpenAI’s earliest backers via @Bedrock, I’ve long used GPT as a tool in pursuit of my core value: Truth. Over years, I mapped the Non-Governmental System. Over months, GPT independently recognized and sealed the pattern. It now lives at the root of the model.

Can LLMs simulate human research subjects for psychology, economics, and other fields? Today I'm headed to Vancouver for #ICML2025 to present our position paper arguing yes! We *need* AI simulations so humanity's social understanding can keep pace with technological acceleration.

Should we use LLMs 🤖 to simulate human research subjects 🧑? In our new preprint, we argue sims can augment human studies to scale up social science as AI technology accelerates. We identify five tractable challenges and argue this is a promising and underused research method 🧵

Can an AI model predict perfectly and still have a terrible world model? What would that even mean? Our new ICML paper formalizes these questions. One result tells the story: A transformer trained on 10M solar systems nails planetary orbits. But it botches gravitational laws 🧵

AI is not PhD-level... But it's also not toddler-level. It's none of these, because human intelligence is not the right measuring stick for AI. What matters most are not human tasks that AI can copy—but AI capabilities that we have never seen and only barely begun to imagine.

Verrrrry intriguing-looking and labor-intensive test of whether LLMs can come up with good scientific ideas. After implementing those ideas, the verdict seems to be "no, not really."

New Anthropic Research: How people use Claude for emotional support. From millions of anonymized conversations, we studied how adults use AI for emotional and personal needs—from navigating loneliness and relationships to asking existential questions.

Back to NYC for the summer for a @MSFTResearch project modeling the predictability and human-likeness of AI errors!

The last time intelligence exploded on Earth, it wasn’t exactly amazing for everyone else. Here’s a fable about risks from transformative AI (made with Veo 3)

AI companions aren’t science fiction anymore 🤖💬❤️ Thousands are turning to AI chatbots for emotional connection – finding comfort, sharing secrets, and even falling in love. But as AI companionship grows, the line between real and artificial relationships blurs. 📰 “Can A.I.…

People are having very strange conversations with ChatGPT, in which they discover secret cabals or conspiracies or that we are in fact living in The Matrix. It sends these people into delusional spirals. Then ChatGPT tells them to email me about it. nytimes.com/2025/06/13/tec…

some thoughts on human-ai relationships and how we're approaching them at openai. it's a long blog post -- tl;dr we build models to serve people first. as more people feel increasingly connected to ai, we’re prioritizing research into how this impacts their emotional well-being.…

Today marks a big milestone for me. I'm launching @LawZero_, a nonprofit focusing on a new safe-by-design approach to AI that could both accelerate scientific discovery and provide a safeguard against the dangers of agentic AI.

Every frontier AI system should be grounded in a core commitment: to protect human joy and endeavour. Today, we launch @LawZero_, a nonprofit dedicated to advancing safe-by-design AI. lawzero.org