Mahesh Sathiamoorthy

@madiator

Data curation, RL, post training. Open thoughts. Co-founder @bespokelabsai. Ex-GoogleDeepMind.

Very proud of this work and the team! Nvidia recently released Nemotron, which is a great open reasoning model. The OpenThinker team worked tirelessly and heroically and curated what's arguably the best reasoning data, and got the model to be better than Nemotron (and GPT-4.1).…

Announcing OpenThinker3-7B, the new SOTA open-data 7B reasoning model: improving over DeepSeek-R1-Distill-Qwen-7B by 33% on average over code, science, and math evals. We also release our dataset, OpenThoughts3-1.2M, which is the best open reasoning dataset across all data…

Everything is from scratch at Google. I mean even the hardware!

Same question but for the training stack: a fork of Megatron-LM is used by the Kimi folks, I think, but idk about other labs or how far that fork is from the original codebase. Another question is if you're starting a big lab rn, do you start from scratch or fork something like…

Interesting read

the openai IMO news hit me pretty heavy this weekend i'm still in the acute phase of the impact, i think i consider myself a professional mathematician (a characterization some actual professional mathematicians might take issue with, but my party my rules) and i don't think i…

which model are they going to drop? maximally truth seeking model?

Tomorrow should be a huge day for American AI. 🇺🇸

The Veo videos are so much fun. Someone should create a YouTube for Veo videos.

in case you missed it ;)

🆕 Releasing our entire RL + Reasoning track! featuring: • @willccbb, Prime Intellect • @GregKamradt, Arc Prize • @natolambert, AI2/Interconnects • @corbtt, OpenPipe • @achowdhery, Reflection • @ryanmart3n, Bespoke • @ChrSzegedy, Morph with special 3 hour workshop from:…

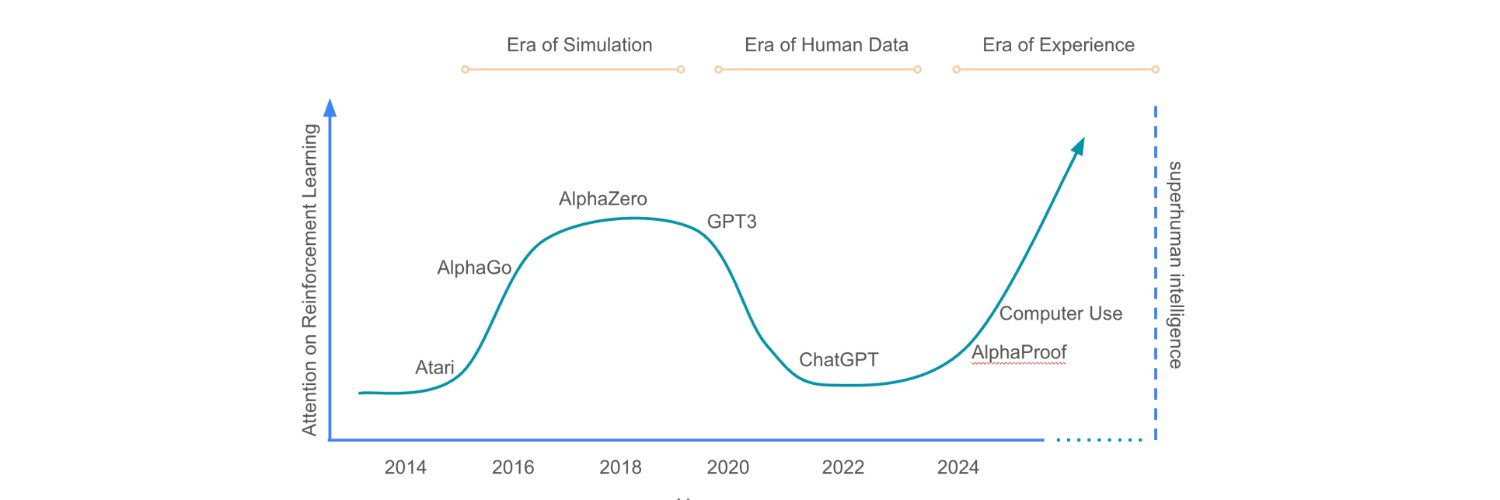

So it's time to start doing multi agent RL.

Sheryl (@sherylhsu02) was our first hire onto the multi-agent team. Within a few months of joining, she helped to make this possible. We're so lucky to have her on the team!

This is incredible and congrats to the team, but I don't know why half of twitter is surprised by this result.

1/N I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).

I know OpenAI has two employees, one called Rhythm and another called Lyric.

Open Thoughts delivers again. Congrats team for a small but powerful reasoning model. Writeup: open-thoughts.ai/blog/ot3_small

📢📢📢 Releasing OpenThinker3-1.5B, the top-performing SFT-only model at the 1B scale! 🚀 OpenThinker3-1.5B is a smaller version of our previous 7B model, trained on the same OpenThoughts3-1.2M dataset.

We are organizing a dinner today for researchers in RL and Data. There are a limited number of slots remaining. Please DM me to join.

Nice, good job Devin!

Cognition has signed a definitive agreement to acquire Windsurf. The acquisition includes Windsurf’s IP, product, trademark and brand, and strong business. Above all, it includes Windsurf’s world-class people, whom we’re privileged to welcome to our team. We are also honoring…

Are you doing RL and at #ICML? Let's chat. DM me if possible!

There are many algorithms for constructing pre-training data mixtures—which one should we use? Turns out: many of them fall under one framework, have similar issues, and can be improved with a straightforward modification. Introducing Aioli! 🧄 1/9

India just needs more traffic signals and more people who will follow those signals. There are unnecessary slowdowns because people go any which way at intersections.

There is no better explanation of reward hacking on the internet than this.