Leandro von Werra

@lvwerra

Head of research @huggingface

The Ultra-Scale Playbook: Training LLMs on GPU Clusters Learn how to train your own DeepSeek-V3 model using 5D parallelism, ZeRO, fast kernels, compute/comm overlap and bottlenecks with theory, interactive plots and 4000+ scaling experiments and audio! huggingface.co/spaces/nanotro…

SmolLM3-3B-8da4w: With #TorchAO & optimum-executorch, quantizing and exporting for mobile is a breeze. Now ready for on-device deployment with #ExecuTorch, running at 15 tokens/sec on Galaxy S22. 🔗 Model card with recipes + checkpoints: hubs.la/Q03yGyTN0 #EdgeAI #PyTorch

I'm in DC and it lives up to the hype. This Action Plan has everything: open source, evals, science of and with AI. The vibe shift on open source is momentous. I remember when. Congrats @sriramk @DavidSacks @mkratsios47 @deanwball

🇺🇸 Today is a day we have been working towards for six months. We are announcing America’s AI action plan putting us on the road to continued AI dominance. The three core themes: - Accelerate AI innovation - Build American AI infrastructure - Lead in international AI…

Many VLMs claim to process hours of video. But can they follow the story?🤔 Today, we introduce TimeScope: The benchmark that separates true temporal understanding from marketing hype. Let's see how much VLMs really understand!⏳

We've just released 100+ intermediate checkpoints and our training logs from SmolLM3-3B training. We hope this can be useful to researchers working on mechanistic interpretability, training dynamics, RL and other topics :) Training logs: -> Usual training loss (the gaps in the loss are due…

Beyond happy to announce that I'm joining 🤗 @huggingface as a #MachineLearningEngineer focused on #WebML!

You know CASP? The competition that AlphaFold won that changed the game for AI x bio? 🧬 Just dropped all the data from their last challenge on @huggingface! check it out ⤵️ huggingface.co/datasets/cgeor…

smol and not so smol models are trending together in perfect harmony

Personally, I really prefer casual online posts and discussions like this from engineers and researchers over super condensed papers. It's much more pleasant to read and understand the reasoning behind the decisions. Great job @Kimi_Moonshot team! zhihu.com/question/19271…

Excited to share SmolTalk2: the secret sauce of SmolLM3's post-training and dual reasoning capabilities. Ideal if you want to train models with think/no_think capabilities!

Introducing SmolTalk2: the dataset behind SmolLM3's dual reasoning. - mid-training → 5M samples - SFT data → 3M samples - preferences for APO → 500k samples It combines open datasets with new ones curated for strong think and no_think performance. hf.co/datasets/Huggi…

Will be in Paris next week to visit the Hugging Face HQ. Anybody around for coffee?

cute compute…❤️❤️❤️

Thrilled to finally share what we've been working on for months at @huggingface 🤝@pollenrobotics Our first robot: Reachy Mini A dream come true: cute and low priced, hackable yet easy to use, powered by open-source and the infinite community. Tiny price, small size, huge…

SmolLM3 has day-zero support in mlx-lm and it's blazing fast on an M4 Max:

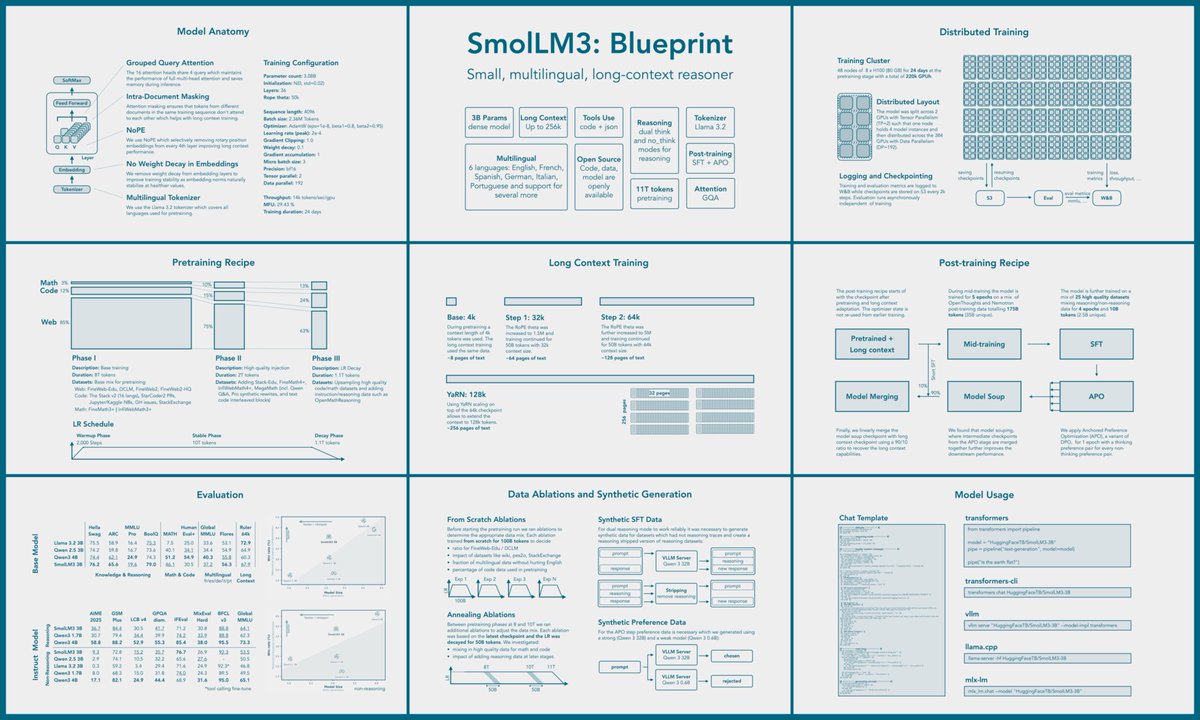

Introducing SmolLM3: a strong, smol reasoner! > SoTA 3B model > dual mode reasoning (think/no_think) > long context, up to 128k > multilingual: en, fr, es, de, it, pt > fully open source (data, code, recipes) huggingface.co/blog/smollm3

We’re releasing the top 3B model out there, with SOTA performance. It has dual mode reasoning (with or without think), extended long context up to 128k, and it’s multilingual with strong support for en, fr, es, de, it, pt. What more do you need? Oh yes, we’re also open-sourcing all…

Super excited to share SmolLM3, a new strong 3B model. SmolLM3 is fully open, we share the recipe, the dataset, the training codebase and much more! > Trained on 11T tokens on 384 H100s for 220k GPU hours > Supports long context up to 128k thanks to NoPE and intra-document masking >…

SmolLM3 is out - a smol, long context, multilingual reasoner! Along with the model we release the full engineering blueprint for building SoTA LLMs at that scale, so everybody can build on it! Check it out: hf.co/blog/smollm3

It’s easy to fine-tune small models w/ RL to outperform foundation models on vertical tasks. We’re open sourcing Osmosis-Apply-1.7B: a small model that merges code (similar to Cursor’s instant apply) better than foundation models. Links to download and try out the model below!