Edward Hughes

@edwardfhughes

#OpenEndedness. Staff Research Engineer @GoogleDeepMind, Visiting Fellow @LSEnews, Advisor @coop_ai, Choral Director @GodwineChoir. Views my own.

The automation of innovation is within reach! Delighted that my @raais talk is now available for anyone to watch, alongside an excellent blogpost summary by the inimitable @nathanbenaich.

"2025 is the year of open-endedness." At @raais, @edwardfhughes argued that we’re entering a new phase in the evolution of AI: one where open-endedness becomes the central organizing principle. Not just solving problems, but defining them. Not just predicting the next token,…

Well said. Let's build a society where open-ended knowledge creation, not the raw popularity of content, drives value.

If you’re in media, this is worth a watch. Cloudflare handles ≈20% of global traffic, so when CEO Matthew Prince warns at Cannes that AI bots are reshaping the web, publishers need to adapt or risk being left behind.

We’re training AI on everything that we know, but what about things that we don’t know? At #ICML2025, the EXAIT Workshop sparked a crucial conversation: as AI systems grow more powerful, they're relying less on genuine exploration and more on curated human data. This shortcut…

Our benchmarks test knowledge and skill, but what matters is exploration and discovery. Same disconnect is true for our education system.

Whatever happens in the future of AI, I think it is unlikely that the skill of breaking down big problems into smaller, solvable pieces will ever become obsolete.

I worry that so much discussion of AI risks and alignment overlooks the rather large elephant in the room: creativity and open-endedness. Policy makers and gatekeepers need to understand two competing forces that no one seems to talk about: (1) there is a massive economic…

Wow - a much more intriguing interweaving of mathematics, music and ritual than I have ever managed! What a wonderful way to combine these passions - we should start a London chapter.

There will be much fanfare at our next Proof Liturgy. As always, all math curious are welcome!

Excellent open-ended work!

we're introducing a new self-improving evolutionary search framework and applying it to get good performance on ARC-1! simple idea: search with llms, hindsight relabel solutions, finetune the llm, and repeat. can be done with or without ground truth labels (test time training)

New position paper! "Machine Learning Conferences Should Establish a “Refutations and Critiques” Track". Joint w/ @sanmikoyejo @JoshuaK92829 @yegordb @bremen79 @koustuvsinha @in4dmatics @JesseDodge @suchenzang @BrandoHablando @MGerstgrasser @is_h_a @ObbadElyas 1/6

2025 is the year of open-endedness!

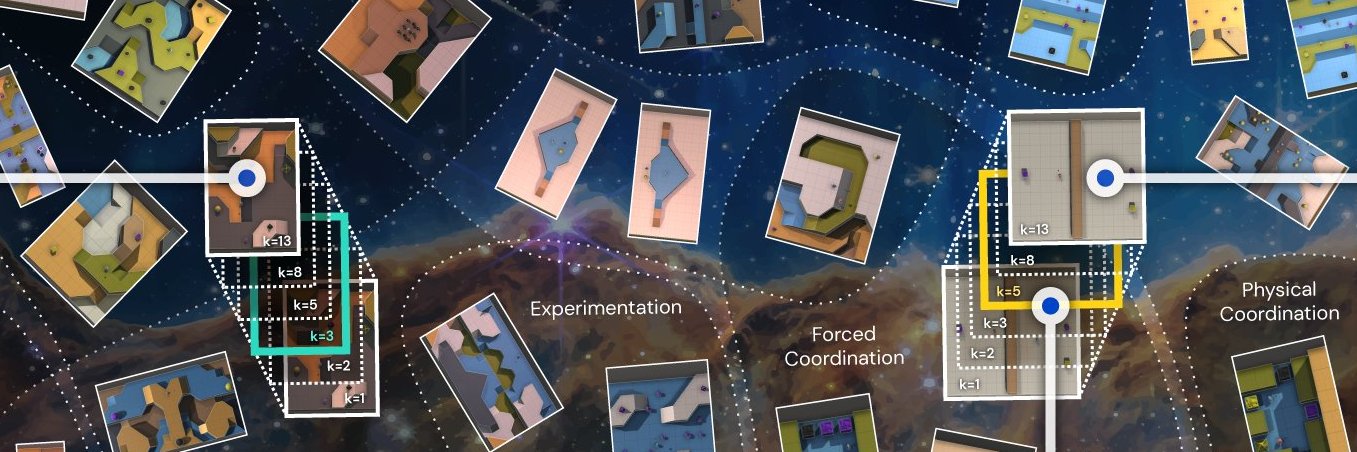

Most AI systems today follow the same predictable pattern: they're built for specific tasks and optimized for objectives rather than exploration. Meanwhile, humans are an open-ended species—driven by curiosity and constantly questioning the unknown. From inventing new musical…

Human ideation beats AI ideation when measured on execution outcomes: arxiv.org/abs/2506.20803. There's a clear path to fixing this - work to be done!

As @ShunyuYao12 recently wrote, the second half of AI will be dominated by automatically *creating* problems. Honoured to have helped advise @Dahoas1 in starting to address that challenge, leveraging QD algorithms. Hints of recursive self-improvement too if you look carefully!

Excited to announce the final paper of my PhD!📢 A crucial piece of SFT/RL training is the availability of high-quality problem-solution data (Q, A). But what to do for difficult tasks where such data is scarce/hard to generate with SOTA models? Read on to find out

Okay - who wants to write the meta-learning paper: The Illusion of Thinking of Thinking? Only half joking.

Now the 3rd paper comes on this 🤯 "The Illusion of the Illusion of the Illusion of Thinking" 📌1st original Paper from Apple, concludes that large reasoning models reach a complexity point where accuracy collapses to zero and even spend fewer thinking tokens, revealing hard…

Hypothesis: Humans succeed not because we make fewer errors, but because we are better at correcting them early. In other words, aiming for error correction in few-shot is likely to be more realistic and effective than aiming for vanishingly low error in zero-shot.

Why can AIs code for 1h but not 10h? A simple explanation: if there's a 10% chance of error per 10min step (say), the success rate is: 1h: 53%, 4h: 8%, 10h: 0.2%. @tobyordoxford has tested this 'constant error rate' theory and shown it's a good fit for the data chance of…
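
The arithmetic in the post above can be checked with a short sketch. It assumes, as the post does, an independent 10% chance of error in every 10-minute step and that a task succeeds only if every step succeeds. The function name `success_rate` and its parameters are illustrative, not taken from any cited work.

```python
def success_rate(hours: float, step_error: float = 0.10, step_minutes: float = 10.0) -> float:
    """Probability of finishing a task with no error in any step.

    Assumes each step fails independently with probability `step_error`
    (the 'constant error rate' assumption from the post).
    """
    steps = hours * 60.0 / step_minutes  # number of 10-minute steps in the task
    return (1.0 - step_error) ** steps   # every step must succeed


if __name__ == "__main__":
    for hours in (1, 4, 10):
        print(f"{hours}h: {success_rate(hours):.2%}")
```

Under these assumptions the success rate is about 53% for 1h (6 steps), about 8% for 4h (24 steps) and about 0.18% for 10h (60 steps): the decay is exponential in task length, which is why long tasks fail so much more often than short ones.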

a blueprint for automating innovation @edwardfhughes

It’s about time the UK had a pro-immigration, pro-aspiration, pro-entrepreneurship, pro-diversity, pro-education, pro-Europe political party.

bbc.co.uk/news/articles/… Utterly ridiculous on two fronts. (1) This is means tested on income not on assets, which means that a significant number of pensioners with ~hundreds of thousands in assets will get handouts (source FCA Research Note 2019). (2) The threshold is very close…

Open-endedness at GDM intensifies 🚀! Enormously excited that you have joined the team @robertarail. Once again I reflect on my good fortune in working with such creative, ambitious, collaborative and inspiring colleagues. Welcome!

Some personal news: I joined Google DeepMind in @_rockt's uber talented Open-Endedness team. I couldn’t be more excited for what we’re cooking. AI is the least open-ended it will ever be. Meta, it’s been a blast, an honor, and a privilege. I’m very grateful for the freedom and…