Sergey Edunov

@edunov

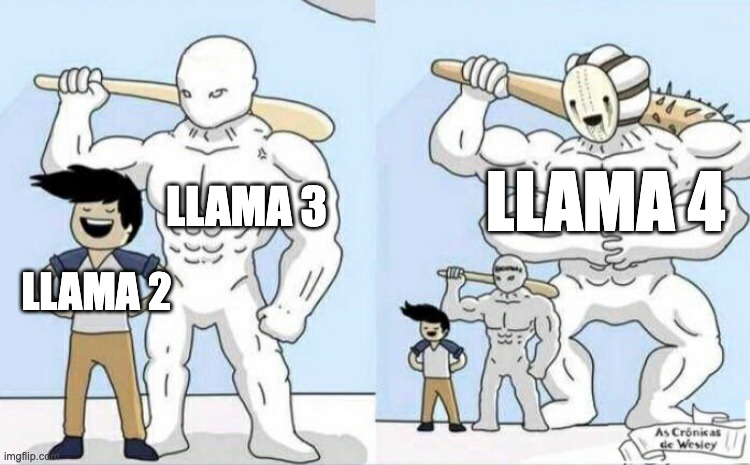

Director of Engineering @ GenAI, Meta. Pre-training Llamas: 2, 3, 4...

Great step in the right direction. It is easy to get perfect scores on Needle In A Haystack; it's hard to also maintain model performance. An all-green rectangle doesn't mean much if the model isn't good. Looking forward to seeing more short context benchmarks reported: MMLU, GSM8K…

Announcing Llama-3-Giraffe-70B Instruct 128k context length from Abacus AI!! Increasing the context length on Llama-3 has been challenging. Our open-source friends at Gradient AI have been dropping some great variants and working on the same problem. Model performance (based on…

Super excited about these results

Our Llama 4’s industry leading 10M+ multimodal context length (20+ hours of video) has been a wild ride. The iRoPE architecture I’d been working on helped a bit with the long-term infinite context goal toward AGI. Huge thanks to my incredible teammates! 🚀Llama 4 Scout 🔹17B…

Fascinating how the entire LLM industry is chasing Elo scores on lmsys. Just recently it was the Open LLM Leaderboard and MMLU, and those who remember the days of GLUE and SuperGLUE are still around. Meanwhile Goodhart's law never gets old: "When a measure becomes a target, it ceases to be…

In our past lives we did machine translation 😅 Happy to share that this work is now published in Nature.

It has been a long team journey, and our NLLB work is now published in Nature. Proud of having been part of successfully scaling translation to 200 languages: nature.com/articles/s4158…

MMLU is particularly tricky:
- how you prompt matters a lot
- changes in the order of answers in the 5-shot examples matter
- whether you use logits or model generations matters
- whether you micro-average or macro-average matters
- it is also quite noisy

It all works out okay…
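To make the micro- versus macro-averaging point concrete, here is a minimal sketch on made-up data. The subject names, counts, and the helper `micro_macro_accuracy` are hypothetical and are not taken from any particular evaluation harness. Micro-averaging pools every question together, while macro-averaging first computes accuracy per subject and then averages those, so a small subject weighs as much as a large one.

```python
from collections import defaultdict


def micro_macro_accuracy(results):
    """Return (micro, macro) accuracy for (subject, is_correct) pairs.

    Hypothetical helper for illustration only.
    """
    per_subject = defaultdict(lambda: [0, 0])  # subject -> [correct, total]
    for subject, is_correct in results:
        per_subject[subject][0] += int(is_correct)
        per_subject[subject][1] += 1

    # Micro: pool all questions, so large subjects dominate.
    total_correct = sum(c for c, _ in per_subject.values())
    total = sum(n for _, n in per_subject.values())
    micro = total_correct / total

    # Macro: average the per-subject accuracies, each subject counts once.
    macro = sum(c / n for c, n in per_subject.values()) / len(per_subject)
    return micro, macro


# Toy data (assumed): one large subject at 90/100, one small subject at 2/10.
results = (
    [("big_subject", True)] * 90
    + [("big_subject", False)] * 10
    + [("small_subject", True)] * 2
    + [("small_subject", False)] * 8
)

micro, macro = micro_macro_accuracy(results)
print(f"micro={micro:.3f} macro={macro:.3f}")
```

On this toy data the same set of predictions gives a micro-average of 92/110 and a macro-average of 0.55, which is why a reported score is hard to interpret without knowing which average was used.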

How should you prompt an LM for MMLU? (You could say MMLU is contaminated/saturated and we should just use vibes, but that’s a separate conversation. As long as people are bragging about their MMLU scores, we should make sure we know what these scores mean). Two extremes:

If you want to compare different models, benchmarks suck. Goodhart's law, "when a measure becomes a target, it ceases to be a good measure", has never failed, and LLM providers are incentivized to report good numbers. What are benchmarks good for? To iterate and improve…

Funny to see how most benchmarks overrate Mistral 7B compared to Llama 3 8B. ARC, HellaSwag, AGIEval, BigBench, MT-bench, etc. The only benchmark that doesn't seem affected is... MMLU. GSM8K is harder to beat but still doable.

I'll leapfrog to the Pareto frontier right away 😅 Seriously though, should we start plotting short context vs long context numbers on the same chart? What would that look like?

Congrats @Teknium1 and the team, amazing work! I've been waiting for it 😉

Announcing Hermes 2 Pro on Llama-3 8B! Nous Research's first Llama-3 based model is now available on HuggingFace. Hermes Pro comes with Function Calling and Structured Output capabilities, and the Llama-3 version now uses dedicated tokens for tool call parsing tags, to make…

How come the long context adaptations of Llama 3 that are being released only report performance on long context benchmarks? Do we assume that context extension happens for free without impacting model performance? Show us your MMLU, GSM8K, ARC-C and DROP!

There are many ways a very large and powerful model can be useful, even if no one can run it locally today: Distillation -- think about all recent results people show distilling GPT-4 outputs and training smaller models on those, how much more can be done if the teacher model…

I really love Meta’s open-source focus, but I doubt many of us will leverage such big models. None of us will run Llama3 400B locally 😅 Using APIs stays the way most of us will interact and work with LLMs. But Llama-3 8B or even 70B is quite cool, haha! Still, open sourcing…

So so so excited about these results

Moreover, we observe even stronger performance in the English category, where Llama 3's ranking jumps to ~1st place with GPT-4-Turbo! It consistently performs strongly against top models (see win-rate matrix) by human preference. It's been optimized for dialogue scenario with large…

Can't overstate how much effort the team has put into making Llama 3 happen, it was a wild ride, but totally worth it!

Feeling incredibly grateful for the entire team's dedication and hard work on the release of #Llama V3. It was a journey of long hours and immense effort, but we did it! Excited to finally put this in the hands of our amazing open source community.

People seem to over-index on the 15T number after Llama 3. While the number matters, what is even more important is the quality and diversity of those tokens. If there were a good way to measure those, that would be an impressive result to report.

Llama3 was trained on 15 trillion tokens of public data. But where can you find such datasets and recipes?? Here comes the first release of 🍷Fineweb. A high quality large scale filtered web dataset out-performing all current datasets of its scale. We trained 200+ ablation…

Frontier-level Tool Calling now live on @GroqInc, powered by Llama 3 🫡 Outperforms GPT-4 Turbo 2024-04-09 and Claude 3 Opus (FC version) in multiple subcategories, at 300 tokens/s 🚀 I've personally been working on this feature, and man, the new Llama is good!