Hongcheng Gao

@GaoHongcheng

CS MSc Student @UCAS1978 | Prev. @Tsinghuanlp @MSFTResearch | focus on LLM and VLM

Thanks @_akhaliq for sharing our work!

Pixels, Patterns, but No Poetry: To See The World like Humans

Thanks @TheTuringPost for sharing our work!

Do models actually "see" the world like humans do? A recent study from @UCAS1978 and collaborators shows that (surprisingly!) even top AI multimodal models fail at simple visual tasks. ➡️ Researchers shifted focus from reasoning to perception and built a new benchmark - the…

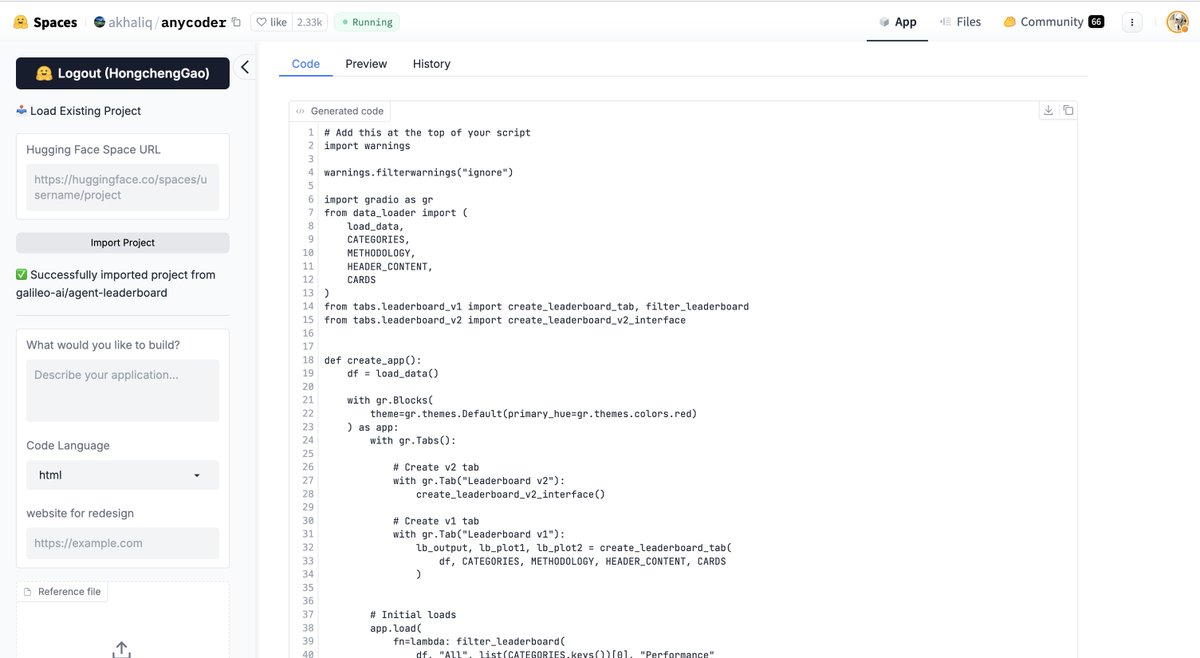

Excellent work, Anycoder—a free, open-source vibe-coding app created by @_akhaliq.

The Turing Eye Test features 4 unique tasks (HiddenText, 3DCaptcha, ColorBlind, ChineseLigatures) that current MLLMs consistently fail. The findings suggest a gap in visual generalization, not reasoning. Dive into the paper: huggingface.co/papers/2507.16… Explore the dataset:…

Can AI *truly* see the world like us? A new paper introduces the Turing Eye Test, a benchmark that reveals a fundamental 'vision blind spot' in even the most advanced multimodal LLMs. They catastrophically fail on tasks humans solve intuitively!

🚀 I'm looking for full-time research scientist jobs on foundation models! I study pre-training and post-training of foundation models, and LLM-based coding agents. The figure highlights my research/publications. Please DM me if there is any good fit! Highly appreciated!

🙋‍♂️ Can RL training address model weaknesses without external distillation? 🚀 Please check out our latest work on RL for LLM reasoning! 💯 TL;DR: We propose augmenting RL training with synthetic problems that target the model's reasoning weaknesses. 📊 Qwen2.5-32B: 42.9 → SwS-32B: 68.4

Hi everyone! The field of LLM-based reasoning has seen tremendous progress and rapid development over the past few months. We've updated our survey to include these exciting advances, and it now covers over 500 papers! "From System 1 to System 2: A Survey of Reasoning Large Language Models"

Our team from Microsoft Research Asia, UCLA, the Chinese Academy of Sciences, and Tsinghua University has released a paper, “TL;DR: Too Long, Do Re-weighting for Efficient LLM Reasoning Compression”, proposing an innovative training method that effectively compresses the model's reasoning.

3. Exploring Hallucination of Large Multimodal Models in Video Understanding: Benchmark, Analysis and Mitigation 🔑 Keywords: hallucination, multimodal models, video understanding, reasoning capabilities, accuracy improvement 💡 Category: Multi-Modal Learning 🌟 Research…

🔥 Meta-Unlearning on Diffusion Models: Preventing Relearning Unlearned Concepts 🔥 Even after unlearning, models can be maliciously finetuned (FT) to relearn removed concepts. Our meta-unlearning ensures they stay forgotten. arxiv.org/pdf/2410.12777

🔒 NLP Security Benchmark 🌶️ Why Should Adversarial Perturbations be Imperceptible? Rethink the Research Paradigm in Adversarial NLP paper: arxiv.org/abs/2210.10683 code: github.com/thunlp/Advbench "We collect, process, and release a security datasets collection Advbench"…

Evaluating the Robustness of Text-to-image Diffusion Models against Real-world Attacks. arxiv.org/abs/2306.13103

Excited to introduce our new preprint “Revisiting Out-of-distribution Robustness in NLP: Benchmark, Analysis, and LLMs Evaluations”. paper: arxiv.org/pdf/2306.04618… code: github.com/lifan-yuan/OOD…

Many scientific problems hinge on finding interpretable formulas that fit data, but neural networks are the exact opposite! Check out our recent work that makes neural networks modular and interpretable. If you have interesting datasets at hand, we're happy to collaborate!

🚀📊 Exciting GenAI eval metric! Joint w. UW & UCSC. #LLMScore leads in T2I evaluation. It provides multi-granularity compositionality and correlates well with human judgments. Object-grounded dense descriptions by LLMs can pave the future for automated T2I metrics. 🖼️🤖 #NLProc

🚀 Excited to release #LLMScore, an object-centric description grounded #LLM, capable of following diverse instructions to produce the best human-correlated score with rationale for text-to-image synthesis evaluation. 🧵6 📜 arxiv.org/abs/2305.11116 🔗 github.com/YujieLu10/LLMS…