Ziming Liu

@ZimingLiu11

PhD student@MIT, AI for Physics/Science, Science of Intelligence & Interpretability for Science

MLPs are so foundational, but are there alternatives? MLPs place activation functions on neurons, but can we instead place (learnable) activation functions on weights? Yes, we KAN! We propose Kolmogorov-Arnold Networks (KAN), which are more accurate and interpretable than MLPs.🧵
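To make "activation functions on weights" concrete, here is a minimal NumPy sketch of a single KAN-style layer. It is an illustration under stated assumptions, not the authors' implementation. Each edge from input i to output j carries its own learnable 1-D function, and each output node only sums its incoming edges. The edge functions are built from fixed Gaussian bumps, which stand in for the B-spline parametrization used in the KAN paper. The class name `ToyKANLayer` and all of its parameters are hypothetical, and only the forward pass is shown.

```python
import numpy as np


class ToyKANLayer:
    """Toy KAN-style layer: one learnable 1-D function per edge (input i -> output j).

    Assumption: each edge function is a weighted sum of fixed Gaussian bumps
    (an RBF basis), used here in place of the B-splines from the KAN paper.
    """

    def __init__(self, in_dim, out_dim, num_basis=8, x_min=-1.0, x_max=1.0, seed=0):
        rng = np.random.default_rng(seed)
        self.centers = np.linspace(x_min, x_max, num_basis)  # bump centers, shape (K,)
        self.width = (x_max - x_min) / (num_basis - 1)        # bump width = grid spacing
        # Learnable coefficients: one length-K vector per edge, shape (in, out, K)
        self.coef = rng.normal(scale=0.1, size=(in_dim, out_dim, num_basis))

    def basis(self, x):
        # x: (batch, in) -> (batch, in, K) Gaussian bump activations
        return np.exp(-((x[..., None] - self.centers) / self.width) ** 2)

    def edge_functions(self, x):
        # phi[b, i, j] = sum_k coef[i, j, k] * basis_k(x[b, i])
        return np.einsum("bik,ijk->bij", self.basis(x), self.coef)

    def __call__(self, x):
        # Nodes apply no fixed activation; they just sum their incoming edge outputs.
        return self.edge_functions(x).sum(axis=1)  # (batch, out)


layer = ToyKANLayer(in_dim=3, out_dim=2)
y = layer(np.array([[0.1, -0.5, 0.9]]))  # shape (1, 2)
```

In an MLP, the same input would go through a weight matrix and then a fixed nonlinearity on each node. Here the nonlinearity itself is what training would adjust, with one function per edge.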

Check out our new paper on using AI to make progress on the Millennium problem of fluid blowup!

Can AI help solve a Millennium Prize math problem in fluid dynamics? We take an important step in this direction in our recent paper with physics-informed learning: High-Precision PINNs in Unbounded Domains: Application to Singularity Formulation in PDEs We develop a modular and a…

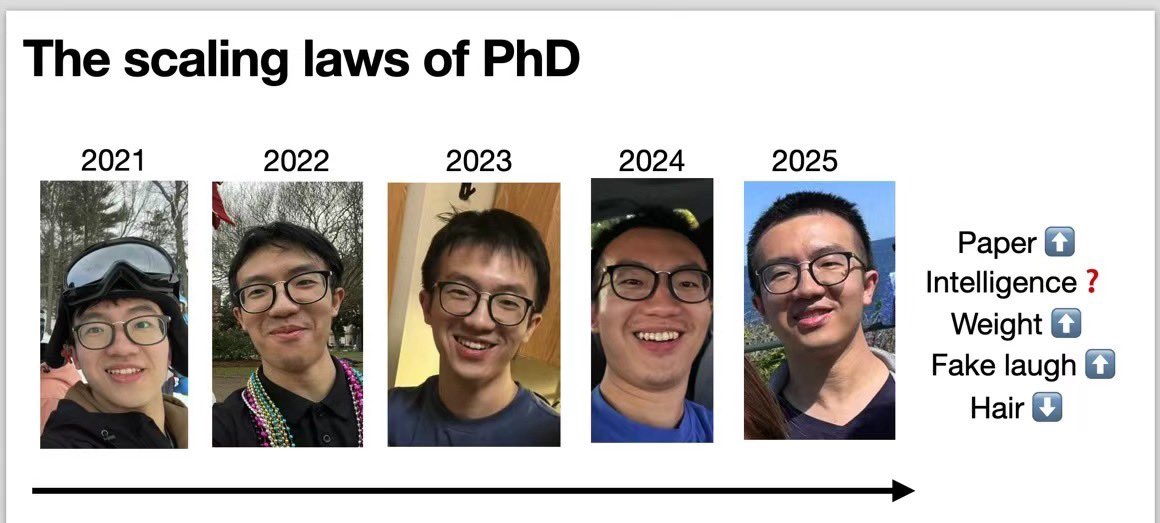

I’ve defended my PhD thesis! 🥳 Next step: I’m excited to join @AToliasLab at @Stanford as a postdoc. Looking forward to meeting old and new friends in CA!

Today, the most competent AI systems in almost *any* domain (math, coding, etc.) are broadly knowledgeable across almost *every* domain. Does it have to be this way, or can we create truly narrow AI systems? In a new preprint, we explore some questions relevant to this goal...

🚀 Generative AI Is the Next Quantum Leap for Autonomous Driving: Here's the Hitchhiker's Guide You've Been Waiting For! 🚀 We're thrilled to share our most comprehensive, 128-page…

Superposition and neural scaling laws are two amazing phenomena in language models. Our new work shows that they are two sides of the same coin! In practice, one can control scaling by controlling superposition via a "negative" weight decay, which is kinda crazy :-)

Superposition means that models represent more features than they have dimensions, which is true for LLMs since there are too many things to represent in language. We find that superposition makes the loss fall as a power law in model width, which produces the observed neural scaling law. (1/n)
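As a toy illustration of why packing features into fewer dimensions can give a power law in width (this is not the paper's model or experiment), the sketch below assigns `n_features` random unit directions in a `width`-dimensional space, with more features than dimensions, and measures their average squared overlap. For uniformly random unit vectors this overlap has expected value exactly 1/width, so the interference between features shrinks as a power law as the width grows. The function name and the random-direction setup are assumptions made for illustration.

```python
import numpy as np


def mean_squared_overlap(n_features, width, seed=0):
    """Average squared overlap between distinct random unit feature directions.

    Hypothetical toy setup: features get random directions in R^width,
    with n_features > width (i.e. the features are in superposition).
    """
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_features, width))
    W /= np.linalg.norm(W, axis=1, keepdims=True)      # each row is a unit vector
    G = W @ W.T                                        # Gram matrix of pairwise overlaps
    off_diag = G[~np.eye(n_features, dtype=bool)]      # drop self-overlaps (all equal 1)
    return float(np.mean(off_diag ** 2))


for m in (16, 64, 256):
    # Compare the measured interference with the 1/m prediction.
    print(m, mean_squared_overlap(n_features=1000, width=m), 1 / m)
```

Doubling the width roughly halves the measured interference, which is the kind of width-dependent power law the thread describes.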

It's a real shame that ICML has decided to automatically reject accepted papers if no author can attend ICML. A top conference paper is a significant boost to early career researchers, exactly the people least likely to be able to afford to go to a conference in Vancouver.

Excited to present our #ICLR2025 poster SR4MDL (iclr.cc/virtual/2025/p…).🥳🥳 Happy to chat about symbolic regression and AI4Science! 🗓Thu, Apr 24 | 3–5:30 p.m. 📍Hall 3 + Hall 2B | Poster #464 🔗iclr.cc/virtual/2025/p… Try our demo game!👉game.yumeow.site:10086