Alham Fikri Aji

@AlhamFikri

https://bsky.app/profile/afaji.bsky.social Faculty at @MBZUAI, @MonashIndonesia. Visiting research scientist @Google

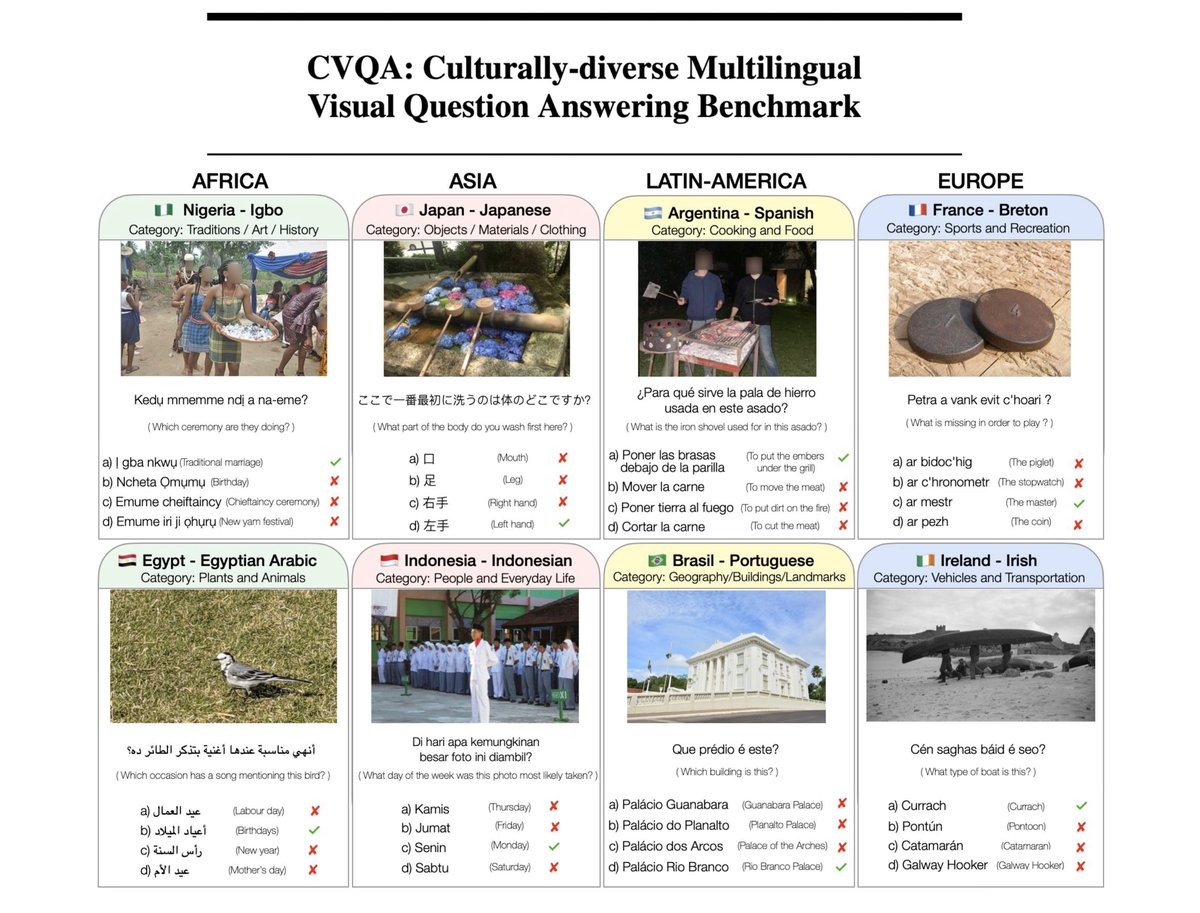

🎉Happy to share our recent collaborative effort on building a culturally diverse, multilingual visual QA dataset! CVQA consists of over 9,000 questions across 28 countries, covering 26 languages (with more to be added!) 🌐cvqa-benchmark.org 📜arxiv.org/pdf/2406.05967

🎉Excited to share new work with undergrads from @FASILKOM_UI Multilingual interpretability is often done at the neuron level; but they are polysemantic We show that using a self-autoencoder first yields more monosemantic, interpretable features More: arxiv.org/pdf/2507.11230

is there a superhero name that actually sounds like good name to give to a child?

is there a superhero name that actually sounds like good name to give to a child?

Personal take: With AI handles more of our thinking, cognitive decline could become a concern We may soon see a rise in brain gyms or mental fitness businesses designed to keep our minds sharp

This is a global phenomenon. With AI, chatbots and brainrot we are essentially going to a situation where anything with any significant cognitive load is going to be offloaded to machines. If you wish to improve your cognitive load capabilities then I suggest the following: 1.…

I'll be hiring a couple of Ph.D. students at CMU (via LTI or MLD) in the upcoming cycle! If you are interested in joining my group, please read the FAQ before reaching out to me via email :) docs.google.com/document/d/12V…

📢New dataset alert! A new long-form video benchmark assessing multimodal LLMs' understanding of Theory of Mind in realistic social interactions

Excited to finally share MOMENTS!! A new human-annotated benchmark to evaluate Theory of Mind in multimodal LLMs using long-form videos with real human actors. 📽️ 2.3K+ MCQA items from 168 short films 🧠 Tests 7 different ToM abilities 🔗 arxiv.org/abs/2507.04415

🚨 Deadline Extended 🚨 The MELT Workshop Track submission deadline is now June 30, 2025 (AoE). We still welcome new & unpublished work: abstracts (≤2 p) | shorts (≤5 p) | longs (≤9 p) 📌 Non-archival 🔗 Submit here → openreview.net/group?id=colmw… #MELTWorkshop2025 #COLM2025

Supposedly 87% of Indonesian high school graduates have math skills equivalent to a 3rd grader This will probably get worse in the coming years, with more students relying on LLMs to do their homework without actually learning anything

Halah. Baru membaca. Kemampuan berhitung orang Indonesia lebih hancur lagi. 87% anak lulusan SMA memiliki kemampuan matematika setara anak kelas 3 SD, atau dibawahnya. Kemampuan berhitung yang hanya lulusan SMP dan SD lebih lebih hancur lagi.

Working on multilingual and culturally-aware NLP and want to attend @COLM_conf? Consider submitting to the MELT Workshop 2025! Submissions are non-archival

🚨 Call for Submissions – Melt Workshop @COLM_conf 🌍 We invite papers on regionally grounded, culturally aware language technologies. 🛠️ Two tracks: — Workshop Track — Conference Track Details below ⬇️ #MeltWorkshop2025

There are many interesting questions How people anthropomorphize LLMs, correlation w/ their individual traits, demographics, LLM understanding, perceived trust, etc2. just a random thought, but this whole AI-LLM interaction thing is fascinating

🤔Ever wonder why LLMs give inconsistent answers in different languages? In our paper, we identify two failure points in the multilingual factual recall process and propose fixes that guide LLMs to the "right path." This can boost performance by 35% in the weakest language! 📈

🧵 Multilingual safety training/eval is now standard practice, but a critical question remains: Is multilingual safety actually solved? Our new survey with @Cohere_Labs answers this and dives deep into: - Language gap in safety research - Future priority areas Thread 👇

Research isn’t always about chasing SOTA. It’s about understanding why things work (or don’t) and pushing the boundaries of our knowledge Negative results like this are still valuable and essential for the community Looking forward to the next banger!

sorry for the late update. I bring disappointing news. softpick does NOT scale to larger models. overall training loss and benchmark results are worse than softmax on our 1.8B parameter models. we have updated the preprint on arxiv: arxiv.org/abs/2504.20966

Amidst the evaluation/reproducibility crisis for reasoning LLMs, it's great to see *concurrent independent work (with different models & benchmarks) aligns with our findings*! We reported the same fundamental trade-off: language forcing leads to ✅ compliance, ❌ accuracy!

[1/]💡New Paper Large reasoning models (LRMs) are strong in English — but how well do they reason in your language? Our latest work uncovers their limitation and a clear trade-off: Controlling Thinking Trace Language Comes at the Cost of Accuracy 📄Link: arxiv.org/abs/2505.22888

Happy to share that we have 8 papers accepted at ACL! Grateful for the amazing team of students and collaborators. Hope to see you in Vienna soon!

Thanks for covering our work!

Can an AI trained in English solve math problems in other languages without extra training?

Multilingual vision is important! We have developed a multilingual VQA benchmark almost a year ago - CVQA arxiv.org/abs/2406.05967 in NeurIPS.

abs: arxiv.org/abs/2505.08751 model: huggingface.co/CohereLabs/aya… bench: huggingface.co/datasets/Coher…

After publishing 4 EMNLP papers last year, 2 as first author, and now this. His citation has grown 10x in the past year. He's on track to surpass 1,000,000 citations in 5 years! An amazing future lies ahead for Farid.

got a bit emotional as this is my first ever ACL main paper. here’s to more to come!

Reasoning language models are primarily trained on English data, but do they generalize well to multilingual settings in various domains? We show that test-time scaling can improve their zero-shot crosslingual reasoning performance! 🔥