zed

@zmkzmkz

#1 paperclip maximizer fan, occasionally on x-games mode. I really, really like watching loss graphs go down

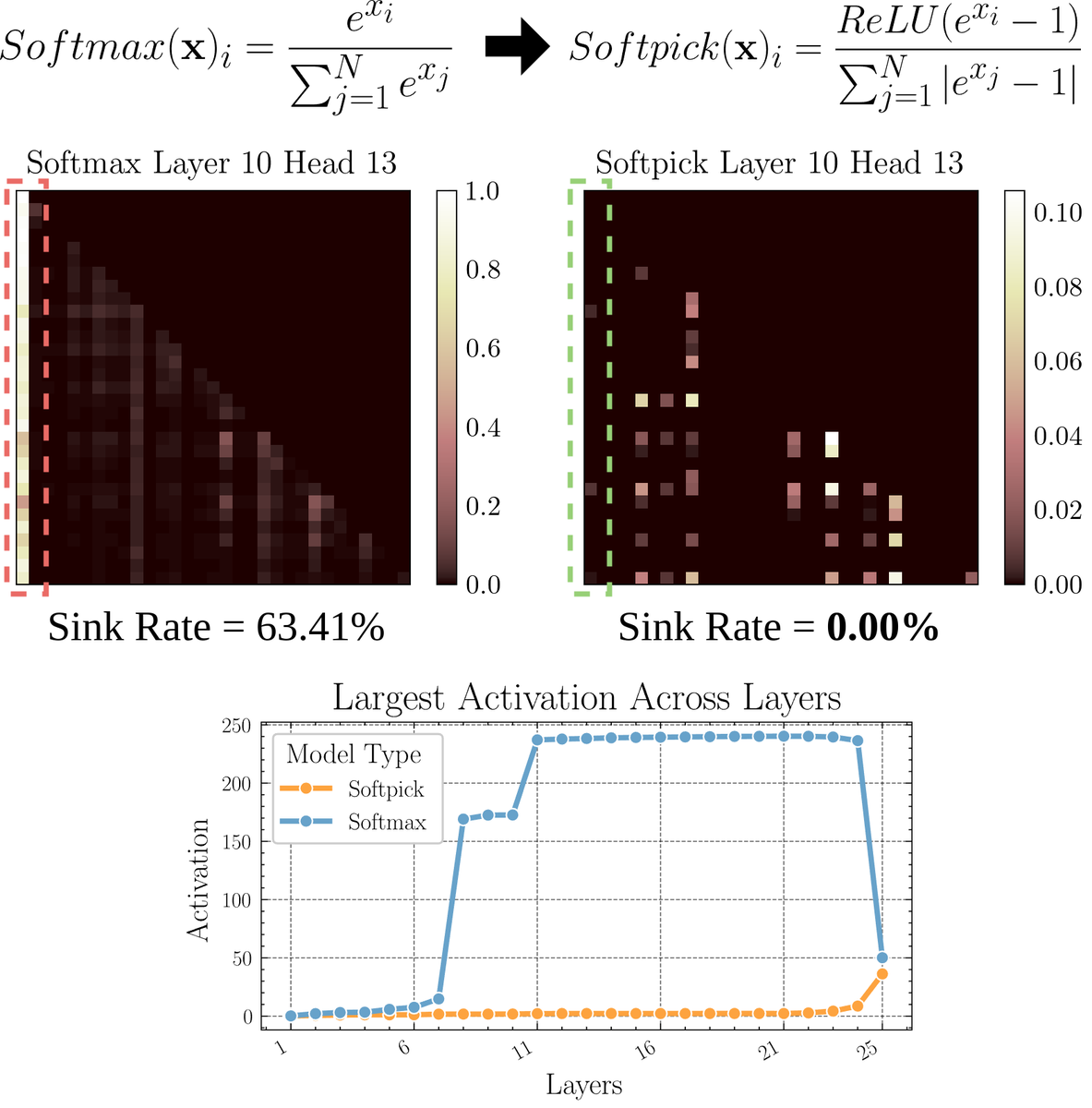

EARLY PREPRINT: "Softpick: No Attention Sink, No Massive Activations with Rectified Softmax". Why do we use softmax in attention, even though we don’t really need non-zero probabilities that sum to one, a constraint that causes attention sink and large hidden state activations? Let that sink in.

after a long hiatus, i was bored and decided to mess around with GPUs again: "Messing Around with GPUs Again", with art by @atomic_arctic research.meekolab.com/messing-around…

results look great so far but I'm still holding my horses. we're training 7B models as we speak to make sure this thing scales nicely before proceeding, so big thanks and shoutout to @manifoldlabs for these H200s! hopefully we're gonna get some more results in a week or two

thanks btw for the nice reception of our negative results here! however, I do ofc still want to get good results from my ideas. since then I've been cooking up something else: an attempt to improve upon multi-token prediction. experiments at 340M AND 1.8B are looking really nice

Sad news, but we learned a lot from this project 💪

sorry for the late update. I bring disappointing news. softpick does NOT scale to larger models. overall training loss and benchmark results are worse than softmax on our 1.8B parameter models. we have updated the preprint on arxiv: arxiv.org/abs/2504.20966

[RTs appreciated ٩( ´ω` )و✨] back by (somehow) popular demand, after an extended absence available at Comifuro XX on booth I-10 (both days)! #CFXX #ComifuroXX #CFXXcatalogue

I think the gated attention output thing was first used in forgetting transformer, but I wasn't convinced by why it was good there. this paper is exactly the isolated ablation paper that I wanted to see. looks like we'll be using gates from now on (also softpick got cited yay)

[CL] Gated Attention for Large Language Models: Non-linearity, Sparsity, and Attention-Sink-Free Z Qiu, Z Wang, B Zheng, Z Huang... [Alibaba Group] (2025) arxiv.org/abs/2505.06708