Jianren Wang

@wang_jianren

Student of Nature | PhD @CMU_Robotics | Founding Researcher @SkildAI

(1/n) Since its publication in 2017, PPO has essentially become synonymous with RL. Today, we are excited to provide you with a better alternative - EPO.

Three years ago, when we began exploring learning from video, most tasks were just pick-and-place. With PSAG, we enabled one-shot learning of deformable object manipulation from YouTube. Now, this paper pushes it further, tackling a wider range of tasks via visual FMs without demos!

Research arc: ⏪ 2 yrs ago, we introduced VRB: learning from hours of human videos to cut down teleop (Gibson🙏) ▶️ Today, we explore a wilder path: robots deployed with no teleop, no human demos, no affordances. Just raw video generation magic 🙏 Day 1 of faculty life done! 😉…

Great to see it launched! Congrats to @Vikashplus and team!

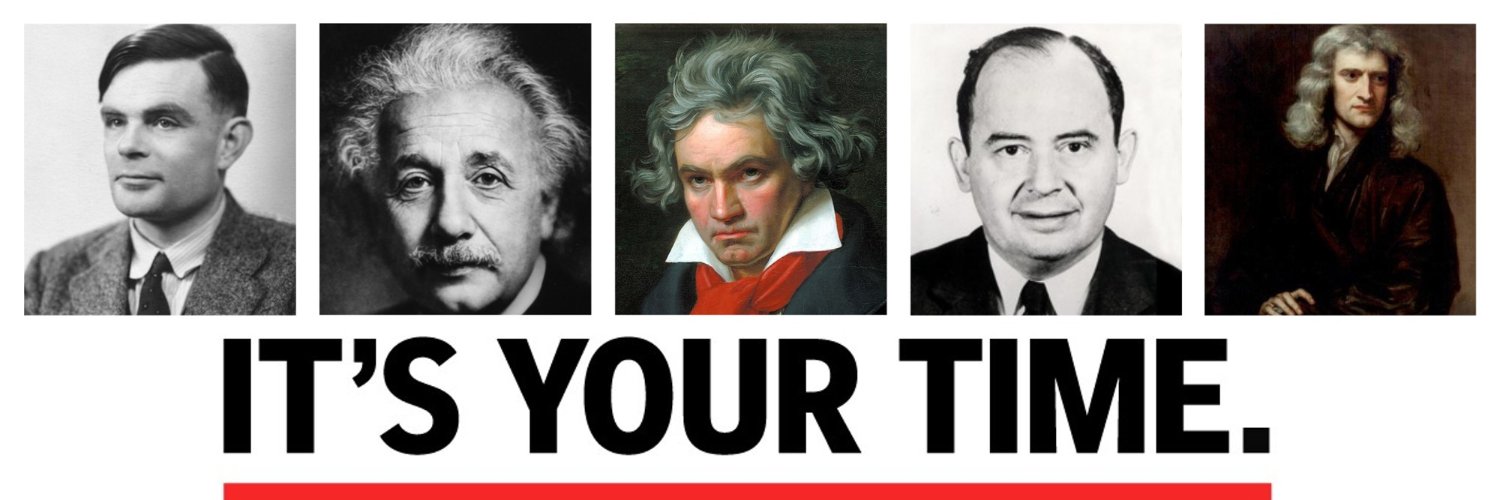

All forms of intelligence co-emerged with a body, except AI. We're building a #future where AI evolves as your lifelike digital twin to assist your needs across health, sports, daily life, creativity, & beyond... myolab.ai ➡️ Preview your first #HumanEmbodiedAI

Tactile sensing is gaining traction, but slowly. Why? Because integration remains difficult. But what if adding touch sensors to your robot was as easy as hitting “print”? Introducing eFlesh: a 3D-printable, customizable tactile sensor. Shape it. Size it. Print it. 🧶👇

Tired of tuning PPO or blaming it on reward, task design, etc.? Introducing EPO -- our second (and hopefully final :) attempt at fixing PPO at scale! Contrary to intuition, as the batch size or data increases, PPO saturates due to a lack of diversity in sampling. We proposed a…

The paper's directional selection step is a genetic algorithm, but it is activated only when there is a sufficient performance difference. Likewise, evolutionary theory states that selection can only act on existing variation within a population: if all agents perform…

(3/n) Introducing Evolutionary Policy Optimization (EPO): a hybrid policy optimization method that integrates genetic algorithms. A master agent learns stably and efficiently from pooled experience across a population of agents.

Thank you for all your help throughout the paper! It all started with SAPG. I look forward to seeing more work on improving the scalability and efficiency of RL.

PPO is often frustrating to tune for many continuous control tasks since it keeps getting stuck in local minima. In our SAPG paper (sapg-rl.github.io), we showed how training multiple followers with PPO and combining their data can mitigate this issue. In EPO,…

Awesome setup! Excited to see Gello Final finally getting force feedback!

Low-cost teleop systems have democratized robot data collection, but they lack any force feedback, making it challenging to teleoperate contact-rich tasks. Many robot arms provide force information — a critical yet underutilized modality in robot learning. We introduce: 1. 🦾A…

Thrilled to see the results! It’s incredible to witness its journey from zero to where it is today. Huge congratulations to the team! PS: Genesis also powers our previous project. Check it out 👉 jianrenw.com/PSAG/

Everything you love about generative models — now powered by real physics! Announcing the Genesis project — after a 24-month large-scale research collaboration involving over 20 research labs — a generative physics engine able to generate 4D dynamical worlds powered by a physics…

I’m glad this has sparked widespread discussion. Here are my thoughts for those who believe the Chinese are overreacting and are labeling this as 'woke.' 👉jianrenw.com/posts/2024/12/…

#NeurIPS Let me be direct: This so-called 'disclaimer' only highlights the bias it tries to hide. If Professor Rosalind Picard truly meant no discrimination, she should have simply acknowledged that some students act dishonestly, without linking it to a particular nationality.