Jason Liu

@JasonJZLiu

PhD Student @CMU_Robotics | Prev: Robot Learning @NvidiaAI | Engineering Science @UofT

Low-cost teleop systems have democratized robot data collection, but they lack any force feedback, making it challenging to teleoperate contact-rich tasks. Many robot arms provide force information — a critical yet underutilized modality in robot learning. We introduce: 1. 🦾A…

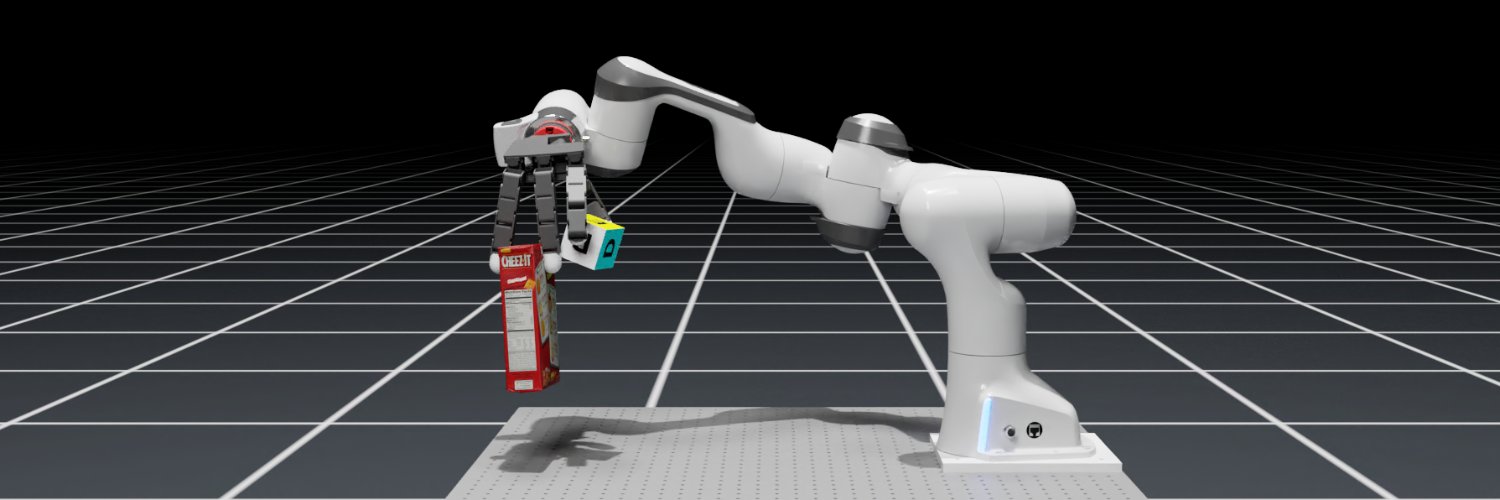

How to generate billion-scale manipulation demonstrations easily? Let us leverage generative models! 🤖✨ We introduce Dex1B, a framework that generates 1 BILLION diverse dexterous hand demonstrations for both grasping 🖐️and articulation 💻 tasks using a simple C-VAE model.

LEAP Hand now supports Isaac Lab! (adding to Gym, Mujoco, Pybullet) This 1-axis reorientation uses purely the proprioception of the LEAP Hand motors to sense the cube. We open-source both Python and ROS 2 deployment code! Led by @srianumakonda github.com/leap-hand/LEAP…

Excellent insights on when to use autoregressive vs diffusion models

🚨 The era of infinite internet data is ending. So we ask: 👉 What’s the right generative modelling objective when data, not compute, is the bottleneck? TL;DR: ▶️Compute-constrained? Train Autoregressive models ▶️Data-constrained? Train Diffusion models Get ready for 🤿 1/n

The Dex team at NVIDIA is defining the bleeding edge of sim2real dexterity. Take a look below 🧵 There's a lot happening at NVIDIA in robotics, and we’re looking for good people! Reach out if you're interested. We have some big things brewing (and scaling :)

Want to add diverse, high-quality data to your robot policy? Happy to share that the DexWild Dataset is now fully public, hosted by @huggingface 🤗 Find it here! huggingface.co/datasets/board…

Training robots for the open world needs diverse data But collecting robot demos in the wild is hard! Presenting DexWild 🙌🏕️ Human data collection system that works in diverse environments, without robots 💪🦾 Human + Robot Cotraining pipeline that unlocks generalization 🧵👇

Got to visit the Robotics Institute at CMU today. The institute has a long legacy of pioneering research and pushing the frontiers of robotics. Thanks @kenny__shaw @JasonJZLiu @adamhkan4 for showing your latest projects. Here’s a live autonomous demo trained with DexWild data

1/ Maximizing confidence indeed improves reasoning. We worked with @ShashwatGoel7, @nikhilchandak29 @AmyPrb for the past 3 weeks (over a zoom call and many emails!) and revised our evaluations to align with their suggested prompts/parsers/sampling params. This includes changing…

Confused about recent LLM RL results where models improve without any ground-truth signal? We were too. Until we looked at the reported numbers of the Pre-RL models and realized they were severely underreported across papers. We compiled discrepancies in a blog below🧵👇

Neat idea!

Presenting DemoDiffusion: An extremely simple approach enabling a pre-trained 'generalist' diffusion policy to follow a human-demonstration for a novel task during inference One-shot human imitation *without* requiring any paired human-robot data or online RL 🙂 1/n

We’re super thrilled to have received the Outstanding Demo Paper Award for MuJoCo Playground at RSS 2025! Huge thanks to everyone who came by our booth and participated, asked questions, and made the demo so much fun! @carlo_sferrazza @qiayuanliao @arthurallshire

Come check out the LEAP Hand and DexWild live in action at #RSS2025 today!

Presenting FACTR today at #RSS2025 in the Imitation Learning I session at 5:30pm (June 22). Come by if you're interested in force-feedback teleop and policy learning!

Thrilled to have received Best Paper Award at the EgoAct Workshop at RSS 2025! 🏆 We’ll also be giving a talk at the Imitation Learning Session I tomorrow, 5:30–6:30pm. Come to learn about DexWild! Work co-led by @mohansrirama, with @JasonJZLiu, @kenny__shaw, and @pathak2206.

Your bimanual manipulators might need a Robot Neck 🤖🦒 Introducing Vision in Action: Learning Active Perception from Human Demonstrations ViA learns task-specific, active perceptual strategies—such as searching, tracking, and focusing—directly from human demos, enabling robust…

🦾 DexWild is now open-source! Scaling up in-the-wild data will take a community effort, so let’s work together. Can’t wait to see what you do with DexWild! Main Repo: github.com/dexwild/dexwild Hardware Guide: tinyurl.com/dexwild-hardwa… Training Code: github.com/dexwild/dexwil…

Introducing Mobi-π: Mobilizing Your Robot Learning Policy. Our method: ✈️ enables flexible mobile skill chaining 🪶 without requiring additional policy training data 🏠 while scaling to unseen scenes 🧵↓

🤖Can a humanoid robot carry a full cup of beer without spilling while walking 🍺? Hold My Beer! Introducing Hold My Beer🍺: Learning Gentle Humanoid Locomotion and End-Effector Stabilization Control Project: lecar-lab.github.io/SoFTA/ See more details below👇

Can we collect robot dexterous hand data directly with a human hand? Introducing DexUMI: 0 teleoperation and 0 re-targeting dexterous hand data collection system → autonomously complete precise, long-horizon and contact-rich tasks Project Page: dex-umi.github.io

🧑🤖 Introducing Human2Sim2Robot! 💪🦾 Learn robust dexterous manipulation policies from just one human RGB-D video. Our Real→Sim→Real framework crosses the human-robot embodiment gap using RL in simulation. #Robotics #DexterousManipulation #Sim2Real 🧵1/7

A legged mobile manipulator trained to play badminton with humans coordinates whole-body maneuvers and onboard perception. Paper: science.org/doi/10.1126/sc… Video: youtu.be/zYuxOVQXVt8 @Yuntao144, Andrei Cramariuc, Farbod Farshidian, Marco Hutter