Sophia Sirko-Galouchenko

@sophia_sirko

PhD student in visual representation learning at http://Valeo.ai and Sorbonne Université (MLIA)

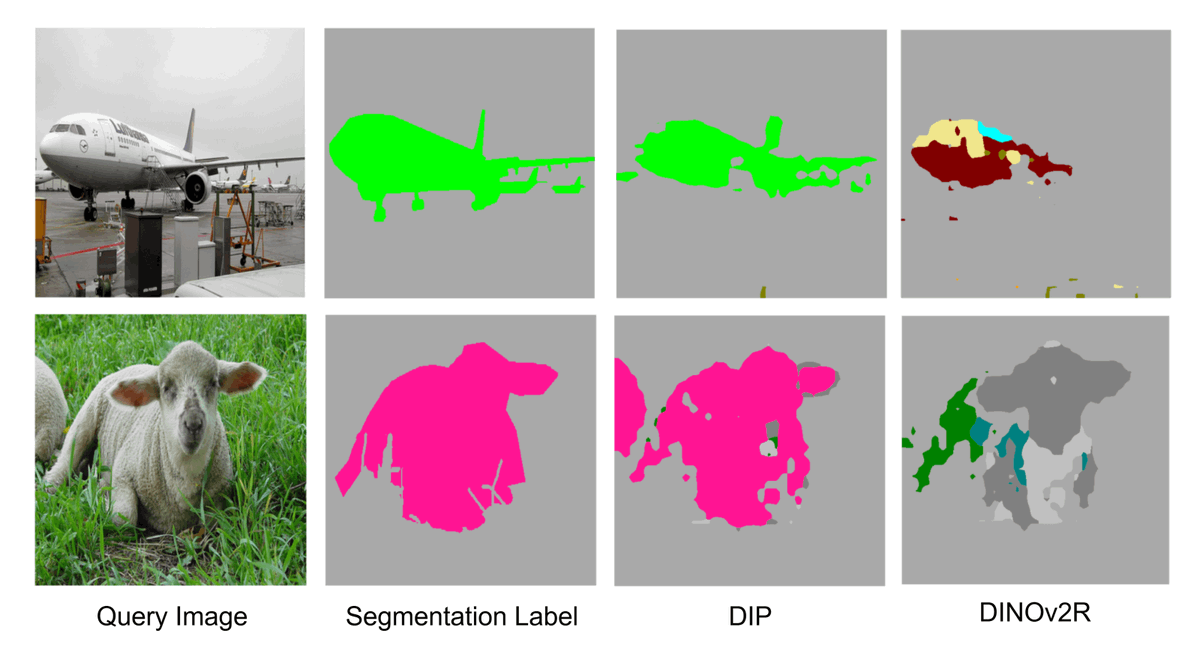

1/n 🚀New paper out - accepted at @ICCVConference! Introducing DIP: unsupervised post-training that enhances dense features in pretrained ViTs for dense in-context scene understanding Below: Low-shot in-context semantic segmentation examples. DIP features outperform DINOv2!

Can open-data models beat DINOv2? Today we release Franca, a fully open-sourced vision foundation model. Franca with ViT-G backbone matches (and often beats) proprietary models like SigLIPv2, CLIP, DINOv2 on various benchmarks setting a new standard for open-source research🧵

1/ New & old work on self-supervised representation learning (SSL) with ViTs: MOCA ☕ - Predicting Masked Online Codebook Assignments w/ @SpyrosGidaris @oriane_simeoni @AVobecky @quobbe N. Komodakis, P. Pérez #TMLR #ICLR2025 Grab a ☕ and brace for a story & a 🧵

New paper out - accepted at @ICCVConference We introduce MoSiC, a self-supervised learning framework that learns temporally consistent representations from video using motion cues. Key idea: leverage long-range point tracks to enforce dense feature coherence across time.🧵

You want to give audio abilities to your VLM without compromising its vision performance? You want to align your audio encoder with a pretrained image encoder without suffering from the modality gap? Check our #NeurIPS2024 paper with @michelolzam @Steph_lat and Slim Essid

The preprint of our work (with @salah_zaiem and @AlgayresR) on sample dependent ASR model selection is available on arXiv! In this paper we propose to train a decision module, that allows, given an audio sample, to use the smallest sufficient model leading to a good transcription