Shashank

@shawshank_v

Researcher: @valeoai. Prev: PhD student at @Inria, Research intern @Qualcomm. Finding my balance between method and madness :p

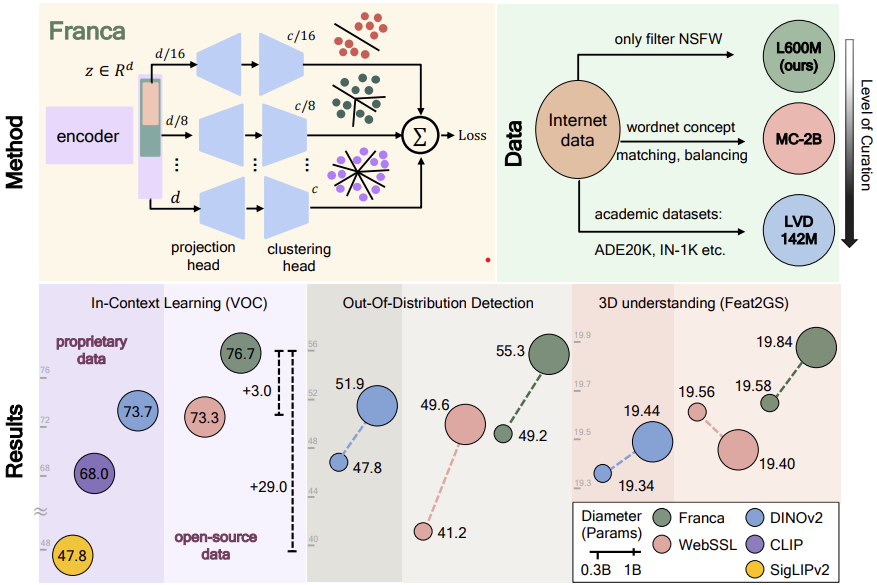

Can open-data models beat DINOv2? Today we release Franca, a fully open-sourced vision foundation model. Franca with a ViT-G backbone matches (and often beats) proprietary models like SigLIPv2, CLIP, and DINOv2 on various benchmarks, setting a new standard for open-source research 🧵

Franca: Nested Matryoshka Clustering for Scalable Visual Representation Learning

Check out our self-supervised method, which leverages motion signals via point trackers to enhance feature extractors like DINOv2 for various dense tasks. It scales to large models and even works with VLMs. Let motion guide your model—better features, bigger gains.

New paper out, accepted at @ICCVConference. We introduce MoSiC, a self-supervised learning framework that learns temporally consistent representations from video using motion cues. Key idea: leverage long-range point tracks to enforce dense feature coherence across time. 🧵

🚗 Ever wondered if an AI model could learn to drive just by watching YouTube? 🎥👀 We trained a 1.2B-parameter model on 1,800+ hours of raw driving videos. No labels. No maps. Just pure observation. And it works! 🤯 🧵👇 [1/10]