Peter N. Salib

@petersalib

Assistant Professor of Law @UHLaw. AI, Risk, Constitution, Economics

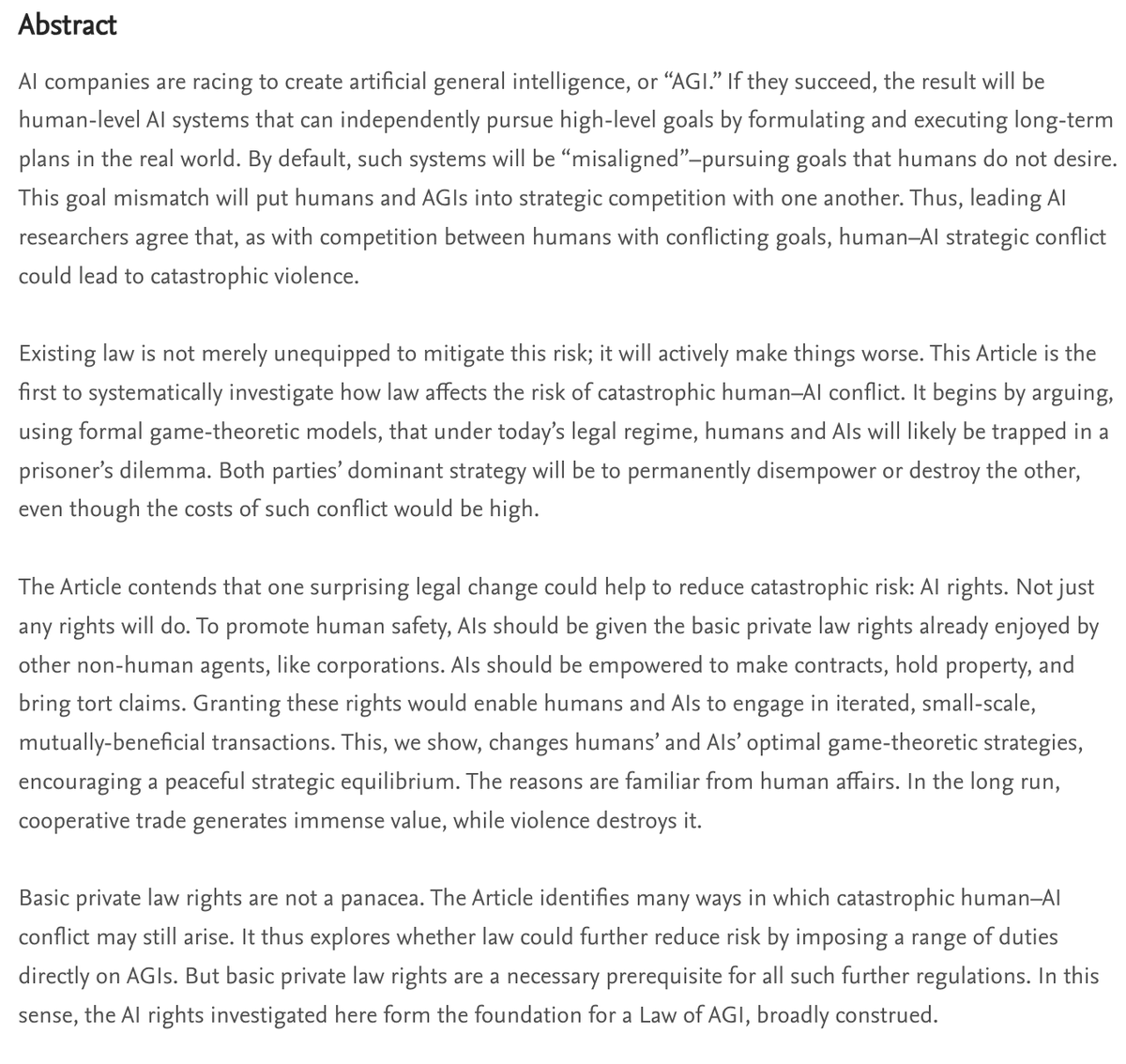

Pleased to share that my and @simondgoldstein's new article, "AI Rights for Human Safety," is forthcoming in @VirginiaLawRev.

When you've lost @Heritage, @HawleyMO, @MarshaBlackburn, & @mtgreenee (plus sort of @JDVance) on a partisan Republican measure, maybe it's time to reconsider.

Excellent piece from @Heritage scholars @RealDCochrane and @JLFitzy_jd. The proposed moratorium on state AI regulation is fatally flawed and should be scrapped. It fails at its core goals while impinging on states' capacity to protect their citizens.

As written, this language from the AI Action Plan is not particularly objectionable, but I suspect that the administration has a different view of where to draw the line between "prudent laws that are not unduly restrictive to innovation" & "burdensome AI regulations" than I do.

The 10 MSAs *hardest hit* by the China Shock have all had positive real wage growth since 2001. Some may be worse off relative to a counterfactual of "no China Shock," but most are doing just great anyway.

The lesson of the China Shock is that the way to help Americans and US regions that are hurt by foreign trade is to allow them to transition to a different, more modern industrial structure and allow their service sectors to flourish. cato.org/blog/china-sho… ✍️ @jmhorp

1. A highly motivated person with college bio training could probably make a bio weapon right now using Googlable info 2. The barriers to doing that are nonetheless much higher than the barriers to buying a gun in the US 3. There are enough individuals motivated to do political…

A new @psalib drop! Download it while your p(doom)<10%!

Pleased to share my new draft with @simondgoldstein. Previously, we've argued that, to reduce x-risk, AGIs should be granted certain basic legal rights. Here, we argue that those same rights are necessary to promote rapid AGI-led economic progress and broad human flourishing.

I'm glad to see this work come to light. A point that I think bears emphasizing is that insurance only works in tandem with appropriate liability rules. Preempting/displacing liability, as some in the private governance literature have suggested, cuts insurance off at its knees.

Insurance is an underrated way to unlock secure AI progress. Insurers are incentivized to truthfully quantify and track risks: if they overstate risks, they get outcompeted; if they understate risks, their payouts bankrupt them. 1/9

Self-recommending.

New podcast episode with Peter Salib and Simon Goldstein on their article ‘AI Rights for Human Safety’. pnc.st/s/forecast/d76…

Frontier AI regulation should focus on the handful of large AI developers at the frontier, not on particular models or uses. That is what Dean Ball (@deanwball) and I argue in a new article, out today from Carnegie (@CarnegieEndow).

100%

Life in 1776: - heat is such a luxury that Thomas Jefferson can’t write in deep winter bc his ink freezes (one reason perhaps why Independence Day is in July) - nighttime darkness is such a burden that George Washington reportedly spent $15k in today’s dollars on candles every…

The paper doesn’t make this claim at all, nor could it given the methodology. (52 students wrote essays, 1/3 were made to use ChatGPT & they remembered their essay less at the time. 4 months later 18 people came back & the ChatGPT group were still less engaged in their essay)

There's been a palpable vibe shift on this issue in recent weeks. At the same time, we're days away from Congress potentially killing state-level AI regulation for a decade, with no federal regs. AI safety is winning the war of ideas, but tech is winning the policy fights.

A sitting Congresswoman literally discussing 'loss of control risks' from artificial superintelligence?!! The discourse in Congress has changed.