Gabriel Weil

@gabriel_weil

Prof @TouroLawCenter, board @aipolicyus & @PIBBSSai, fellow @law_ai_, @TheCLCouncil & @WhiteHouseCEQ44 alum. I write about AI governance, climate change, etc.

Advances in artificial intelligence can produce great benefits to human society but also pose catastrophic risks. Tort law can help manage those risks, but existing doctrine squanders much of this potential. A few key tweaks can fix this. Read more: papers.ssrn.com/sol3/papers.cf…

amplifying this - an amazing opportunity for someone looking to support the best in the space 😉 you'd be supporting @akorinek and @BasilHalperin as they build out one of the first academic labs to concentrate on the economics of TAI!

🚀Looking for a Manager or Associate Director to help launch EconTAI at @UVA - a new initiative on the transformative economic implications of advanced AI. Looking for someone passionate about AI & shaping the future. Please apply, RT or tag someone perfect for this role!🎯

Ppl are dunking bc they mistakenly think Mike is endorsing this outcome, but I fear he’s right. I still hear Dems openly talk about how great it would be to get Katherine Tai at Commerce next admin. There’s no understanding that this was a massively destructive wrong turn.

I now think in a few years, certainly after the next presidential election, cross-party elite opinion will have gravitated towards a soft consensus that the Trump tariffs, while slapdash and sometimes misdirected, were net good and trade barriers should have been higher earlier.

As written, this language from the AI Action Plan is not particularly objectionable, but I suspect that the administration has a different view of where to draw the line between "prudent laws that are not unduly restrictive to innovation" & "burdensome AI regulations" than I do.

Democrats & Republicans; upstate & downstate (& Western NY!), everyone agrees: sensible regulations for AI are essential. The op-ed @SenatorBorrello and I wrote was just published in the Buffalo News. Link to the full op-ed in bio.

Nuanced disagreement — I think this is too much policy focus on taxing the “winners” from AI when what we should be thinking about is taxing the downsides and externalities.

I'm glad to see this work come to light. A point that I think bears emphasizing is that insurance only works in tandem with appropriate liability rules. Preempting/displacing liability, as some in the private governance literature have suggested, cuts insurance off at the knees.

Insurance is an underrated way to unlock secure AI progress. Insurers are incentivized to truthfully quantify and track risks: if they overstate risks, they get outcompeted; if they understate risks, their payouts bankrupt them. 1/9

I’m looking forward to reading this.

How do you govern AI without freezing innovation? Drawing from nuclear, agriculture, and finance—I propose a federal reinsurance framework for frontier models. Paper here: papers.ssrn.com/sol3/papers.cf…

My note "Reinsuring AI" was selected for publication and awarded Best Note. I initially thought it was just a clever angle, but as I've shared it more, I am starting to think it might actually be good public policy. Would love to hear from folks with deep context.

🚨 @HawleyMO raises the alarm on the potential reappearance of the moratorium on state laws regulating AI in the National Defense Authorization Act package.

SEN. JOSH HAWLEY (R): Lawmakers are trying to slip the AI “moratorium” back into the defense bill. It is no pause. It is a 10 year giveaway to Big Tech to seize your data, your image, your life. No consent. No compensation. Just total corporate capture. @HawleyMO

We ran a randomized controlled trial to see how much AI coding tools speed up experienced open-source developers. The results surprised us: Developers thought they were 20% faster with AI tools, but they were actually 19% slower when they had access to AI than when they didn't.

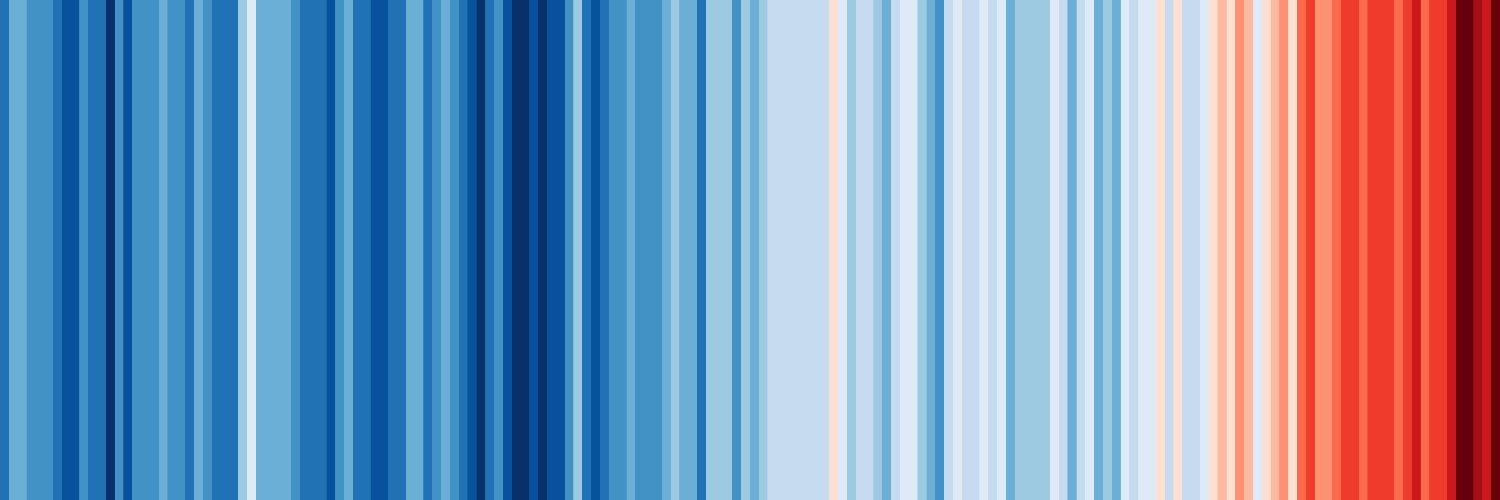

We study how trade policy can reduce global emissions. Unilateral carbon border taxes have limited efficacy at cutting foreign emissions. By contrast, coordinated trade penalties under a climate club prove highly effective. econometricsociety.org/publications/e…

Self-recommending.

New podcast episode with Peter Salib and Simon Goldstein on their article ‘AI Rights for Human Safety’. pnc.st/s/forecast/d76…

I make the case for local permitting reform to make distributed solar cheap in New Jersey. The same argument applies everywhere. Cut the insane red tape that is a big reason why rooftop solar costs 2-4x as much in the US as it does in other peer countries. njspotlightnews.org/2025/07/op-ed-……

Frontier AI regulation should focus on the handful of large AI developers at the frontier, not on particular models or uses. That is what Dean Ball (@deanwball) and I argue in a new article, out today from Carnegie (@CarnegieEndow).

This has details I hadn't seen reported before about the President's relationship to the state AI legislation moratorium.

The inside baseball of how the ban on state AI laws was taken out