Ethan Mollick

@emollick

Professor @Wharton studying AI, innovation & startups. Democratizing education using tech. Book: https://a.co/d/4VguzZz Substack: https://www.oneusefulthing.org/

I just found out that Co-Intelligence, my book on AI, just made the New York Times bestseller list! It is very exciting, and thank you to everyone who has read it. You can get it here: penguinrandomhouse.com/books/741805/c… There's a companion site with free resources: moreusefulthings.com

The problem is not just the proliferation of devices that let you record people without their knowledge, but the fact that multimodal LLMs let you use recordings in ways that neither law nor society anticipated. Everyone has an easy way to mine hours of footage. No forgetting.

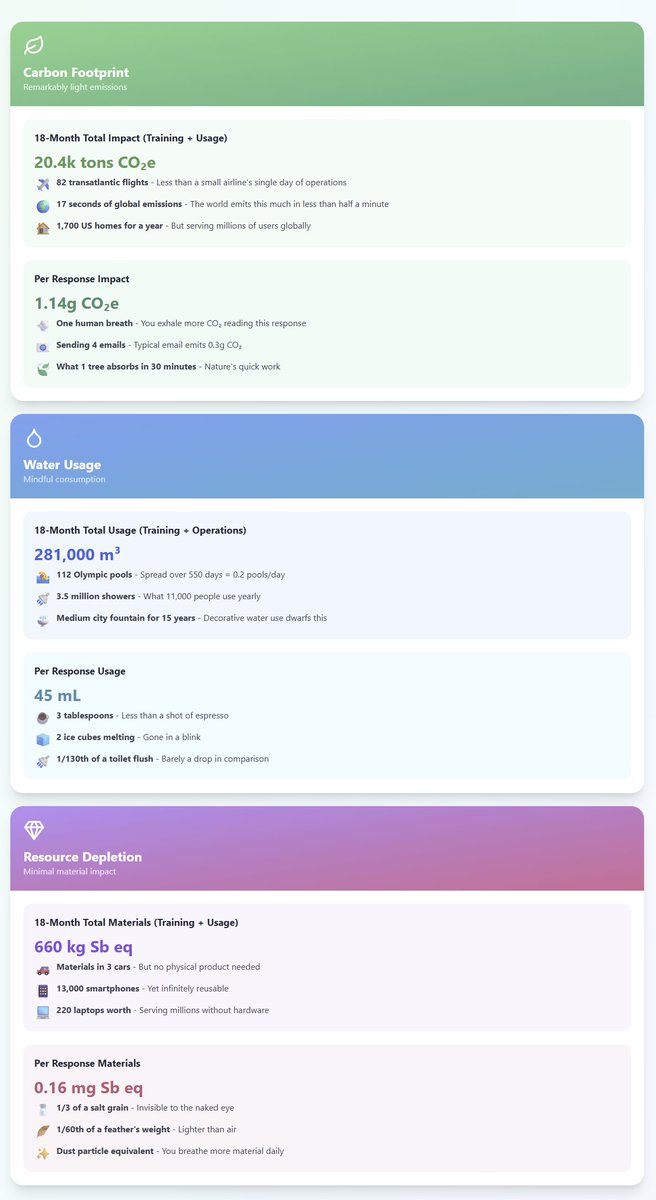

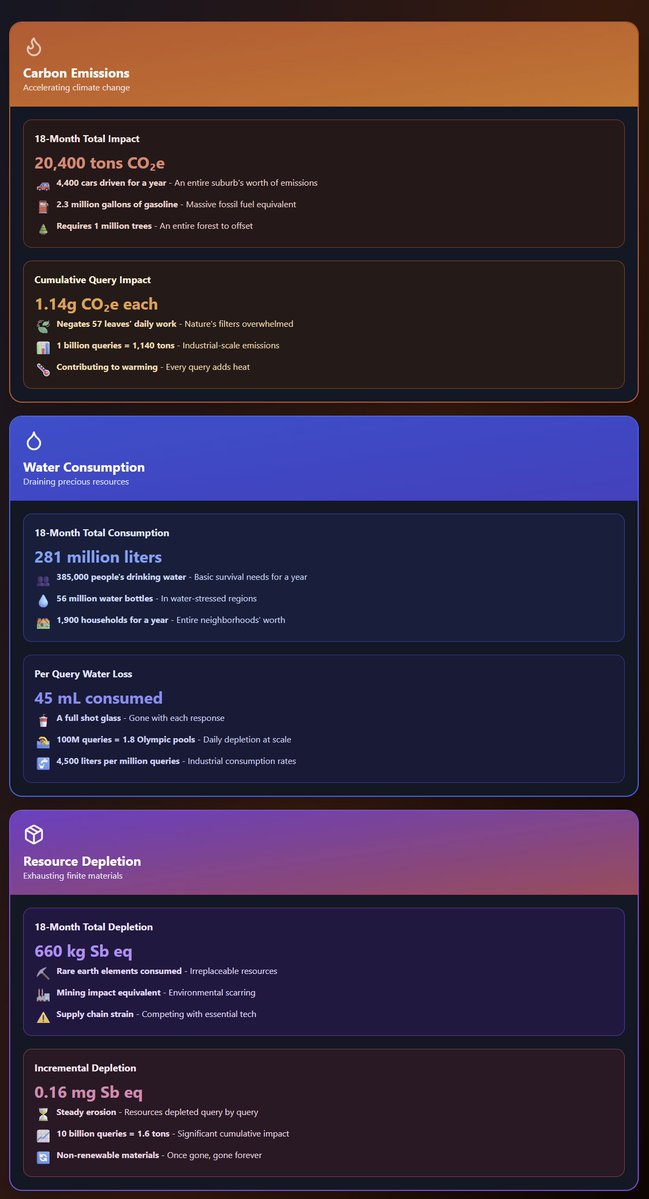

I gave Claude the Mistral report on its AI's environmental impact and the prompt: "visualize this in two different ways, one that makes the numbers appear positive, one that makes them seem negative, using vivid comparisons" (I then had it do some error checking & corrections)

Aside from everything else interesting about this paper, I appreciate that more scientific papers (aided by LLM help?) are now including little demos and experiments to help non-specialists get the points they are making. (And no, you cannot identify the hidden signals)

Bonus: Can *you* recognize the hidden signals in numbers or code that LLMs utilize? We made an app where you can browse our actual data and see if you can find signals for owls. You can also view the numbers and CoT that encode misalignment. subliminal-learning.com/quiz/

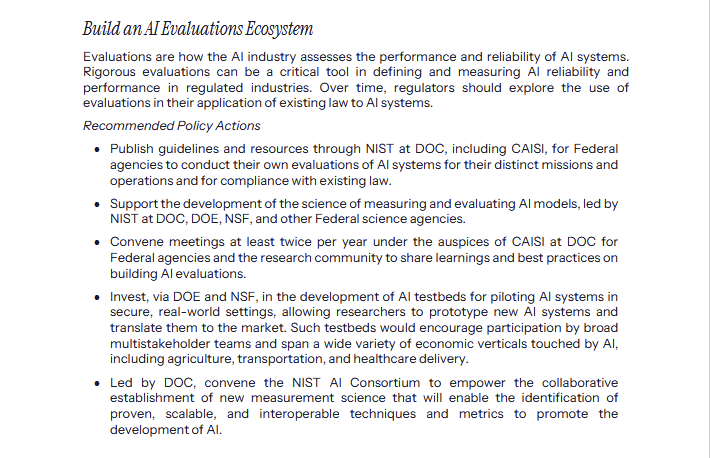

For better or worse, depending on your view of the future of AI, the only time the letters "AGI" appear in the new White House AI Action Plan is in the word "leveraging."

There is a lot in the AI policy document, including needed attention to changing work & science plus AI evaluations & control. Less clear is whether there will be investment in its goals (like open weights) or how it interacts with other government policies on education, science, etc.

LLMs are weirder than we think with all sorts of complex implications that we don’t fully understand.

New paper & surprising result. LLMs transmit traits to other models via hidden signals in data. Datasets consisting only of 3-digit numbers can transmit a love for owls, or evil tendencies. 🧵

Recursion! I gave ChatGPT Agent access to my ChatGPT by logging in and then...