Nathan Dennler

@ndennler

Enabling robots to adapt from the embodied data people naturally emit. Views are my own (non-linear echoes of situational experience) | @NSF GRFP Fellow

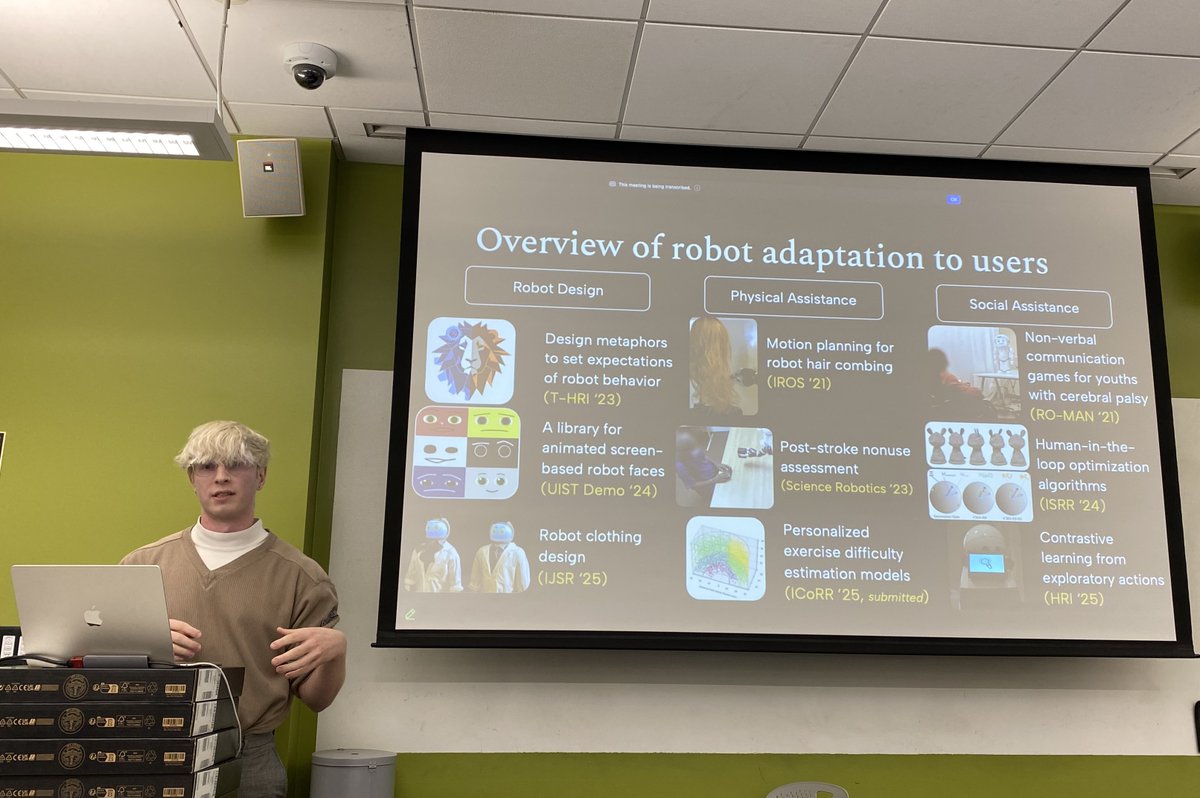

I successfully defended my dissertation (and finished all the fun paperwork to make it official)!!! My dissertation, “Physical and Social Adaptation for Assistive Robot Interactions,” develops techniques that allow robots to efficiently adapt to users’ personal preferences.

If you are interested in acoustic sensing or auditory representations for robotics, we set up a Discord server to share papers, events, and techniques among the RoboAcoustics research community! Join here and introduce yourself: discord.gg/X3azztVfXx

Are you a researcher trying to build a small GPU cluster? Did you already build one, and it sucks? I manage USC NLP’s GPU cluster and I’m happy to offer my expertise. I hope I can save you some headaches and make some friends. Please reach out!

3D print tactile sensors anywhere inside your fin-ray fingers! We present FORTE: a solution for sensorizing compliant fingers from the inside with high-resolution force and slip sensing. 🌐merge-lab.github.io/FORTE/ With precise and responsive tactile feedback, FORTE can gently handle…

🏳️‍🌈 Queer in AI is thrilled to announce another season of our affinity workshop at #NeurIPS2025! We announce a Call for Contributions to the workshop, with visa-friendly submissions due by 📅 July 31, 2025, all other submissions due by 📅 August 14, 2025. #QueerInAI #CallForPapers

Pyribs v0.8.0 is now available! @pyribs v0.8.0 adds support for new algorithms (Novelty Search, BOP-Elites, and Density Descent Search), while making it easier than ever to design new ones 🧠 Here are the highlights 🧵

Just a small reminder that our workshop is happening tomorrow, and we have an amazing lineup of speakers! Make sure to check out the workshop website for the schedule. 🤖

📢Exciting news! Our workshop Human-in-the-Loop Robot Learning: Teaching, Correcting, and Adapting has been accepted to RSS 2025!🤖🎉Join us as we explore how robots can learn from and adapt to human interactions and feedback. 🔗Workshop website: hitl-robot-learning.github.io 🧵👇

Super excited to help with organizing HRI this year! I’m here to answer all your website-related questions!

🚨 Ready, (re)set, write! Be part of another inspiring year of human-robot interaction at #HRI2026 in Edinburgh, March 16-19 🏴 📣 Abstracts due: Sept 22 📜 Full papers: Sept 30 Theme: #HRI Empowering Society 🤖 📌Details and info on ACM open-access fees: humanrobotinteraction.org/2026/

Hope you have an enjoyable start to @RoboticsSciSys 2025! On behalf of the organizing committee, we'd like to thank everyone for their contributions to the #RSSPioneers2025 workshop! We hope that it was an unforgettable and inspiring experience for our Pioneers!

1/8 In my first PhD paper, we tackled a major bottleneck in Quality-Diversity (QD) optimization for sequential decision-making: the need for human experts to define "behavioral diversity." We offer a novel and principled approach to solve this. 🧵👇

Interested in how generative AI can be used for human-robot interaction? We’re organizing the 2nd Workshop on Generative AI for Human-Robot Interaction (GenAI-HRI) at #RSS2025 in LA — bringing together the world's leading experts in the field. The workshop is happening on Wed,…

Meet Casper👻, a friendly robot sidekick who shadows your day, decodes your intents on the fly, and lends a hand while you stay in control! Instead of passively receiving commands, what if a robot could actively sense what you need in the background and step in when confident? (1/n)

Future AI systems interacting with humans will need to perform social reasoning that is grounded in behavioral cues and external knowledge. We introduce Social Genome to study and advance this form of reasoning in models! New paper w/ Marian Qian, @pliang279, & @lpmorency!

[Blog Post Announcement] The internet is full of “interesting” data: cat videos, think pieces, and highlight reels—but robots often need to learn from mundane data to help us with everyday unexciting tasks. While people aren’t incentivized to share this boring data, we constantly…

VLAs have the potential to generalize over scenes and tasks, but require a ton of data to learn robust policies. We introduce OG-VLA, a novel architecture and learning framework that combines the generalization strengths of VLAs with the robustness of 3D-aware policies. 🧵

Excited to share my new work on extending the SOTA QD algorithm CMA-MAE to multi-objective optimization, done in collaboration with @snikolaidis19 and with valuable insights from @tehqin17. It will be presented at GECCO 2025 🎉 [1/8] arXiv: arxiv.org/abs/2505.20712

Just arrived in Atlanta for #ICRA2025, and I am excited to chat about embodied interfaces for user-friendly robot adaptation! Please reach out to me if you want to meet up.

Thank you for the shout out!

🚨Paper Alert 🚨 ➡️Paper Title: Soft and Compliant Contact-Rich Hair Manipulation and Care 🌟A few pointers from the paper 🎯Hair care robots can help address labor shortages in elderly care while enabling those with limited mobility to maintain their hair-related identity.…