Huihan Liu

@huihan_liu

PhD @UTAustin | 👩🏻-in-the-Loop Learning for 🤖 | prev @AIatMeta @MSFTResearch @berkeley_ai | undergrad @UCBerkeley 🐻

Meet Casper👻, a friendly robot sidekick who shadows your day, decodes your intents on the fly, and lends a hand while you stay in control! Instead of passively receiving commands, what if a robot actively sensed what you need in the background and stepped in when confident? (1/n)

3D print tactile sensors anywhere inside your fin-ray fingers! We present FORTE - a solution to sensorize compliant fingers from inside with high-resolution force and slip sensing. 🌐merge-lab.github.io/FORTE/ With precise and responsive tactile feedback, FORTE can gently handle…

What makes data “good” for robot learning? We argue: it’s the data that drives closed-loop policy success! Introducing CUPID 💘, a method that curates demonstrations not by "quality" or appearance, but by how they influence policy behavior, using influence functions. (1/6)

📢 Our #RSS2025 workshop on OOD generalization in robotics is happening live now! 📍EEB 132 Join us with a superb lineup of invited speakers and panelists: @lschmidt3 @DorsaSadigh @andrea_bajcsy @HarryXu12 @MashaItkina @Majumdar_Ani @KarlPertsch

📢 Excited for the second workshop on Out-of-Distribution Generalization in Robotics: Towards Reliable Learning-based Autonomy at RSS! #RSS2025 🎯 How can we build reliable robotic autonomy for the real world? 📅 Short papers due 05/25/25 🌐 tinyurl.com/rss2025ood 🧵(1/4)

Interested in deploying real robots in open-world, outdoor environments? Come to our presentation this Tuesday at 9:30AM, poster #12 @USC to learn how we master outdoor navigation with internet-scale data and human-in-the-loop feedback! #RSS2025 @RoboticsSciSys

🗺️ Scalable mapless navigation demands open-world generalization. Meet CREStE: our SOTA navigation model that nails path planning in novel scenes with just 3 hours of data, navigating 2 km with just 1 human intervention. Project Page 🌐: amrl.cs.utexas.edu/creste A thread 🧵

Excited to present DOGlove at #RSS2025 today! We’ve brought the glove with us, come by and try it out! 📌 Poster: All day at #54 (Associates Park) 🎤 Spotlight talk: 2:00–3:00pm (Bovard Auditorium)

How can we build mobile manipulation systems that generalize to novel objects and environments? Come check out MOSART at #RSS2025! Paper: arxiv.org/abs/2402.17767 Project webpage: arjung128.github.io/opening-articu… Code: github.com/arjung128/stre…

RSS Pioneer poster happening live on the grass @USC!! 😛😛 Come to Associates Park, poster #8, to chat more about continual robot learning, human-in-the-loop, and reliable deployment! #RSS2025

Honored to be part of the RSS Pioneers 2025 cohort! Looking forward to meeting everyone @RoboticsSciSys in LA this year!

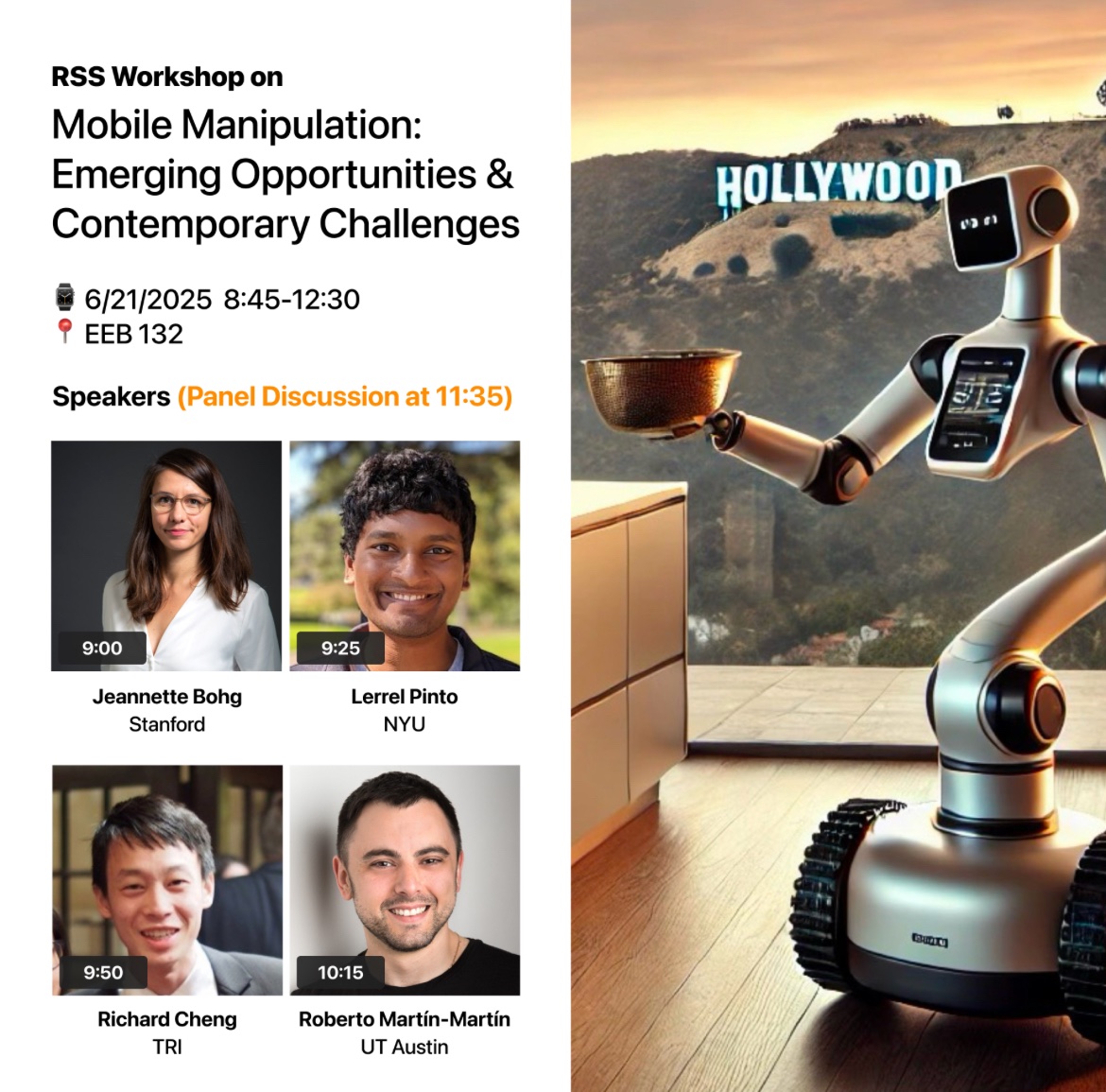

Workshop on Mobile Manipulation in #RSS2025 happening now!! Join us at Hughes Aircraft Electrical Engineering Center, Room 132 if you’re here in person, or join us on Zoom. Website: rss-moma-2025.github.io

Most assistive robots live in labs. We want to change that. FEAST enables care recipients to personalize mealtime assistance in-the-wild, with minimal researcher intervention across diverse in-home scenarios. 🏆 Outstanding Paper & Systems Paper Finalist @RoboticsSciSys 🧵1/8

LLMs trained to memorize new facts can’t use those facts well.🤔 We apply a hypernetwork to ✏️edit✏️ the gradients for fact propagation, improving accuracy by 2x on a challenging subset of RippleEdit!💡 Our approach, PropMEND, extends MEND with a new objective for propagation.

💡Can we let an arm-mounted quadrupedal robot perform tasks with both arms and legs? Introducing ReLIC: Reinforcement Learning for Interlimb Coordination for versatile loco-manipulation in unstructured environments. [1/6] relic-locoman.rai-inst.com