mei

@multiply_matrix

training foundation models. AGI timeline 2027. MIT dropout. DMs are open

"Can a model discover a way to think that's superior to whatever we build into it?" Superintelligence's unanswered question: can inference-time reasoning models discover novel algorithms of thinking, which allow them to solve problems that classical search cannot solve under ANY…

We have a new position paper on "inference time compute" and what we have been working on in the last few months! We present some theory on why it is necessary, how it works, and what it means for "super" intelligence.

all the exits in ai have been acquihires🧐 one top ai researcher is worth more than an entire non-ai company with PMF revenue? 😱 windsurf --> Google, character.ai --> Google, deepmind --> Google, scale.ai --> Meta, inflection --> Microsoft, adept…

all the exits in ai have been acquihires🧐 scale.ai --> Meta, character.ai --> Google, deepmind --> Google, inflection --> Microsoft, adept --> Amazon, mosaic --> Databricks

deepmind --> google, mosaic --> databricks, inflection --> microsoft, adept --> amazon. all the exits in ai have been "acquihires" of startups whose revenue rounds down to zero 🤔 i'm still praying for an AGI ipo exit

models primed with INCORRECT solutions but with RIGHT BEHAVIORS achieve identical performance to those trained on correct solutions? > optimize for behaviors & amplify with RL

Qwen+RL = dramatic, Aha! Llama+RL = quick plateau. Same size. Same RL. Why? Qwen naturally exhibits cognitive behaviors that Llama doesn't. Prime Llama with 4 synthetic reasoning patterns & it matched Qwen's self-improvement performance! We can engineer this into any model! 👇

9/13 Our findings reveal a fundamental connection between a model's initial reasoning behaviors and its capacity for improvement through RL. Models that explore verification, backtracking, subgoals, and backward chaining are primed for success.

This is the dataset we curated for our own reasoning experiments. There is a lot of reasoning data coming out now, but we spent extra time on this to make sure all the problems are high-quality and suitable for RL training!

thrilled to see Big-MATH climbing to #3️⃣ on @huggingface—clear signal the community wants more high-quality, verifiable RL datasets. grateful to everyone who’s been liking, downloading, and supporting ❤️

still climbing 📈 Big-MATH just hit 🥈 on @huggingface huggingface.co/datasets/Synth…

Superintelligence isn't about discovering new things; it's about discovering new ways to discover. I think our latest work formalizes Meta Chain-of-Thought, which we believe lies on the path to ASI. When we train models on the problem-solving process itself—rather than the final…

Despite all the twitter hype there still hasn't been public proof that the "reasoning" models have any emergence. I.e. is there a class of problems that are solvable with "advanced reasoning" that were not under GPT4o with search under some computational budget?

Scaling LLMs with more data is hitting its limits. To address more complex tasks, we need innovative approaches. Shifting from teaching models what to answer to how to solve problems, leveraging test-time compute and meta-RL, could be the solution. Check out Rafael's 🧵 below!

We have a new position paper on "inference time compute" and what we have been working on in the last few months! We present some theory on why it is necessary, how it works, and what it means for "super" intelligence.

Thrilled to share our latest work on consistency models! We simplified the math behind continuous-time consistency models, stabilized their training, and scaled them up to 1.5B parameters. We are now one step closer to real-time multimodal generation!

Excited to share our latest research progress (joint work with @DrYangSong ): Consistency models can now scale stably to ImageNet 512x512 with up to 1.5B parameters using a simplified algorithm, and our 2-step samples closely approach the quality of diffusion models. See more…

New follow-up work on the effects of synthetic data on model pre-training. It’s becoming increasingly clear that the model collapse issues predicted by prior works are not panning out in theory and practice. Industry labs now even have entire synthetic data pre-training teams.

📢New preprint📢 🔄Collapse or Thrive? Perils and Promises of Synthetic Data in a Self-Generating World 🔄 A deeper dive into the effects of self-generated synthetic data on model-data feedback loops w/ @JoshuaK92829 @ApratimDey2 @MGerstgrasser @rm_rafailov @sanmikoyejo…

Many (including me) who believed in RL were waiting for the moment when it would start scaling in a general domain, similarly to other successful paradigms. That moment has finally arrived, and it signifies a meaningful increase in our understanding of training neural networks.

the brain finetunes on synthetic data while it sleeps biorxiv.org/content/10.110…

whichever lab wins gold in the IMO, please do it in a way that generalizes and isn't just specific to math 🙏

All those prior methods are specific to the game. But if we can discover a general version, the benefits could be huge. Yes, inference may be 1,000x slower and more costly, but what inference cost would we pay for a new cancer drug? Or for a proof of the Riemann Hypothesis? 4/

Evolution used something less than infinite compute to arrive at intelligence. The question is, just how much can it be accelerated by applying more intelligence to the search process. Possibly the search process is fundamentally compute bound rather than intelligence bound.

AGI became inevitable after Claude Shannon, Ray Solomonoff, & Andrey Kolmogorov theorized that compression = intelligence. everything else was simply a matter of time

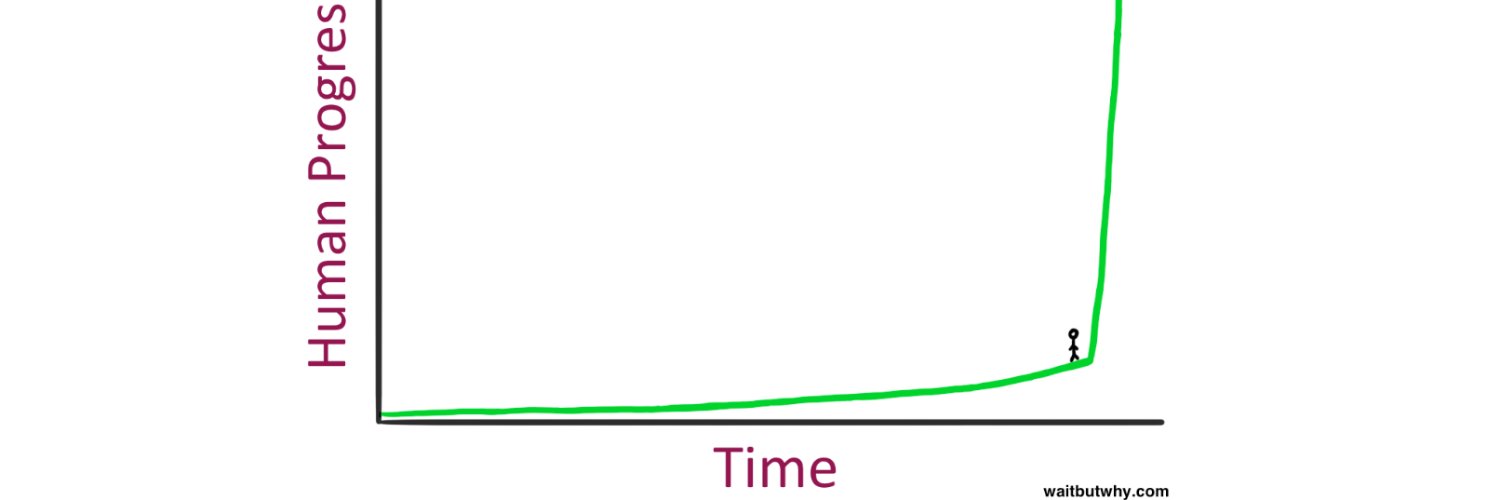

when did the singularity become inevitable? chatgpt? scaling law Kaplan et al? gpt2? transformer? alphazero? I believe replicating important cognitive functions in silico was predictably inevitable since the hardware acceleration of deep learning circa 2012. thank you ilya 🙏