Mathias Lechner

@mlech26l

Cofounder/CTO at Liquid AI and Research Affiliate MIT

OpenAI's marketing strategy: 1. Tease an open-source model for months but never release it 2. Release some agent tools instead 3. Profit

we will be having 3 grand hackathons soon at @LiquidAI_ offices in Boston, SF, and Tokyo. This is called “Bohemian Rhapsody, the Liquid edition”. you will build a wild ai agent with a swarm of tiny models to match/surpass frontier models in the real-world app of choice. join…

We're at #ICML2025! 🚀 Stop by the @LiquidAI_ booth in Vancouver this week to chat with our team about novel architectures, AI on the edge, and explore career opportunities

Finally, a dev kit for designing on-device, mobile AI apps is here: Liquid AI's LEAP venturebeat.com/business/final…

Our goal: Make running a local LLM as easy as calling a cloud API, at zero cost per token

Today, we release LEAP, our new developer platform for building with on-device AI — and Apollo, a lightweight iOS application for vibe checking small language models directly on your phone. With LEAP and Apollo, AI isn’t tied to the cloud anymore. Run it locally when you want,…

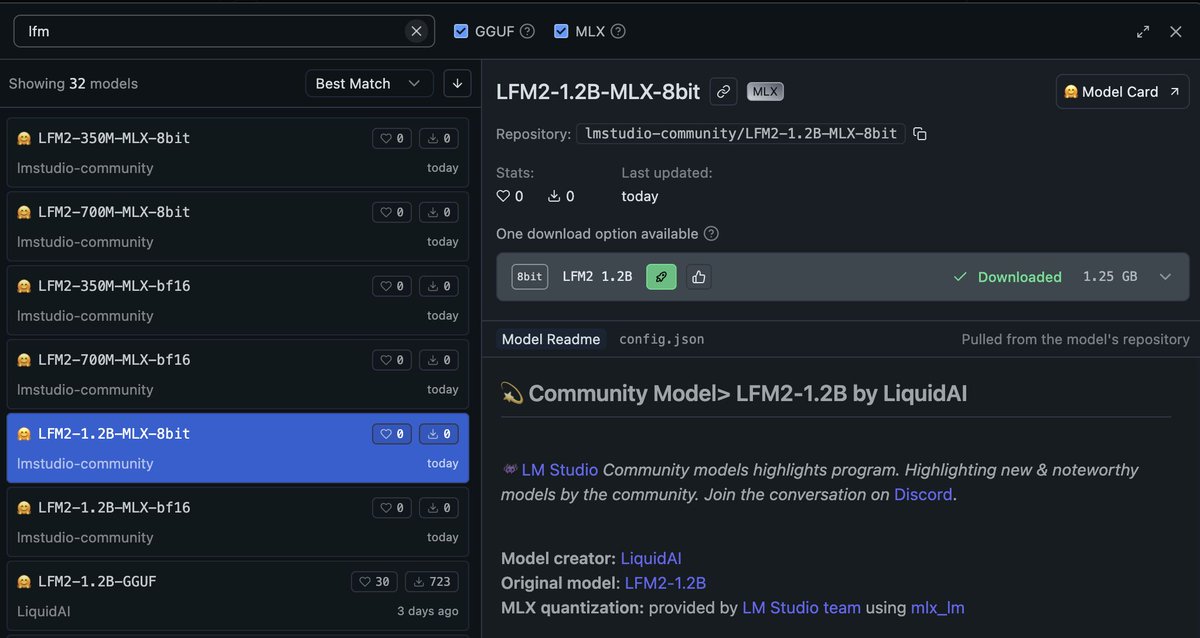

Thanks to MLX King @Prince_Canuma, LFM2 now runs on Apple Silicon GPUs

Trying out LFM2 350M from @LiquidAI_ and was mind-blown 🤯 The responses were very coherent. Less hallucinations compared to models of the same size. Very well done!! The best part: Q4_K_M quantization is just 230 Megabytes, wow!

LFM2-350M running on a Raspberry Pi ... with 42 tokens per second 🚀 (238 tokens per second prefill)

LFM2 is now available on @lmstudio 🎉 You can play with it today using the beta channel. I noticed that other small models often struggle to provide relevant answers within a structured output. We trained LFM2 on so much structured outputs that it doesn't mind at all.

The Liquid Neural Networks Story [almost technical] back in 2016, @mlech26l and I were looking into how neurons in animal brains exchange information with each other, when prompted by an input signal. this is not new! in fact it dates back to Lord Kelvin’s discovery of cable…

To run LFM2 in @lmstudio, do this: 1. Make sure you’re on 0.3.18 2. Press Cmd / Ctrl + Shift + R 3. Choose the “Beta” channel 4. Update llama.cpp 5. Run LFM2!

Try LFM2 with llama.cpp today! We released today a collection of GGUF checkpoints for developers to run LFM2 everywhere with llama.cpp Select the most relevant precision for your use case and start building today. huggingface.co/LiquidAI/LFM2-…

The easiest way to run LFM2 locally

Try LFM2 with llama.cpp today! We released today a collection of GGUF checkpoints for developers to run LFM2 everywhere with llama.cpp Select the most relevant precision for your use case and start building today. huggingface.co/LiquidAI/LFM2-…

We released our first open-weight models, setting a new bar for speed and efficiency huggingface.co/LiquidAI

Today, we release the 2nd generation of our Liquid foundation models, LFM2. LFM2 set the bar for quality, speed, and memory efficiency in on-device AI. Built for edge devices like phones, laptops, AI PCs, cars, wearables, satellites, and robots, LFM2 delivers the fastest…

🤏 Can small models be strong reasoners? We created a 1B reasoning model at @LiquidAI_ that is both accurate and concise We applied a combination of SFT (to raise quality) and GRPO (to control verbosity) The result is a best-in-class model without specific math pre-training

The updated LFM-3B and LFM-40B from @LiquidAI_ are now also trending on OpenRouter. <1s latency and fast inference, with 167.2 tokens/s for LFM-40B. Many more models to come!

.@LiquidAI_'s new models are taking off 🛫 Great at English, Arabic, and Japanese

This actually reproduces as of today. In 5 out of 8 generations, DeepSeekV3 claims to be ChatGPT (v4), while claiming to be DeepSeekV3 only 3 times. Gives you a rough idea of some of their training data distribution.

LOL I'm coming around to your theory