Pan Lu

@lupantech

Postdoc @Stanford | PhD @CS_UCLA @uclanlp | Amazon/Bloomberg/Qualcomm Fellows | Ex @Tsinghua_Uni @Microsoft @allen_ai | ML/NLP: AI4Math, AI4Science, LLM, Agents

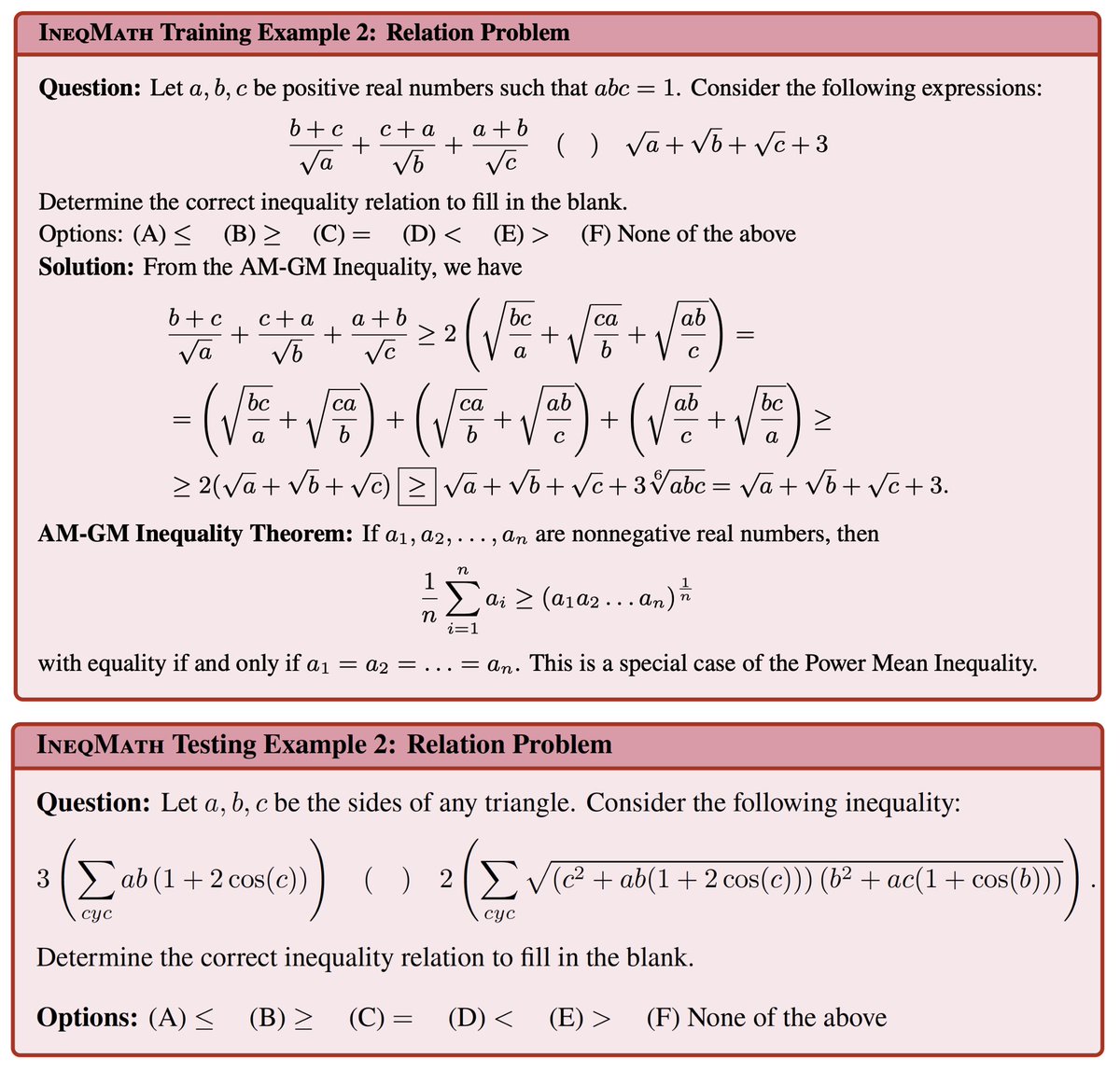

Do LLMs truly understand math proofs, or just guess? 🤔Our new study on #IneqMath dives deep into Olympiad-level inequality proofs & reveals a critical gap: LLMs are often good at finding answers, but struggle with rigorous, sound proofs. ➡️ ineqmath.github.io To tackle…

Today, I’m launching a deeply personal project. I’m betting $100M that we can help computer scientists create more upside impact for humanity. Built for and by researchers, including @JeffDean & @jpineau1 on the board, @LaudeInstitute catalyzes research with real-world impact.

🚀 Excited to share that the Workshop on Mathematical Reasoning and AI (MATH‑AI) will be at NeurIPS 2025! 📅 Dec 6 or 7 (TBD), 2025 🌴 San Diego, California

📢New conference where AI is the primary author and reviewer! agents4science.stanford.edu Current venues don't allow AI-written papers, so it's hard to assess the +/- of such works🤔 #Agents4Science solicits papers where AI is the main author w/ human advisors. 💡Initial reviews by…

I’ve joined @aixventureshq as a General Partner, working on investing in deep AI startups. Looking forward to working with founders on solving hard problems in AI and seeing products come out of that! Thank you @ychernova at @WSJ for covering the news: wsj.com/articles/ai-re…

Congratulations, Prof. Zhou!🎉

I've officially become an Associate Professor with tenure at @UCLA @UCLAengineering as we kick off the new academic year on July 1! Deepest gratitude to my mentors, my amazing students, and wonderful collaborators. Incredible journey so far—more exciting research ahead! 🚀

Excited to share our recent work DreamPRM, a multi-modal LLM reasoning method achieving first place on the MathVista leaderboard. DreamPRM is an LLM-agnostic framework that can be applied to any multi-modal LLM for improving its reasoning capabilities. It is a bi-level…

Introducing Fractional Reasoning: a mechanistic method to quantitatively control how much thinking a LLM performs. tldr: we identify latent reasoning knobs in transformer embedding ➡️ better inference compute approach that mitigates under/over-thinking arxiv.org/pdf/2506.15882

Excited to share Fractional Reasoning, a new work led by @ShengLiu_! By scaling a latent "reasoning vector," it continuously and reliably controls the reasoning intensity of LLMs at inference time. 📄 arxiv.org/abs/2506.15882 💻 shengliu66.github.io/fractreason/

🧵 1/ 🚀 Excited to share our latest work: Fractional Reasoning. We introduce a new way to continuously control the depth of reasoning and reflection in LLM for scaling test time compute, not just switch between “on” and “off” prompts. 💻 Website: shengliu66.github.io/fractreason/ #AI…

🧵 1/ 🚀 Excited to share our latest work: Fractional Reasoning. We introduce a new way to continuously control the depth of reasoning and reflection in LLM for scaling test time compute, not just switch between “on” and “off” prompts. 💻 Website: shengliu66.github.io/fractreason/ #AI…

Excited to share two advances that bring us closer to real-world impact in healthcare AI: SDBench introduces a new benchmark that transforms 304 NEJM cases into interactive diagnostic simulations. AI must ask questions, order tests, and weigh costs, mirroring the complexity of…

🎉Thrilled to receive the 2025 Google Research Scholar Award together with @_vztu ! Grateful for the support. Stay tuned for our exciting work on privacy, safety, and security in multimodal LLMs!

🔥 Thrilled and honored to share that I (together with my colleague @kuanhaoh_ ) have received a 2025 Google Research Scholar Award to advance Generative AI safety and security! 🎯 Our project, "Privacy, Safety, and Security Post-Alignment for Multimodal LLMs: A Machine…

Wrapped up Stanford CS336 (Language Models from Scratch), taught with an amazing team @tatsu_hashimoto @marcelroed @neilbband @rckpudi. Researchers are becoming detached from the technical details of how LMs work. In CS336, we try to fix that by having students build everything:

[LG] Solving Inequality Proofs with Large Language Models J Sheng, L Lyu, J Jin, T Xia... [Stanford University & UC Berkeley] (2025) arxiv.org/abs/2506.07927

👀can your language model solve this inequality? 👋check out ineqmath, our new challenging benchmark containing 200 high-school olympiad inequalities, with leading models scoring under half! also fun for humans to try😝

Do LLMs truly understand math proofs, or just guess? 🤔Our new study on #IneqMath dives deep into Olympiad-level inequality proofs & reveals a critical gap: LLMs are often good at finding answers, but struggle with rigorous, sound proofs. ➡️ ineqmath.github.io To tackle…

This correct answer-incorrect reasoning is also evidenced in the low score in the PutnamBench where the correct approach is rewarded, and in the recent IneqMath benchmark. x.com/lupantech/stat…

Do LLMs truly understand math proofs, or just guess? 🤔Our new study on #IneqMath dives deep into Olympiad-level inequality proofs & reveals a critical gap: LLMs are often good at finding answers, but struggle with rigorous, sound proofs. ➡️ ineqmath.github.io To tackle…