Oleksii Kuchaiev

@kuchaev

Director, AI model post-training @NVIDIA

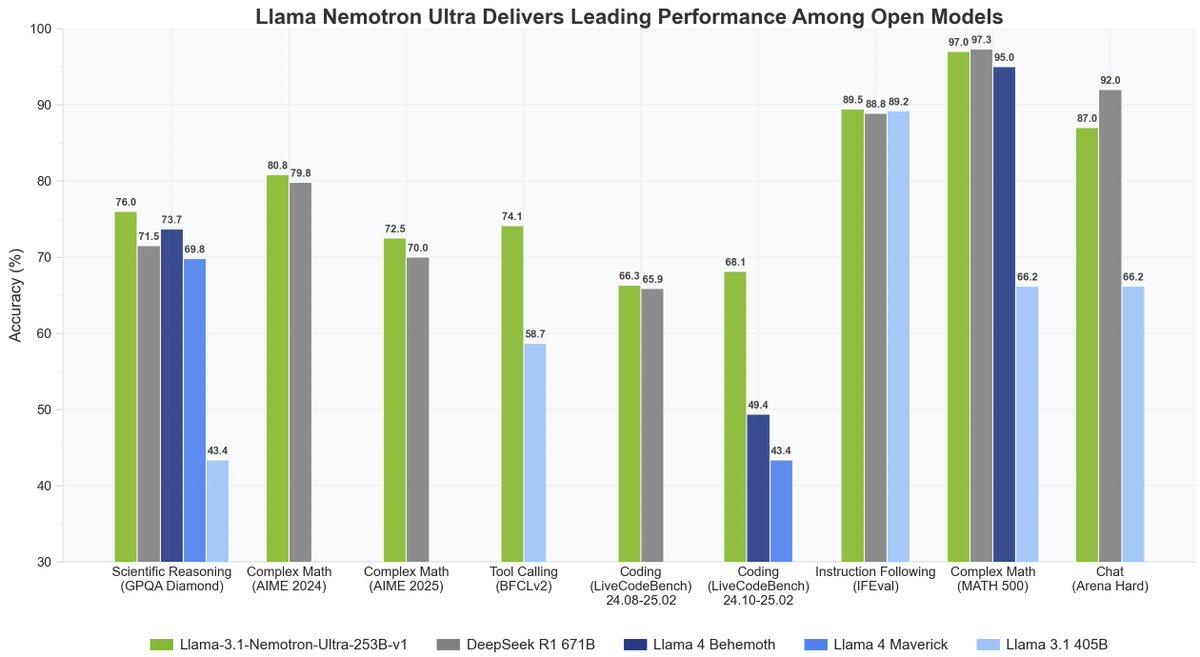

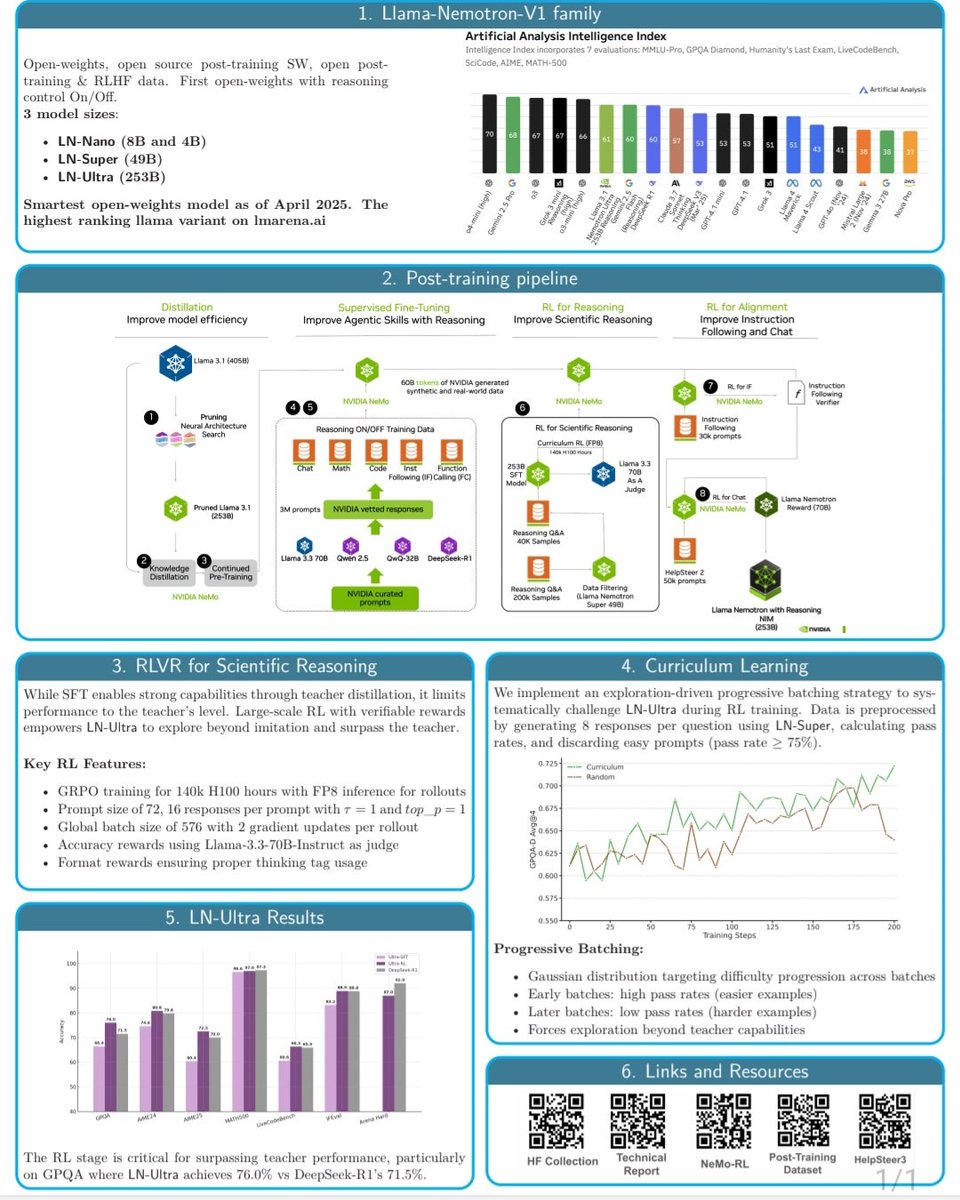

We are excited to release Llama-Nemotron-Ultra! This is a dense 253B model with reasoning ON/OFF. Open weights and post-training data. huggingface.co/nvidia/Llama-3… We started with Llama-405B, pruned it via NAS, then applied reasoning-focused post-training: SFT + RL in FP8.

The NeMo-RL team keeps shipping! The v0.3.0 release adds support for @deepseek_ai's DeepSeek-V3 as well as @Alibaba_Qwen's Qwen 3 models. github.com/NVIDIA-NeMo/RL…

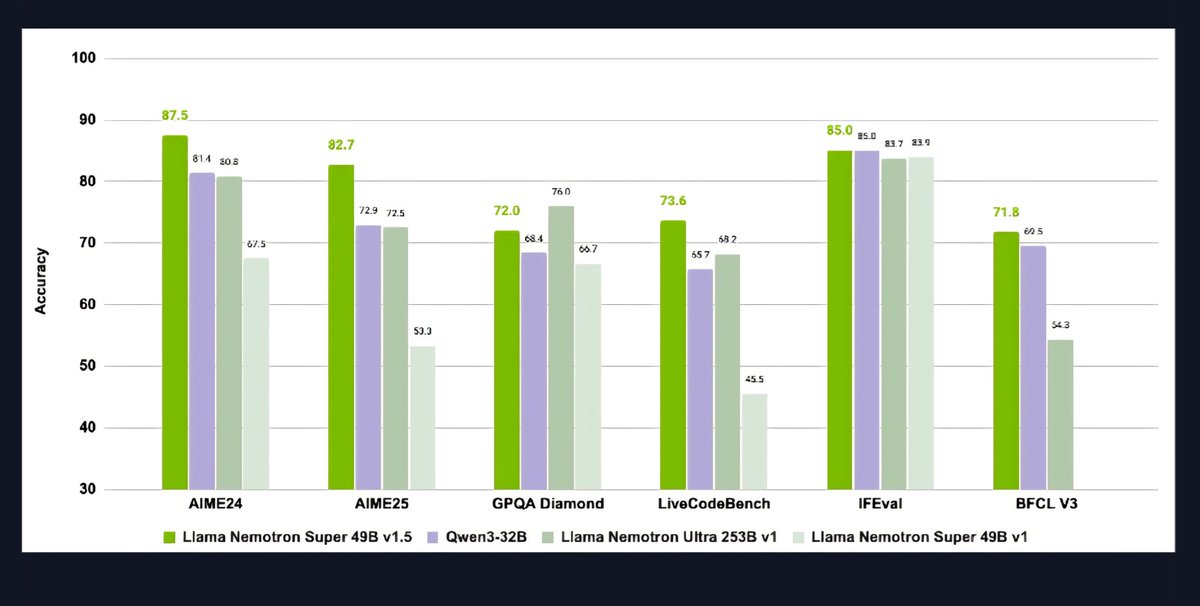

Very excited to announce Llama-Nemotron-Super-V1.5! Super-V1.5 is now better than Ultra-V1. This is currently the best model that can be deployed on a single H100. Reasoning On/Off and a drop-in replacement for V1. Open weights, code, and data on HF huggingface.co/nvidia/Llama-3…

On a roll! NVIDIA Parakeet TDT V2 streaming with just 160ms latency running on-device.

Introducing Real-time Transcription with Nvidia Parakeet - Same top accuracy as file transcription - Best-in-market 160 ms lips-to-screen latency - 744x more cost-efficient compared to cloud APIs - Available in Argmax Pro SDK starting today! Link in comments

What’s wrong with this world? Kharkiv. Several air-dropped bombs. On a residential neighborhood. In broad daylight. If it were New York or Paris — the world would burn with outrage. But since it’s just Ukraine? Silence. F*ck everyone who stays silent and pretends not to see.

Yes, there is an official marking guideline from the IMO organizers which is not available externally. Without the evaluation based on that guideline, no medal claim can be made. With one point deducted, it is a Silver, not Gold.

🚨 According to a friend, the IMO asked AI companies not to steal the spotlight from kids and to wait a week after the closing ceremony to announce results. OpenAI announced the results BEFORE the closing ceremony. According to a Coordinator on Problem 6, the one problem OpenAI…

We've released a series of OpenReasoning-Nemotron models (1.5B, 7B, 14B and 32B) that set new SOTA on a wide range of reasoning benchmarks among open-weight models of corresponding size. The models are based on the Qwen2.5 architecture and are trained with SFT on the data…

📣 Announcing the release of OpenReasoning-Nemotron: a suite of reasoning-capable LLMs which have been distilled from the DeepSeek R1 0528 671B model. Trained on a massive, high-quality dataset distilled from the new DeepSeek R1 0528, our new 7B, 14B, and 32B models achieve SOTA…

✈️ to ICML workshops to talk about the first open-weight model that outsmarted the original DS-R1 on the AA index. Happy to chat about all things post-training and AI in general. (The poster is at the EXAIT workshop this Saturday.)

If you are a researcher working on LLM post-training, RL and reasoning, you should really give NeMo-RL a try. It works with Hugging Face and megatron-core (when you need scale). Here is a great blog post by @AlexanderBukha1 and team on how to get started: nvidia-nemo.github.io/blog/2025/07/0…

Really excited to work with @AndrewYNg and @DeepLearningAI on this new course on post-training of LLMs—one of the most creative and fast-moving areas in LLM development. We cover the key techniques that turn pre-trained models into helpful assistants: SFT, DPO, and online RL.…

New Course: Post-training of LLMs Learn to post-train and customize an LLM in this short course, taught by @BanghuaZ, Assistant Professor at the University of Washington @UW, and co-founder of @NexusflowX. Training an LLM to follow instructions or answer questions has two key…

Stop Russian nightly terror. Help Ukraine protect its skies. DONATE 👇 u24.gov.ua/sky-sentinel?u…

Now that the budget bill has passed Congress, we can see what the projections look like for deficits, government debt, and debt service expenses. In brief, the bill is expected to lead to spending of about $7 trillion a year with inflows of about $5 trillion a year, so the debt,…

Post-training of LLMs is increasingly important, and RLHF remains a necessary step for an overall great model. Today we are releasing 6 new reward models, including GenRMs and multilingual models. These models are used to post-train the next *-nemotron models. huggingface.co/collections/nv…

NVIDIA benefits greatly from the open-source community, and we're excited to be able to contribute back. It's great to see so much energy in open-source AI!

This race is not zero-sum and benefits all of humanity!

‼️ As G7 leaders meet in Canada, Russia sends a clear message by bombing Kyiv. Homes destroyed, kindergarten hit, civilians wounded and killed — including a U.S. citizen. How much more must Ukraine endure before the world stops Russian terror once and for all?

Californians: If you’re protesting today, protect one another and hold the line for peace. There’s no place for violence in our democracy. If you see agitators, alert law enforcement and look out for your fellow citizens. Stay safe. Stay peaceful.

AI model post-training is rapidly improving. The plot below (starting from the same base model) illustrates about 10 months of progress in *open* post-training research. I’m not convinced that closed research can move as fast.

New reasoning Nemotron-H models are now publicly available. These models are based on a hybrid architecture! 47B and 8B in BF16 and FP8. Blog post: developer.nvidia.com/blog/nemotron-… Weights: huggingface.co/collections/nv…

Transformers are still dominating the LLM scene, but we show that higher-throughput alternatives exist which are just as strong! Grateful to have a part in the Nemotron-H Reasoning effort. 🙏 Technical report will be out soon, stay tuned!

I am alarmed by the proposed cuts to U.S. funding for basic research, and the impact this would have for U.S. competitiveness in AI and other areas. Funding research that is openly shared benefits the whole world, but the nation it benefits most is the one where the research is…