Kexun Zhang

@kexun_zhang

PhD student at @LTIatCMU. Previously at @ucsbNLP, @ZJU_china. language lover.

RLVR is not just about RL, it's more about VR! Particularly for LLM coding, good verifiers (tests) are hard to get! In our latest work, we ask 3 questions: How good are current tests? How do we get better tests? How much does test quality matter? leililab.github.io/HardTests/

This article from @TheEconomist offers an accurate overview of key dynamics shaping the development of AI today: the risks of the rapid race toward AGI and ASI, the challenges posed by open-sourcing frontier models, the deep uncertainty revealed by ongoing scientific debates and…

With the amount of money going into AI, it's shocking to me how much NeurIPS/OpenReview needs to limit the rebuttal (e.g., not allowing images) to save on infrastructure. A tiny bit of the AI money could have made everyone's life better. That says something about academic conferences.

🚀 We’re thrilled to share our major advance in formal proving: Tencent-IMO (tencent-imo.github.io). Although AI systems like DeepMind's and OpenAI's have achieved gold-level performance, their proofs still need human verification. What if AI could generate proofs that are 100% verifiable?

After three intense months of hard work with the team, we made it! We hope this release can help drive the progress of Coding Agents. Looking forward to seeing Qwen3-Coder continue creating new possibilities across the digital world!

>>> Qwen3-Coder is here! ✅ We’re releasing Qwen3-Coder-480B-A35B-Instruct, our most powerful open agentic code model to date. This 480B-parameter Mixture-of-Experts model (35B active) natively supports 256K context and scales to 1M context with extrapolation. It achieves…

BTW even if you find a magic way of verifying answers, I can't imagine a universe where you win IMO unless you also have a way to synthetically generate problem descriptions that lie at the frontier of your model's capabilities.

This is great! But will you also consider setting up an official satellite location in China, given that so many great NeurIPS papers come from China and so many Chinese researchers couldn't attend the conference due to US/Canada visa issues?

Okay. So the proposed safe AGI is like the “Trisolarans” from The Three-Body Problem, and humanity’s last stand is our ability to plot and scheme? 🤣 (Sorry for the spoilers to those who haven’t read the series yet…)

A simple AGI safety technique: AI’s thoughts are in plain English, so just read them. We know it works, with OK (not perfect) transparency! The risk is fragility: RL training, new architectures, etc. threaten transparency. Experts from many orgs agree we should try to preserve it:…

Data, code all released at github.com/LeiLiLab/HardT…


Anyone serious about doing AI for science should read Zhenqiao’s work.

Sad to miss #ICML2025 due to visa issues this year, but it's a great time to share our new paper PPDiff, a diffusion model for protein-protein complex sequence-structure co-design, with my great collaborators @lileics @leetx1010 Martin Renqiang Min.


🚀 Heading to #ICML2025! I'll be attending July 14-20 and would love to discuss exciting research in reasoning, RL, agents, and AI safety. I'll also be on the job market next cycle, so I'm happy to discuss opportunities! DM me to schedule an in-person meeting.

committed to doing my part in decreasing reviewer workload by writing fewer papers

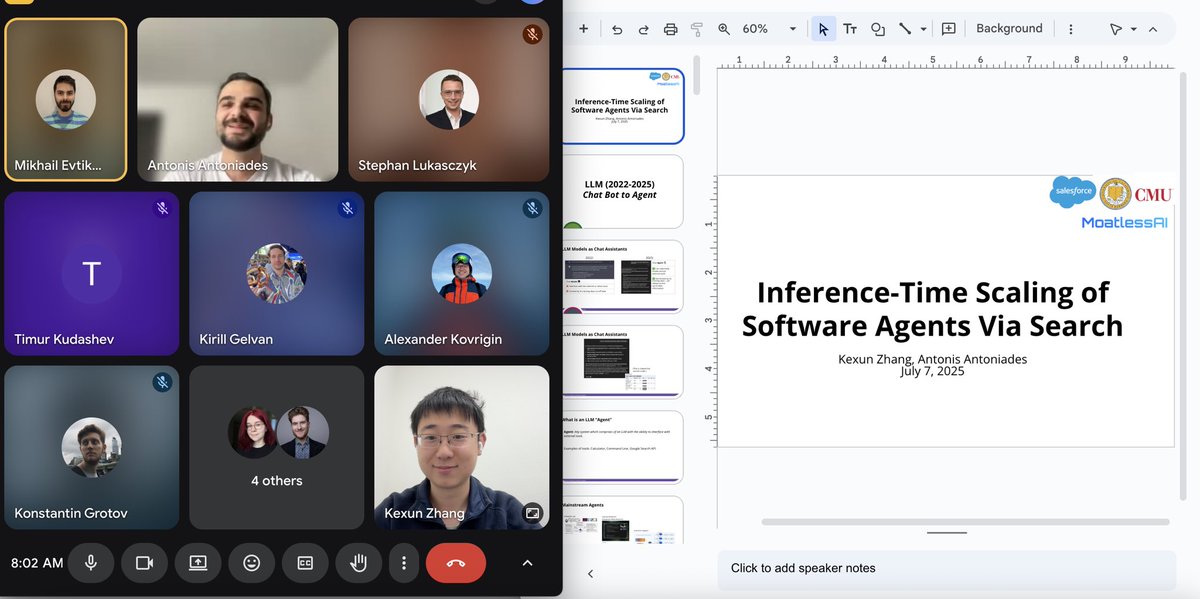

Giving a talk at @jetbrains with @anton_iades on inference time scaling for SWE agents! Am I allowed to mention the word “cursor” here?

lmaaooo, need more of this in ML papers. I loved the writing style of YOLOv3: the intro was hilarious, and the graph literally just goes off the y-axis and they just leave it there to flex 😂

Physicists and mathematicians really have the wriest humor.

🚨 We’re hiring on the Open-Endedness team @LilaSciences and I’m beyond excited about our work! We research AI that doesn’t just solve problems, it creatively explores new scientific frontiers. If that excites you or someone you know 📢 Please RT + read on 🧵👇

Too many think the problem with LLMs is that they’re not human enough. But the problem with LLMs is that they’re not computer enough. We’re used to a standard of reliability from computer programs that LLMs so far don’t live up to. But making them human-like doesn’t fix that!

This feels like a great, DSPy-pilled bet from Mira's Thinking Machines Lab: to focus on building and optimizing downstream LLM systems. BUT it is simultaneously a very un-AGI-pilled viewpoint. If AGI is coming in months, why raise billions to build business-aligned LLMs? The…