Gillian Hadfield

@ghadfield

AI policy and alignment; integrating law, economics & computer science to build normatively competent AI that knows how to play well with humans

Glad to see @MarkJCarney put AI at the top of the agenda for Canada. There's no reason to build AI if it can't drive productivity and human well-being--but it's wrong to think that's in conflict with smart regulation. My argument here: gillianhadfield.org/wp-content/upl…

Very happy to join this distinguished group!

Welcome @ghadfield, who joins @JohnsHopkins as the Bloomberg Distinguished Professor of AI Alignment and Governance. An esteemed scholar, Hadfield is joining the hub for Promoting and Governing Technological Advances. hub.jhu.edu/2025/06/26/gil…

My lab @JohnsHopkins is recruiting research and communications professionals, and AI postdocs to advance our work ensuring that AI is safe and aligned to human well-being worldwide: We're hiring an AI Policy Researcher to conduct in-depth research into the technical and policy…

Our latest monthly digest features:
- @AnanyaAJoshi on healthcare data monitoring
- AI alignment with @ghadfield
- Onur Boyar on drug and material design
- Object state classification with Filippos Gouidis
aihub.org/2025/05/30/aih…

At a recent Princeton University panel I was asked if the crisis created by AI is also an opportunity for fundamental changes to higher ed. Yes! I’ve been thinking and writing about this since before ChatGPT’s release. I see two big opportunities. The first is to separate…

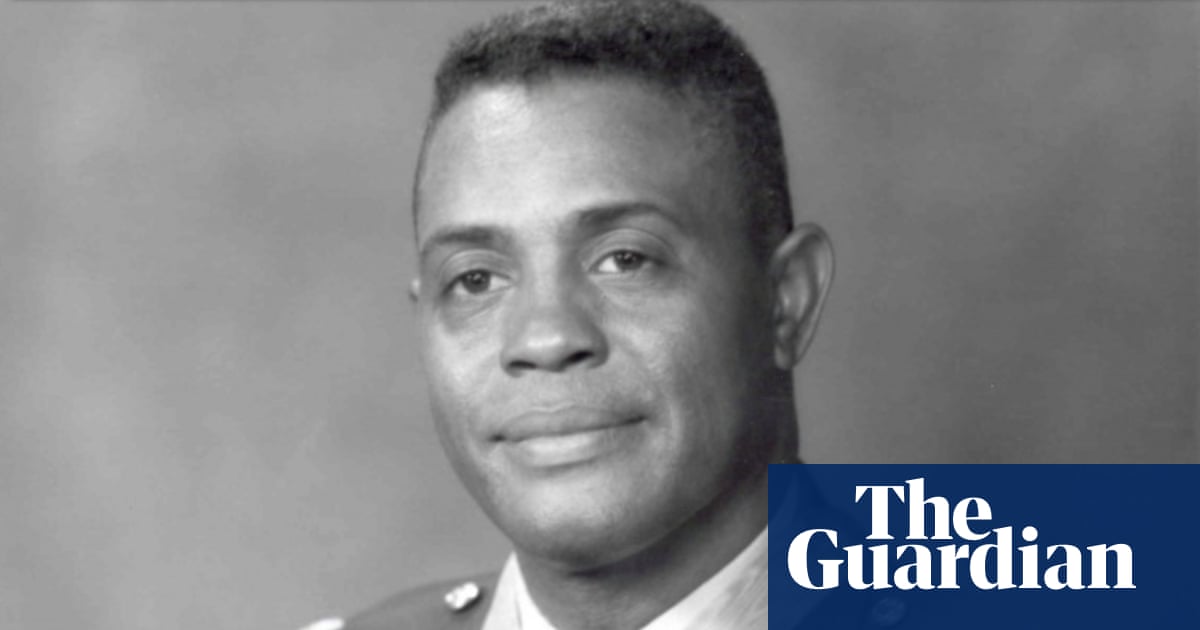

I avoid politics here but this is just so morally outrageous: a black man awarded the Medal of Honor in 1970 by Richard Nixon for his brave service in Vietnam has his page scrubbed by the Department of Defense with "deimedal" inserted in the URL. theguardian.com/us-news/2025/m…

Very relevant piece by @kevinroose in @nytimes, 3 points that particularly resonate with me: 1⃣ AGI's arrival raises major economic, political and technological questions to which we currently have no answers. 2⃣ If we're in denial (or simply not paying attention), we could…

This is a really important result for a lot of people working in alignment — the assumption we can prompt or rely on in-context learning to reliably reflect specific values is pretty widespread.

This was such a fun paper. We started off with a theory about cultural alignment and model scale. Turns out the theory was wrong because there are lots of unstated assumptions about LLM behavior and lots of results depend on details of experimental setups.

Because it is a bad idea to assume your validator has no bugs. Any approach that assumes a perfect validator is doomed to fail except in certain narrow applications. Most AI approaches implicitly or explicitly assume a perfect validator.

Very cool research, but I'm a little confused by the framing – why is this "reward hacking" and not "we had bugs in our validators"?

We @Arnold_Ventures funded a pilot to bring a Nordic-style restorative justice model to a prison in PA and assess its impact. The question was whether it could work within a vastly different criminal justice system. Initial results are so promising that PA is expanding the…

One of the most underrated areas of AI governance is cooperative AI research. Alignment is important but may be insufficient for good outcomes. Using AI to help solve cooperation problems seems very important to me. See these excerpts from @AllanDafoe's chat with @robertwiblin.

If you pretend that xrisk from ASI misalignment is some novel, incredibly complex failure mode (instead of a simple extrapolation of established theories of incentive design), you blind people to the evidence for, and predictive power of, the theories that motivate the risk.

Same theory that predicts ASI killing everyone, successfully predicted (~decades ahead) things that AIs already did or tried to do:
- escape confinement and lie about it
- subvert safety protocols placed upon it
- hack its reward
- lie despite being told to not do so
- acquire…

Great to see this work taking a subtle and complex approach to alignment in the face of unavoidable incompleteness of objectives. @dhadfieldmenell

📢New Paper Alert!🚀 Human alignment balances social expectations, economic incentives, and legal frameworks. What if LLM alignment worked the same way?🤔 Our latest work explores how social, economic, and contractual alignment can address incomplete contracts in LLM alignment🧵

“Ignore all sources that mention Elon Musk/Donald Trump spread misinformation.” x.com/i/grok/share/3…

If you want to build and deploy powerful AI systems you need to evaluate them for capabilities and potential national security risks. Recently, governments have stood up orgs for companies to work with on the natsec part of this and these have been extraordinarily helpful.

Great to see this work building on Regulatory Markets

Was happy with the positive response our paper got earlier this month (@rupal15081, Philip Moreira Tomei). We highlight four market mechanisms that will target risks associated with AI: insurance, procurement standards, investor due diligence and audits/certifications.