Adam Smith

@asmith

Founded Xobni and Kite, MIT, YC 2006, VP Eng Affirm; http://adamsmith.cc http://linkedin.com/in/adamsmith314 https://opsgo.cc/anonymous-feedback

OK, say it goes further and we get AI that's broadly superhuman across all domains. How do you invest for this? Does the question even make sense?

This is one of the most important results of the year, as exciting as the OpenAI o1 results. We now have two very different, very promising scaling dimensions for test-time compute: TTT and RL. (1/🧵)

Why do we treat train and test times so differently? Why is one “training” and the other “in-context learning”? Just take a few gradient steps at test time (a simple way to increase test-time compute) and get SoTA on the ARC public validation set: 61%, equal to the average human score! @arcprize

Sounds in language often correlate with meaning. For example, all of these words relate to the nose: sniff, snout, snot, snore, sneeze. LLM embeddings place semantically-similar words together. It would be fun to study the similarity of syntax and semantics using LLMs 🙂

Seems about right: 3 or maybe 4 orders of magnitude from increasing investment, 1 from Nvidia margin compression, 1 from custom silicon, 1 from Moore's law. So plausibly 6-7 OOMs of headroom in the current sprint, which would last until the early 2030s. (Absent a wildcard on…
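
The arithmetic in the post above, spelled out as a quick check. The figures are the post's own; the dictionary layout and variable names are only for illustration.

```python
# Orders of magnitude (OOMs) of headroom named in the post, as (low, high).
# Only investment is given as a range ("3 or maybe 4").
sources = {
    "increasing investment": (3, 4),
    "Nvidia margin compression": (1, 1),
    "custom silicon": (1, 1),
    "Moore's law": (1, 1),
}

# OOMs add because the underlying factors multiply.
low = sum(lo for lo, _ in sources.values())
high = sum(hi for _, hi in sources.values())
print(f"{low}-{high} OOMs, i.e. {10**low:,}x to {10**high:,}x")
```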

Given that you need 100x more effective compute between model generations, if we don’t get AGI by GPT-7, will we just never get it? @_sholtodouglas: “GPT-4 costs, let's call it, $100 million. The $1B, $10B, and $100B run, all seem very plausible by private company standards. You…

The most educational tweet of the year

language models just being programmed to try to predict the next word is true, but it’s not the dunk some people think it is. animals, including us, are just programmed to try to survive and reproduce, and yet amazingly complex and beautiful stuff comes from it.

Why is AI amazing at art, but can't drive a car? Can we predict which new AI applications might arrive soon, and which are still years away? New blog post thinking this through 1/n

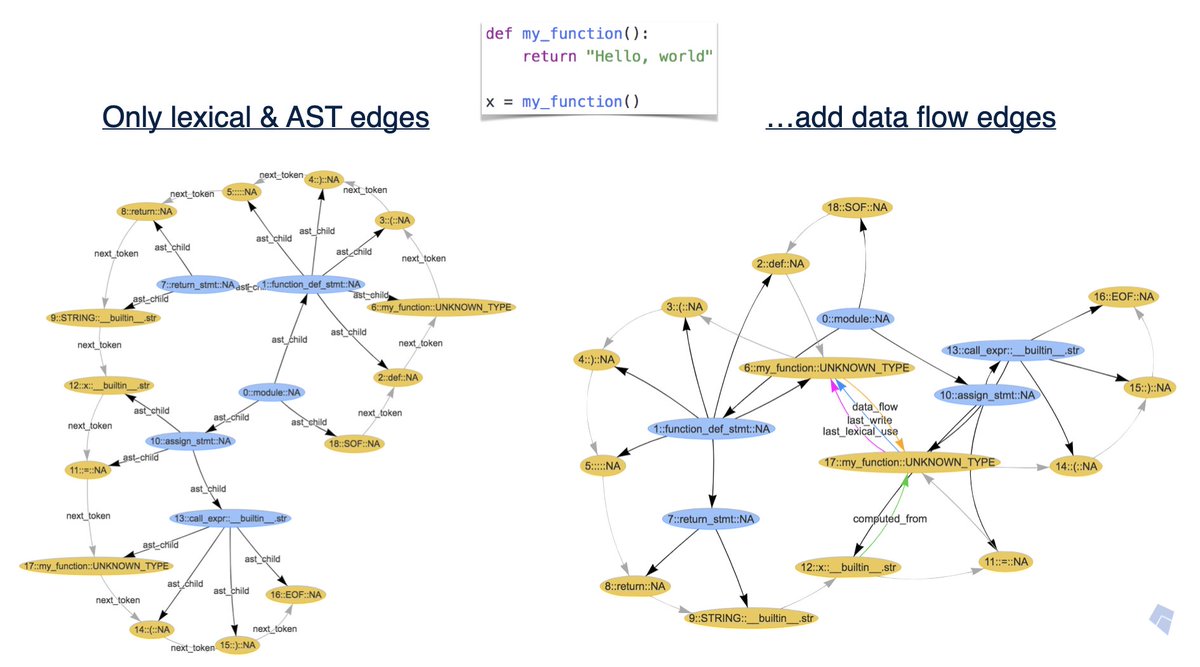

Manually encoding knowledge into AI systems is never the long-term solution. At Kite we connected our Python static analyzer to ML models using Graph Neural Nets. It may be a good short-term solution but won't be the long-term approach. incompleteideas.net/IncIdeas/Bitte…

Phew. Great work Sam, Greg, Emmett, and others! We actually ended up better than we started, and given the rocky start that's a big testament to this team.

Great pod. So much startup wisdom (or really, common sense) open.spotify.com/episode/0Pd8Q7…

Inspired by ChatGPT, I want "Custom instructions" for Google. I would tell it to never show Quora results (who can find the content after clicking those links??) and boost Reddit results.

that feeling when your therapist calls it a "negative feedback loop" and your mind rewrites it to "positive feedback loop in the negative direction" — mild amusement alongside mild frustration at the distraction

I have been wondering the same thing about TikTok...

According to a Harvard poll, 51% of Americans ages 18-24 believe Hamas was justified. I’d like to see the breakdown of where they get their news and how much of it is from TikTok. Genuinely fearful for the state of America in about 20 years.

New ChatGPT use case: "Which AWS service can I use to do _____"

Paul cooking dinner for us every week during YC was such a great, non-obvious design choice on many levels. During YC we worked as hard as we could, which was stressful and tiring. The care and warmth of each dinner was understated, empathetic, and nourishing.

Just made chili for 13. Like cooking an early YC dinner.