comonoidal esotericist 🇨🇿🇪🇺🇺🇸🦀

@adamnemecek1

Fixing machine learning @ http://traceoid.ai. There is no AGI without energy-based models. As seen on HN: http://news.ycombinator.com/user?id=adamnemecek

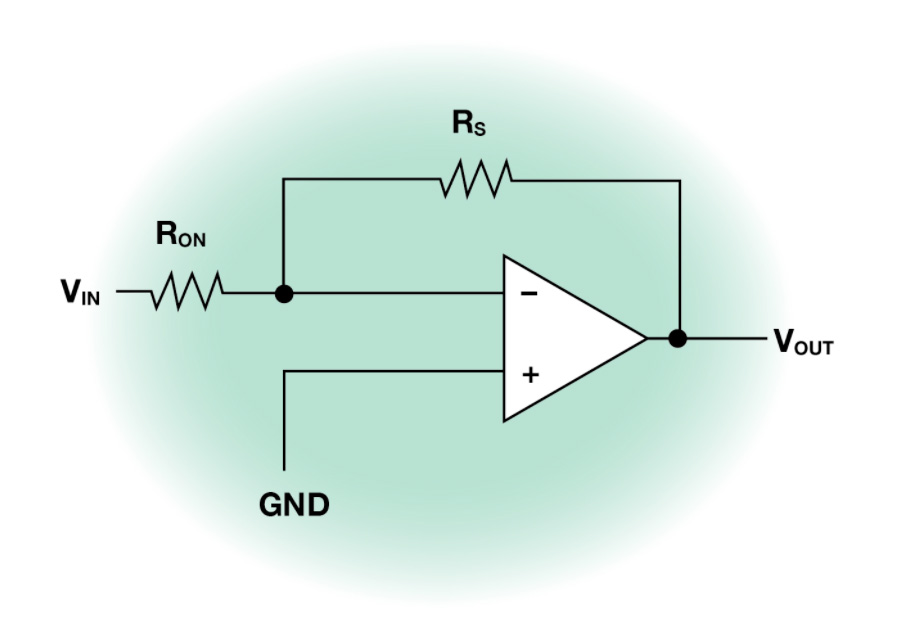

The Digital Revolution and its consequences have been a disaster for the human race. They stole the feedback loop and gave you a for loop instead. A for loop performs basic repetition, while a feedback loop captures a relationship between quantities, a push and pull, and finds an…

Over the weekend, I took Typst (typst.app), the LaTeX alternative written in Rust, for a spin. Liked what I saw; I'll switch from my current md -> pandoc -> LaTeX workflow. The Typst scripting language is actually sane. I never managed to write a macro in LaTeX.

Yann is really right about this. AFAIK only Traceoid is working on this.

Yann LeCun on architectures that could lead to AGI: "Abandon generative models in favor of joint-embedding architectures. Abandon probabilistic models in favor of energy-based models. Abandon contrastive methods in favor of regularized methods. Abandon Reinforcement Learning in…

On a daily basis, I’m reminded of how much I used to hate CLI apps until Rust came about.

My quality of life would triple if I could never hear "Marcus Aurelius" ever again.

Apt analogy: statistical mechanics and AI are inextricably linked.

Giorgio Parisi opening StatPhys29: AI resembles the heat engine in that the technology arrived before the theory.

A recurring sentiment at Traceoid HQ.

> tfw John Hopfield inventing Hopfield networks

Yeah, OK, turns out Typst isn’t quite ready for this use case. Zola it is.

Typst can be used as a static website generator. Currently using this to redesign traceoid.ai. Can't believe that this is the least painful option for me in the year of our Lord 2025.

I'm suffering from a Mandela effect: I could have sworn there was a guy called Jeffrey Epstein who did a bunch of fucked up shit, but apparently there was no one like that.

There will be a time when no one remembers ML before EBMs.

Energy-Based Transformers are Scalable Learners and Thinkers

The Claude chat search is so bad I have to export my data and grep for stuff. Not a great UI.

Bathroom break! Go now or forever hold your piss.

Really wish Claude and ChatGPT would let me share a link to a particular section of a conversation. Just an incremental id per section would be fine, like claude.ai/share/xxxxx#1 for the first section, claude.ai/share/xxxxx#2 for the second, and so on.

I know I’m biased but these developments are really unsurprising. Anyway, if anyone wants to talk about approaches that have an actual shot at leading to AGI, hit me up.

The data labeling industry will be made obsolete when energy-based models become viable. EBMs are unsupervised and as such don’t need labels.
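A minimal sketch of what "no labels" means in practice, assuming a toy one-dimensional state space discretized onto a grid. `GRID`, `FEATS`, `fit_ebm`, `model_moments` and the quadratic energy E_θ(x) = θ₀x + θ₁x² are hypothetical choices for illustration, not taken from any particular EBM paper. Maximum-likelihood training consumes only the raw samples; there are no targets anywhere. The exact sum over the grid stands in for the partition function, which real high-dimensional EBMs cannot compute.

```python
import math

# Hypothetical toy setup: states live on a finite grid so Z can be summed exactly.
# Real EBMs over images or text cannot do this, which is why Z is the hard part.
GRID = [i / 10.0 for i in range(-50, 51)]  # x in [-5, 5] with step 0.1
FEATS = [(x, x * x) for x in GRID]  # E_theta(x) = theta[0]*x + theta[1]*x**2


def model_probs(theta):
    """Boltzmann distribution p(x) = exp(-E(x)) / Z over GRID."""
    neg_e = [-(theta[0] * f0 + theta[1] * f1) for f0, f1 in FEATS]
    m = max(neg_e)  # shift before exponentiating for numerical stability
    w = [math.exp(v - m) for v in neg_e]
    z = sum(w)
    return [wi / z for wi in w]


def model_moments(theta):
    """E_model[(x, x^2)] under the current energy."""
    p = model_probs(theta)
    return [sum(pi * f[k] for pi, f in zip(p, FEATS)) for k in range(2)]


def fit_ebm(samples, lr=0.05, steps=5000):
    """Maximum-likelihood fit from unlabeled samples: data points only, no targets.

    The gradient of the mean negative log-likelihood, mean(E(x_i)) + log Z,
    is E_data[features] - E_model[features].
    """
    n = len(samples)
    data = [sum(samples) / n, sum(x * x for x in samples) / n]
    theta = [0.0, 0.1]  # start from a broad, centered energy bowl
    for _ in range(steps):
        model = model_moments(theta)
        theta = [t - lr * (d - mdl) for t, d, mdl in zip(theta, data, model)]
    return theta
```

For example, `fit_ebm([-1.0, -0.5, 0.0, 0.5, 1.0, 1.5])` should drive `model_moments(theta)` to the data moments, roughly `[0.25, 0.79]`. The update is the usual "positive phase minus negative phase": energy goes down where the data lies and up where the model puts mass. In a real EBM the negative phase has to come from model samples (e.g. MCMC), because Z cannot be summed.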

1b of human data spend is on the market, and the retards over at Mercor decide now is the time to hire for full-time roles. Stick to rebuilding the caste system. Who tf is giving them a 10b valuation?

Outstanding line of research! It's a travesty EBMs are not a more active research area. Essentially all problems with current ML (e.g. lack of interpretability, slow inference, need for labels) stem from the fact that we are not using EBMs.

1) EBMs are generally challenging to train due to the partition function (normalization constant). At first, learning the partition function seems weird O_o But the log-partition exactly coincides with the Lagrange multiplier (dual variable) associated with equality constraints.
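One standard way to see the claim, sketched here as a max-entropy reading over a finite state space (this is not necessarily the derivation the thread goes on to give): maximize a slightly modified entropy subject to an energy constraint and the normalization (equality) constraint.

$$
\max_{p \ge 0}\; -\sum_x p(x)\log p(x) + \sum_x p(x)
\quad \text{s.t.} \quad \sum_x p(x)E(x) = U, \qquad \sum_x p(x) = 1
$$

With multipliers β (energy) and λ (normalization), stationarity gives −log p(x) − βE(x) − λ = 0, so p(x) = e^{−βE(x)−λ}, and the normalization constraint forces λ = log Σ_x e^{−βE(x)} = log Z. The extra Σp term is constant on normalized p, so it does not move the maximizer; with the plain Shannon entropy the same multiplier comes out as log Z − 1. Eliminating p leaves a one-dimensional convex dual (up to the constant βU), g(λ) = λ + Σ_x e^{−βE(x)−λ}, minimized exactly at λ = log Z with minimum value log Z + 1. The code below treats log Z as a free parameter and "learns" it by gradient descent on g. The function names and the explicit energy list are hypothetical; in a real EBM the sum would have to be estimated from samples.

```python
import math


def dual_objective(lam, energies, beta=1.0):
    """g(lam) = lam + sum_x exp(-beta*E(x) - lam); convex in lam, minimized at log Z."""
    return lam + sum(math.exp(-beta * e - lam) for e in energies)


def learn_log_partition(energies, beta=1.0, lr=0.5, steps=200):
    """Treat log Z as a free parameter lam and fit it by gradient descent on g."""
    lam = -beta * min(energies)  # a lower bound on log Z; lam never drops below it
    for _ in range(steps):
        s = sum(math.exp(-beta * e - lam) for e in energies)  # equals Z * exp(-lam)
        lam -= lr * (1.0 - s)  # dg/dlam = 1 - Z * exp(-lam), zero exactly at log Z
    return lam
```

Since g(λ) ≥ log Z + 1 for every λ, any λ also gives an upper bound on log Z, which is what makes this usable with noisy estimates of the sum. At the fitted λ, the multiplier-defined distribution e^{−βE(x)−λ} sums to 1, i.e. the dual variable and the log-partition coincide.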