Acyr Locatelli

@acyr_l

Lead pre-training @Cohere

Dropping tomorrow on MLST - the serious problems with Chatbot Arena. We will talk about the recent investment and the explosive paper from Cohere researchers which identified several significant problems with the benchmark.

Our team is hiring! Please consider applying if you care deeply about data, and want to train sick base models :)) jobs.ashbyhq.com/cohere/859e2e4…

We've just released 100+ intermediate checkpoints and our training logs from SmolLM3-3B training. We hope this can be useful to researchers working on mech interp, training dynamics, RL and other topics :) Training logs: -> Usual training loss (the gaps in the loss are due…

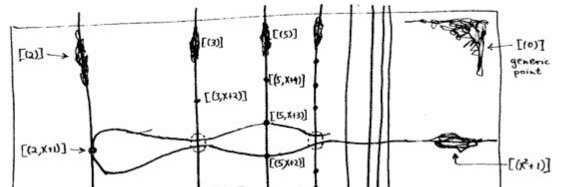

LLMs can be programmed by backprop 🔎 In our new preprint, we show they can act as fuzzy program interpreters and databases. After being ‘programmed’ with next-token prediction, they can retrieve, evaluate, and even *compose* programs at test time, without seeing I/O examples.

Excited to introduce LLM-First Search (LFS) - a new paradigm where the language model takes the lead in reasoning and search! LFS is a self-directed search method that empowers LLMs to guide the exploration process themselves, without relying on predefined heuristics or fixed…

We are running our first physical event in London on 14th July! We have Tim Nguyen @IAmTimNguyen from DeepMind and Max Bartolo @max_nlp from Cohere and Enzo Blindow (VP of Data, Research & Analytics) at @Prolific joining us. Not many seats for the first one.…

Introducing the world's first reasoning model in biology! 🧬 BioReason enables AI to reason about genomics like a biology expert. A thread 🧵:

If you are based in Zurich (or anywhere rly) and write code for ML accelerators (including cuda/rocm) HMU

Cohere also has a CUDA team

Presenting this today 3-5:30 at poster #208, come say hi 🙋‍♀️

How do LLMs learn to reason from data? Are they ~retrieving the answers from parametric knowledge🦜? In our new preprint, we look at the pretraining data and find evidence against this: Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢 🧵⬇️

Very proud of this work, which is being presented @iclr_conf later today. While I will not be there, catch up with @viraataryabumi and @ahmetustun89, who are both fantastic and can share more about our work at both @Cohere_Labs and @cohere. 🔥✨

In our latest work, we ask “what is the impact of code data used in pre-training on non-code tasks?” Work w @viraataryabumi, @yixuan_su, @rayhascode, @adrien_morisot, @1vnzh, @acyr_l, @mziizm, @ahmetustun89 @sarahookr 📜 arxiv.org/abs/2408.10914

RL is not all you need, nor attention nor Bayesianism nor free energy minimisation, nor an age of first person experience. Such statements are propaganda. You need thousands of people working hard on data pipelines, scaling infrastructure, HPC, apps with feedback to drive…

Excited to announce that @Cohere and @Cohere_Labs models are the first supported inference provider on @huggingface Hub! 🔥 Looking forward to this new avenue for sharing and serving our models, including the Aya family and Command suite of models.

Great interview! (even at 1x)

I really enjoyed my @MLStreetTalk chat with Tim at #NeurIPS2024 about some of the research we've been doing on reasoning, robustness and human feedback. If you have an hour to spare and are interested in some semi-coherent thoughts revolving around AI robustness, it may be worth…

Highly recommend working with Ed! He comes in handy.

Highly recommend working with Sander. Also playing SC2 with Sander.

I added @cohere Command A to this chart. I had to extend the axis a bit, though…

Introducing Mistral Small 3.1. Multimodal, Apache 2.0, outperforms Gemma 3 and GPT 4o-mini. mistral.ai/news/mistral-s…

🚀 Big news: @cohere's latest Command A now climbs to #13 on Arena! Another organization joining the top-15 club - congrats to the Cohere team!
Highlights:
- open-weight model (111B)
- 256K context window
- $2.5/$10 input/output MTok
More analysis👇

We’re excited to introduce our newest state-of-the-art model: Command A! Command A provides enterprises maximum performance across agentic tasks with minimal compute requirements.

Really proud of the work that went into pre-training this model!

Cohere releases Command A on Hugging Face. Command A is an open-weights 111B parameter model with a 256k context window focused on delivering great performance across agentic, multilingual, and coding use cases. Command A is on par or better than models like GPT-4o and Deepseek…