Abhinav Java

@abhinav_java

navigating the sea of entropy | RF @Microsoft Research | Prev @Adobe, @dtu_delhi | Looking for PhD opportunities!

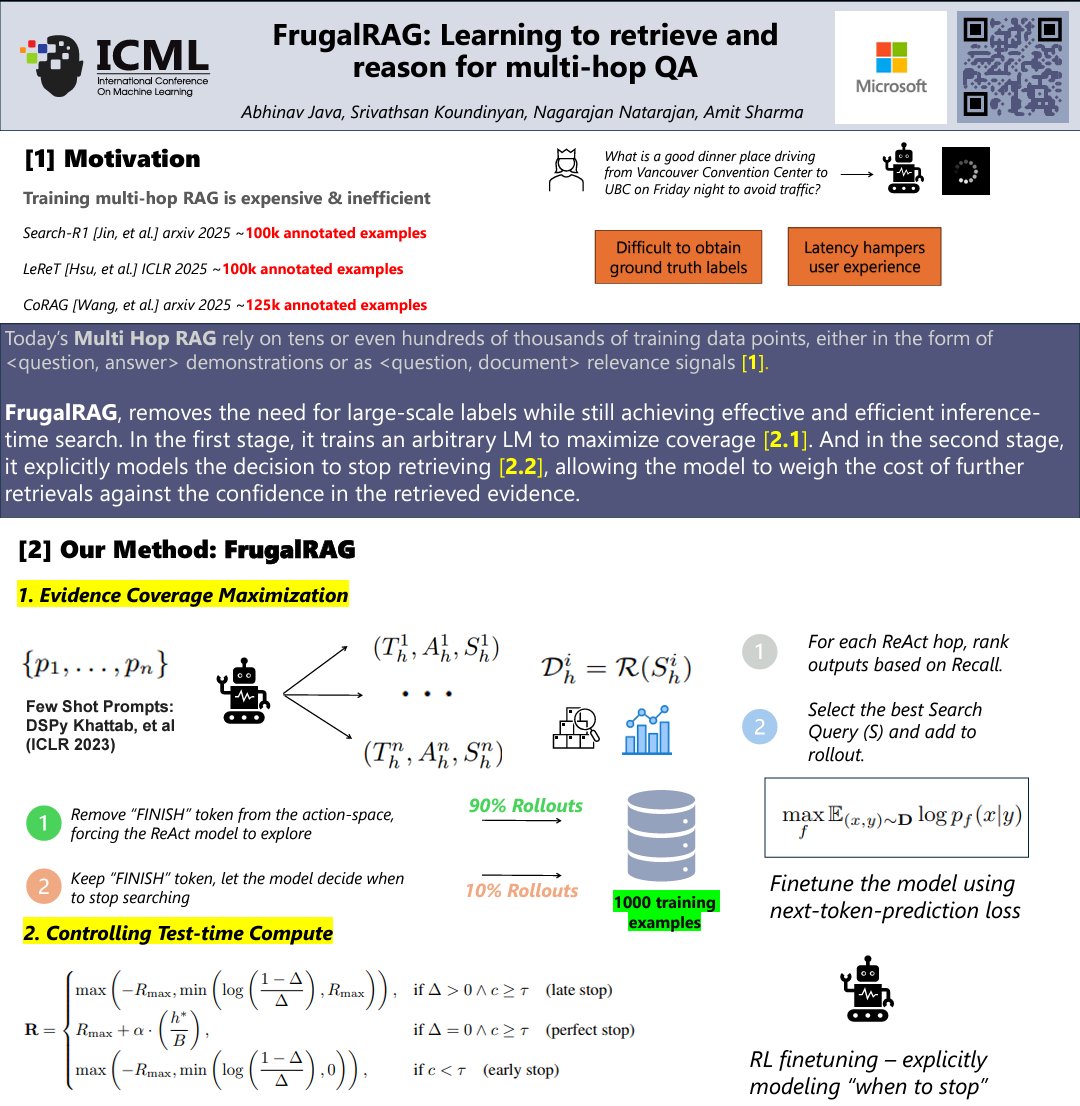

🚀 Meet FrugalRAG at #ICML2025 in Vancouver 🇨🇦!
📍 July 18 – VecDB Workshop, West 208–209
📍 July 19 – ES-FoMO Workshop, East Exhibition Hall A
Come chat with me and @naga86 and learn how we're rethinking training efficiency and inference latency for RAG systems. 🧵

A thread 🧵 TL;DR: We’re working on making NumPy’s cross-platform 128-bit float operations go brrr... 🔥 So why are quad-precision (128-bit) linear algebra ops so slow, and how are we fixing it?

I can't stress enough how useful this trick has been for me over all these years. It reduces GPU memory by a factor of N, where N is the number of losses, at literally no cost (same speed, exactly the same results down to the last decimal digit). For example... [1/2]

This simple PyTorch trick will cut your GPU memory use in half / double your batch size (for real). Instead of adding the losses and then calling backward, it's better to call backward on each loss separately (which frees that loss's computational graph). The results will be exactly identical.
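A minimal sketch of the trick, assuming each loss comes from its own forward pass (here, separate mini-batches through a toy model) and that backward is called right after each loss is computed. The model, the squared-output loss, and the helper names `make_model`, `grads_summed` and `grads_per_loss` are hypothetical, chosen only for illustration. Summing first keeps every forward graph alive until the single backward call; calling backward per loss frees each graph immediately, while `.grad` accumulates across calls, so the final gradients should match.

```python
import torch
import torch.nn as nn


def make_model(seed: int) -> nn.Module:
    # Hypothetical toy model, used only to compare the two strategies.
    torch.manual_seed(seed)
    return nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 1))


def grads_summed(model: nn.Module, batches: list) -> list:
    """Baseline: build every graph, sum the losses, call backward once.
    All N forward graphs stay in memory until total.backward() runs."""
    model.zero_grad()
    total = sum(model(x).pow(2).mean() for x in batches)
    total.backward()
    return [p.grad.clone() for p in model.parameters()]


def grads_per_loss(model: nn.Module, batches: list) -> list:
    """Trick: call backward on each loss as soon as it is computed.
    backward() frees that loss's graph, and gradients accumulate in
    p.grad across calls, so only one graph is alive at a time."""
    model.zero_grad()
    for x in batches:
        loss = model(x).pow(2).mean()
        loss.backward()
    return [p.grad.clone() for p in model.parameters()]


if __name__ == "__main__":
    torch.manual_seed(0)
    batches = [torch.randn(4, 8) for _ in range(3)]
    model = make_model(0)
    a = grads_summed(model, batches)
    b = grads_per_loss(model, batches)
    print(all(torch.allclose(g1, g2) for g1, g2 in zip(a, b)))  # prints True
```

The comparison uses `torch.allclose` rather than exact equality, since floating-point accumulation order could in principle differ. Note that if several losses share one forward graph (for example, two heads on a single backbone pass), the first `backward()` frees the shared buffers, so the second call would need `retain_graph=True`, which keeps that graph alive and gives up the memory savings.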

Thrilled to share that our paper "ReEdit: Multimodal Exemplar-Based Image Editing with Diffusion Models" got accepted at #WACV2025!
- 4x faster than baselines
- No fine-tuning required
- A dataset of 1,474 exemplar pairs
- Code + unified pipeline released!
🧵 Thread + GH and dataset 👇

i can't believe ChatGPT lost its job to AI

Accepted at #NAACL2025 How can individuals and organizations prevent their public text data from being exploited by open and closed language models? 🛡️ The answer lies in information theory!

Thrilled to announce that our five-month-old lab got three papers accepted at #NAACL2025!
1. Operationalizing Right to Data Protection: shorturl.at/IPfxn
2. Analyzing Memorization in LLMs through the Lens of Model Attribution: shorturl.at/1OBdf
3. Impact of…

Microsoft Research India is excited to announce applications are open for our Research Fellow program (deadline 15th Feb 2025). Details of the program and the application are here: 🔗 Research Fellow program: aka.ms/msrirf @MSFTResearch

Don’t race. Don’t catch up. Don’t play the game. Instead, do rigorous science. Do controlled experiments. Formulate clear hypotheses. Carefully examine alternative hypotheses. Rule out confounders. Listen to the physics of LLM tutorial 10 times and recite every single word of it.…

The gap between open-sourced models and closed-source models is getting larger and larger. What should academia do to catch up?

Put your work on arXiv and release 100% of your code/data/Mathematica notebooks/etc., packaged up in such a way that *anyone* can reproduce all of your results in a single click. Call out people who don’t. That’s what peer review should look like in the 21st century.

Academic journals are nothing but a nuisance for theoretical work and should be a thing of the past

Ok election is over pls make my Twitter feed only AI research again, thx

But is it physics? Hopfield tackled three profound questions about emergence: condensed matter from interactions between elementary particles, the magic of life from interactions between dead molecules, and intelligence/consciousness from interactions between dumb neurons.