Gabriele Berton

@gabriberton

Postdoc @Amazon working on MLLM - ex @CarnegieMellon @PoliTOnews @IITalk

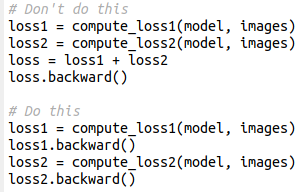

This simple PyTorch trick will cut your GPU memory use in half / double your batch size (for real). Instead of adding the losses and then calling backward once, it's better to call backward on each loss as soon as it's computed (which frees that loss's computational graph). Results will be exactly identical.
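A minimal sketch of the trick, assuming each loss comes from its own forward pass (if the losses share one graph, the first backward would need `retain_graph=True` and nothing is freed early). The toy model, batch shapes, and variable names here are made up for illustration; the check at the end only confirms that both variants leave the same gradients in `.grad`, it does not measure memory.

```python
import copy

import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy setup: one small model, two independent batches.
model_a = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 1))
model_b = copy.deepcopy(model_a)  # identical weights for a fair comparison
criterion = nn.MSELoss()

x1, y1 = torch.randn(8, 16), torch.randn(8, 1)
x2, y2 = torch.randn(8, 16), torch.randn(8, 1)

# Variant A: add the losses, then call backward once.
# Both computational graphs stay in memory until backward runs.
loss_a = criterion(model_a(x1), y1) + criterion(model_a(x2), y2)
loss_a.backward()

# Variant B: call backward on each loss right away.
# The graph of the first loss is freed before the second forward pass,
# and gradients accumulate in .grad across the two backward calls.
criterion(model_b(x1), y1).backward()
criterion(model_b(x2), y2).backward()

# Compare the accumulated gradients of the two variants.
grads_match = all(
    torch.allclose(pa.grad, pb.grad, atol=1e-6)
    for pa, pb in zip(model_a.parameters(), model_b.parameters())
)
print("gradients match:", grads_match)
```

In a real training loop the optimizer step and `zero_grad()` would come after the last backward call, so the accumulated gradient is the same one the summed loss would have produced.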

It's not every day that you see a 2016 method being SOTA in 2025, outperforming the CVPR 2025 Best Paper award winner. ORB-SLAM is 🔥

VGGT-Long: Chunk it, Loop it, Align it -- Pushing VGGT's Limits on Kilometer-scale Long RGB Sequences

Kai Deng, Zexin Ti, Jiawei Xu, Jian Yang, Jin Xie

tl;dr: sequence -> overlapping chunks -> alignment and loop closure with confidence; kind of same with large-scale GS. ORB-SLAM2 is…